The optimization, as told by Tim

Arcanum was way bigger than fallout, we did it with 4 programmers. It was set in an open world which was huge. So we were trying to deal with not only how to store all that, but also how to do calculates over, like, creatures. Because, there could be tens of thousands, or hundreds of thousands of creatures if we tried to load every creature in the instance you were in. If you were out in the terrain in the open map, we couldn't possibly load every sector, so we had to load things in and out and that's where the optimization came in.

...

In Arcanum, we did something called object compaction. Where an object only stored how it differed from the prototype it was based on. For many things, that prototype stored almost everything. So if you had an item like a dagger, or, an object like a zombie. Almost everything you need to know about that creature is stored in the prototype. How many hitpoints it has total, how it does attacks, what art to use, all that is stored in the prototype. Typically, the only thing that changed between the object and the prototype was where it was located in the world, which might be in the world or in the inventory, and it's current hitpoints. The maximum or whatever was in the prototype, unless of course the designer wanted to drop it down and say these are really tough zombies, I'm going to give them 50% more hit points. Also items would store their current number of charges, creatures would store ability use, like if there was an ability they could only use once every 30 seconds then the timer would of course be part of the object.

What we did, and a lot of this was done by Mark Harrison who's one of the programmers, he used bitfields to indicate in an object, what fields were present in that object. And if they weren't present, then go to the prototype and get it. So lets say you wanted to look up hitpoints, and that was the 4th field that would have been on the object if we were storing all of it. So you look and you count in, skip over the first 3 bits, and if it's a 1 then that means you grab it from here, in the object, if it's a 0, then that means go grab it from the prototype.

Now if the first 3 fields were zero, that meant the first field in the object was hitpoints. If any of those first three weren't 0, then you'd have to skip over a certain number of fields to get to the hitpoints. Mark made a whole thing, I think it was called BigBits, that stored an array, so you could have bits as long as you wanted, you weren't restricted to 32, and we either knew how big they were gonna be, or that was stored somewhere so you knew how many bytes to skip if fields existed in the object but you were looking for a field later.

Here's what I wanted to point out, all of that stuff. The big bits you have to store. That was extra memory that was in each object, but even with that bit field overhead, we saved SO much memory, because there were tons of objects in the world that were used a ton. But almost never differed from the prototype. Walls, props like trees and rocks, those almost always just varied from the prototype by their location. Everything else about it, the art is used, its maximum hitpoints, everything were never varied. This was true for items and monsters as well. So all the swords in the game, there was one sword prototype, and it stored everything you needed about a sword. What animation to use, what it looked like in inventory, how much damage it did, all of that. The only thing that varied, usually, if someone had a sword, was where it was located and if it was damaged at all.

Now if you wanted to make a +1 sword, you had two options. You could say here's the sword prototype and heres the plus one sword that varies because its 2 hit field it has a plus one in it isntead of a 0, maybe the damage also has a plus one. That's one way to do it, or you could make a prototype, if you think you're going to have a lot of +1 swords then you coudl make a +1 sword prototype and then they could all refer to that. It was basically a tradeoff, on how often, if an item was going to be used a lot? Make a prototype. Otherwise, just store the delta from the base prototype and you're done. Monsters were the same way, if the monster used most of the same art, almost all the same abilities and all that, just delta it from the prototype.

Pretty neat sounding right? Offseting by a certain amount to fetch the fields out is cool, but not something one does in a higher level language like Java. That said, the principal of what he's talking about, delegating out to a prototype for commonly shared properties instead of duplicating the data, is one that we can take advantage of in Java.

Creating the LibGDX project

As usual with these little side gaming projects, I'm going to use LibGDX for this. So, running the usual launcher from the wiki we can generate the project very quickly:

I left HTML checked as a platform in the screenshot above because I thought that maybe I'd be able to create something that we

could embed onto the website here. But,

unsurprisingly, and spoiler I suppose, calling out to Runtime in Java to get the current amount of memory is not supported

by the HTML translation of the java code. That said, we can take the generated code and then proceed to add a couple tweaks to it. First

off, let's add an FPS counter since it'd be neat to see if the FPS changes based on the optimization we'll do. GDX sets us up with a game

that extends the ApplicationAdapter, so we'll be tweaking the render method directly:

@Override

public void render () {

int fps = Gdx.graphics.getFramesPerSecond();

ScreenUtils.clear(1, 0, 0, 1);

batch.begin();

batch.draw(img, 0, 0, 30f, 30f);

font.draw(batch, ""+ fps, 500f, 460f); // <-- we added this

batch.end();

}

And we've got a cool 60FPS with our default splashscreen image. I'm going to just keep out window size set to 640 by 480 for simplicity, and not bother with a real camera projection or world units for this experiment. We really just need to make a bunch of objects and compare how the memory pressure looks between each. To make this more interesting than looking at the bright red image, let's create a sprite and then similar to how Tim described, we'll make a bunch of objects that have stuff in them and render each one out. So, here's our sprite:

And with a couple of lines of initialization in the create method, and an update to the render method

@Override

public void create () {

batch = new SpriteBatch();

img = new Texture("badlogic.jpg");

sprite = new Texture("sprite.png");

font = new BitmapFont();

font.setColor(Color.BLACK);

font.setUseIntegerPositions(true);

}

@Override

public void render () {

int fps = Gdx.graphics.getFramesPerSecond();

ScreenUtils.clear(1, 0, 0, 1);

batch.begin();

batch.draw(img, 0, 0);

batch.draw(sprite, 320, 240);

font.draw(batch, ""+ fps, 500f, 460f);

batch.end();

}

We have our little energy ball on the screen:

Great. So now that we can visualize an object, let's visualize how much memory we have in use.

We can fetch how much total memory the JVM is using with the Runtime class, so let's

wrap it up in a tiny little object:

package spaces.peetseater.experiment;

class Memory {

private long totalMemory;

private long usedSoFar;

private final Runtime runtime;

public Memory() {

runtime = Runtime.getRuntime();

totalMemory = runtime.totalMemory();

calculateMemory();

}

public void calculateMemory() {

totalMemory = runtime.totalMemory();

usedSoFar = (totalMemory - runtime.freeMemory()) / (1024 * 1024);

}

public long used() {

return usedSoFar;

}

}

Then we can create an instance of it in the create method and then display it

from the render method

public void create() {

...

memory = new Memory();

}

@Override

public void render () {

int fps = Gdx.graphics.getFramesPerSecond();

memory.calculateMemory();

ScreenUtils.clear(1, 0, 0, 1);

batch.begin();

batch.draw(img, 0, 0);

batch.draw(sprite, 320, 240);

font.draw(batch, ""+ fps, 500f, 460f);

font.draw(batch, memory.used() + "MB", 500f, 440f);

batch.end();

}

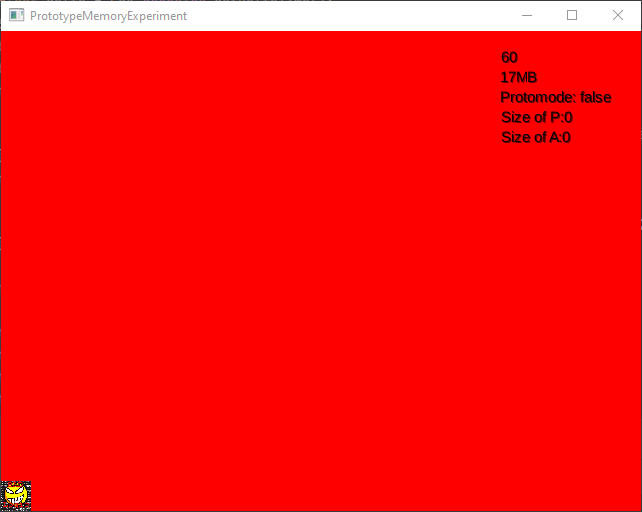

17MB seems like a lot for what we're doing, but this particular screenshot is when I was running the app from my IntelliJ IDE, so there's probably overhead there. Anyway. Let's get to the real meat of our equivalent of what Tim was talking about. First, our class that won't be using a prototype will have a location as well as some basic data like name and speed.

package spaces.peetseater.experiment;

import com.badlogic.gdx.math.MathUtils;

public class NotUsingPrototypeCircle {

private float x;

private float y;

private final String name;

private final float speed;

public NotUsingPrototypeCircle(float x, float y, String name, float speed) {

this.x = x;

this.y = y;

this.name = name;

this.speed = speed;

}

public void update(float delta) {

float dx = MathUtils.random(-2, 2);

float dy = MathUtils.random(-2, 2);

this.x += dx * speed * delta;

this.y += dy * speed * delta;

}

... getters ...

}

And to make things stupidly simple, let's just use one of these as the prototype for our "optimized" object.

package spaces.peetseater.experiment;

import com.badlogic.gdx.math.MathUtils;

public class UsingPrototypeCircle {

private final NotUsingPrototypeCircle prototype;

private float x;

private float y;

public UsingPrototypeCircle(float x, float y, NotUsingPrototypeCircle prototypeCircle) {

this.prototype = prototypeCircle;

this.x = x;

this.y = y;

}

public void update(float delta) {

float dx = MathUtils.random(-2, 2);

float dy = MathUtils.random(-2, 2);

this.x += dx * getSpeed() * delta;

this.y += dy * getSpeed() * delta;

}

public String getName() {

return this.prototype.getName();

}

... getters ...

}

I'm not going all in on what Tim was describing. No bitfields here, I'm not going to store a list of fields rather than just having fields. But the principal of saving memory by using a prototype should still apply. So, with our objects defined we can wire up a button press to generate a whole bunch of these so that we can watch the memory usage change.

...

public class PrototypeMemoryExperiment extends ApplicationAdapter {

boolean usePrototype;

LinkedList<NotUsingPrototypeCircle> allInOnes = new LinkedList<>();

LinkedList<UsingPrototypeCircle> prototypical = new LinkedList<>();

int maxNumber = 1000000;

public void create () {

...

usePrototype = false;

Gdx.input.setInputProcessor(new InputAdapter() {

@Override

public boolean keyUp(int keycode) {

if (keycode == Input.Keys.SPACE) {

usePrototype = !usePrototype;

System.gc();

try {

Thread.sleep(1000);

} catch (InterruptedException e) {

throw new RuntimeException(e);

}

createSetOfData();

}

if (keycode == Input.Keys.UP) {

maxNumber *= 10;

}

if (keycode == Input.Keys.DOWN) {

maxNumber /= 10;

}

return super.keyUp(keycode);

}

});

}

private void createSetOfData() {

allInOnes.clear();

prototypical.clear();

if (usePrototype) {

NotUsingPrototypeCircle proto = new NotUsingPrototypeCircle(0, 0, "Ball", 2);

for (int i = 0; i < maxNumber; i++) {

float x = MathUtils.random(0, 640);

float y = MathUtils.random(0, 480);

prototypical.add(new UsingPrototypeCircle(x,y, proto));

}

} else {

for (int i = 0; i < maxNumber; i++) {

float x = MathUtils.random(0, 640);

float y = MathUtils.random(0, 480);

allInOnes.add(new NotUsingPrototypeCircle(x, y, "Ball", 2));

}

}

}

@Override

public void render () {

int fps = Gdx.graphics.getFramesPerSecond();

float delta = Gdx.graphics.getDeltaTime();

ScreenUtils.clear(1, 0, 0, 1);

batch.begin();

batch.draw(img, 0, 0, 30f, 30f);

for (NotUsingPrototypeCircle notUsingPrototypeCircle : allInOnes) {

notUsingPrototypeCircle.update(delta);

batch.draw(sprite, notUsingPrototypeCircle.getX(), notUsingPrototypeCircle.getY());

}

for (UsingPrototypeCircle usingPrototypeCircle : prototypical) {

usingPrototypeCircle.update(delta);

batch.draw(sprite, usingPrototypeCircle.getX(), usingPrototypeCircle.getY());

}

memory.calculateMemory();

font.draw(batch, ""+ fps, 500f, 460f);

font.draw(batch, memory.used() + "MB", 500f, 440f);

font.draw(batch, "Protomode: " + usePrototype, 500f, 420f);

font.draw(batch, "Size of P:" + prototypical.size(), 500, 400f);

font.draw(batch, "Size of A:" + allInOnes.size(), 500, 380f);

batch.end();

}

...

One thing about the above code that I want to point out in case you try to do something similar. The Thread.sleep

is not optional. If you want to call System.gc() for the purpose of actually triggering garbage collection, you

need to nudge the JVM to actually do it by giving it time to. Otherwise, the JVM is very smart and views your call to the

gc method as a suggestion. When I didn't have this code, the results were very very unclear. Probably because the

JVM is very very good at what it does.

So? Did it work? Does the prototype usage reduce memory? To avoid the IDE doing weird things, let's instead

run the dist task to get a simple jar and then run just that on its own so that we can get more

accurate results.

The results

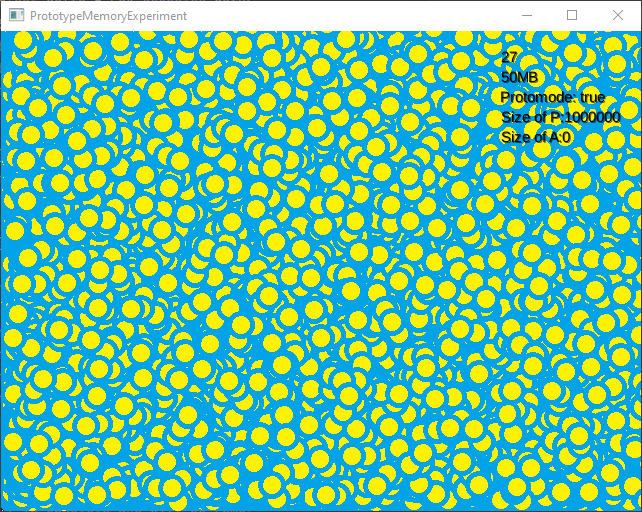

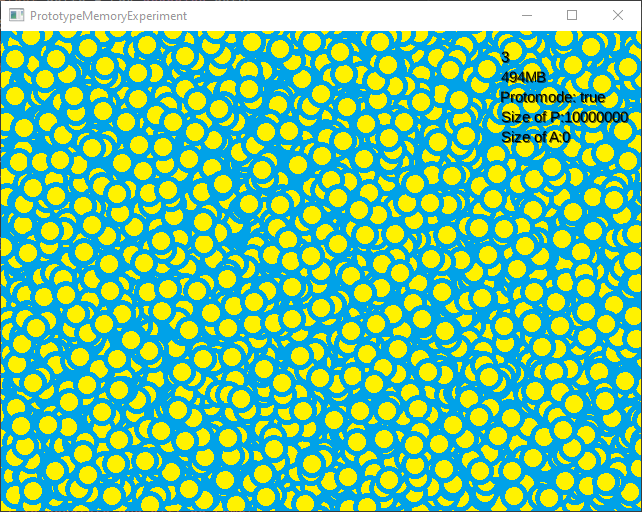

Well, to start, If I press space, we'll generate all the objects that are using the prototypes. As you can see (maybe) we're using 50MB of memory to create 1000000 of these objects and then rendering them to the screen. Our FPS dropped from 60 to 27 thanks to the volume of nonsense we're iterating over.

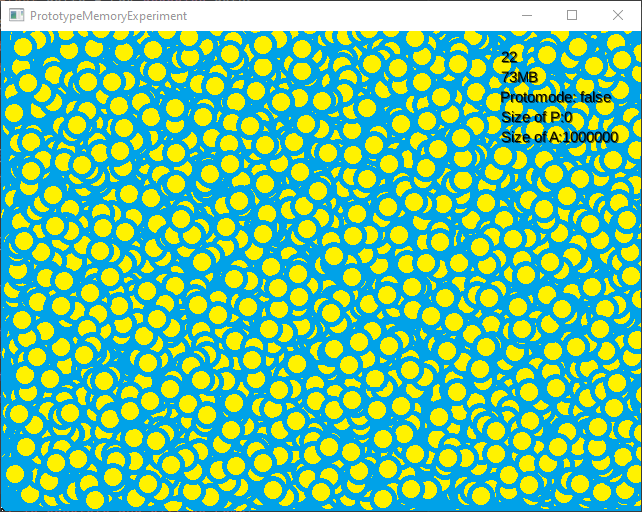

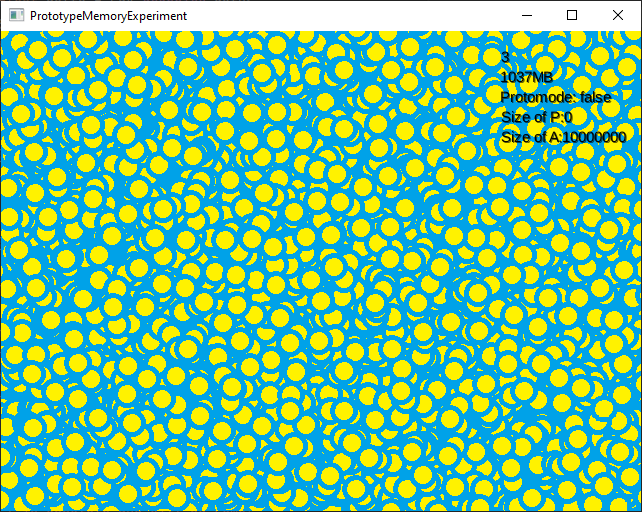

If I press space again. Then we get to see if we've managed to save memory! If so, then generating a million objects of the non-prototype instances should have higher memory. And we can see

It does! Pressing space multiple times seemed to always show the same result. The prototype-using code always had less memory:

So, unsurprisingly, having 1 object with 1 string in it is a lot more memory efficent than 1 million objects each with 1 string in it. Of course, if we used a static String then we probably wouldn't see this, but remember that we're trying to emulate what Tim was talking about in his video. And it does seem like things worked out pretty well. For fun, I pressed the up button just to see how bad the framerate got from trying to tell the system to render something 10 million times.

Pretty bad! A solid 3 frames per second. Amazing. But, as you can see, the memory usage stayed smaller in the prototype mode by a fair margin! So you can see that what Tim said in his video, about how this can really help optimize when you have a lot of objects is very true.

Addendum, some C to be true to the original idea

Since Java can't really show us pure memory manipulation, we can do this with some C code.

Since I'm on windows, I have to use cl and a development CLI window using the

tools from visual studio. It's been quite some time since I did any C (remember when I wrote

an HTTP server for a non-profit API app?) so I described the idea to Chat Gippity and

proofread some code and made some suggestions here and there. The result seems pretty good,

but I'm pretty sure there's a bug:

#include <stdio.h>

#include <stdint.h>

#include <stdlib.h>

// Define bit flags for properties

#define PROP_MAX_HP (1 << 0)

#define PROP_DAMAGE (1 << 1)

#define PROP_COST (1 << 2)

// Define the Prototype structure

typedef struct {

int max_hp;

int damage;

int cost;

} Prototype;

// Define a structure for overrides

typedef struct {

uint8_t property; // Which property is overridden

int value; // The overridden value

} Override;

// Define the GameObject structure

typedef struct {

int x, y; // Object-specific fields

Prototype *prototype; // Pointer to the shared prototype

Override *overrides; // Dynamic array of overrides

size_t override_count; // Number of overrides

} GameObject;

// Function to fetch a property value

int get_property(GameObject *obj, uint8_t property) {

// Check for override

for (size_t i = 0; i < obj->override_count; ++i) {

if (obj->overrides[i].property == property) {

return obj->overrides[i].value;

}

}

// If no override, fetch from the prototype

if (property == PROP_MAX_HP) return obj->prototype->max_hp;

if (property == PROP_DAMAGE) return obj->prototype->damage;

if (property == PROP_COST) return obj->prototype->cost;

return 0; // Default value for unknown properties

}

// Function to override a property

void set_property(GameObject *obj, uint8_t property, int value) {

// Check if the property is already overridden

for (size_t i = 0; i < obj->override_count; ++i) {

if (obj->overrides[i].property == property) {

obj->overrides[i].value = value; // Update existing override

return;

}

}

// Add a new override

obj->overrides = (Override *)realloc(obj->overrides, (obj->override_count + 1) * sizeof(Override));

obj->overrides[obj->override_count].property = property;

obj->overrides[obj->override_count].value = value;

obj->override_count++;

// Actively write to the memory to ensure it is committed

volatile int *force_commit = &obj->overrides[obj->override_count - 1].value;

*force_commit = value;

}

GameObject *create_object(Prototype *proto) {

GameObject *obj = (GameObject *)malloc(sizeof(GameObject));

obj->x = 0;

obj->y = 0;

obj->prototype = proto;

obj->overrides = NULL;

obj->override_count = 0;

return obj;

}

// Cleanup function to free dynamic memory

void free_game_object(GameObject *obj) {

free(obj->overrides);

obj->overrides = NULL;

obj->override_count = 0;

free(obj);

}

int main() {

Prototype warrior_proto = {100, 15, 50};

int baseline = get_memory_usage();

printf("Initial memory usage: %zu bytes\n", baseline);

/* Create 100 objects without overrides, we should only take up enough space for their new properties

* such as the x and y fields, but we avoid space for the hp, damage, and cost

*/

GameObject **objects = (GameObject **)malloc(100 * sizeof(GameObject *));

for (int i = 0; i < 100; i++) {

objects[i] = create_object(&warrior_proto);

}

printf("Diff in Memory after creating 100 objects: %zu bytes\n", get_memory_usage() - baseline);

/* Now we set some fields, and vary it up a bit for fun */

for (int i = 0; i < 100; i++) {

set_property(objects[i], PROP_MAX_HP, i);

if (i % 2 == 0) {

set_property(objects[i], PROP_DAMAGE, i);

}

}

printf("Diff in Memory after overriding 100 objects: %zu bytes\n", get_memory_usage() - baseline);

/* We're done, so clean up the objects and we _should_ return to the baseline amount of memory */

for (int i = 0; i < 100; i++) {

free_game_object(objects[i]);

}

free(objects);

// The memory remains elevated at 8192 bytes, I'm not sure if it's because we didn't free properly

// or if it's because windows is holding onto a memory pool. Try to compact the memory down

compact_memory();

empty_working_set();

printf("Diff Memory after cleanup: %zu bytes\n", get_memory_usage());

return 0;

}

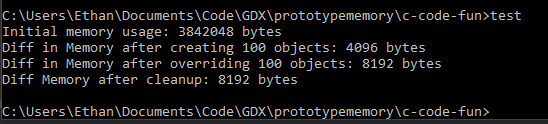

The issue seems to be a combination of how the operating system handles memory under the hood

as well as how the number of objects we're working with is small enough that it fits into one

slab of memory under the hood. The operating system doesn't want to let go of the memory it

allocated for the program, assumedly because it expects us to need it again. Initially, I

tried to use two methods, compact_memory and empty_working_set to

forcefully make the computer clean up after itself:

/* Windows stuff so we can check how much memory is in use */

#include <windows.h>

#include <psapi.h>

void compact_memory() {

HANDLE heap = GetProcessHeap();

HeapCompact(heap, 0);

}

void empty_working_set() {

HANDLE process = GetCurrentProcess();

SetProcessWorkingSetSize(process, -1, -1);

}

But this doesn't really help us measure anything because the entire layout of the memory shifts around and it becomes really hard to tell what's going on.

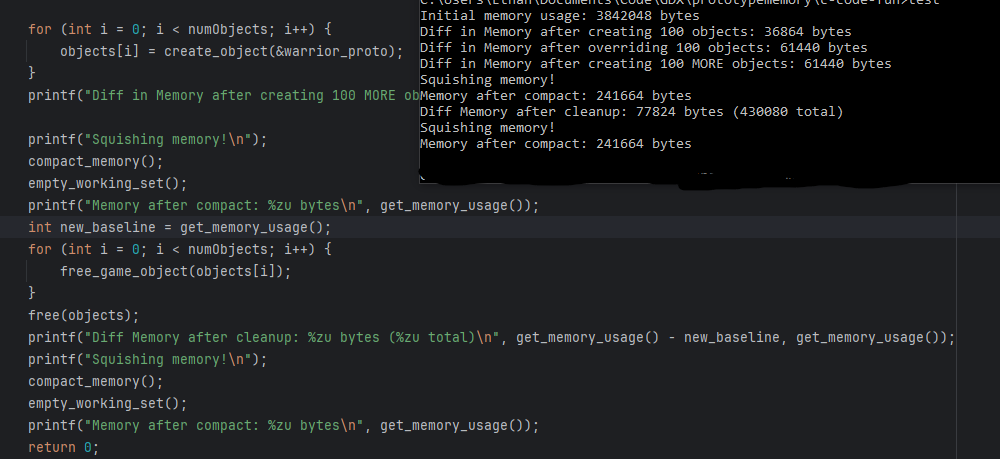

We can correct for the operating systems behavior and make it do what we want by increasing the amount of memory the program wants to use though. This was a suggestion from a discord user1 in LCOLONQ's server, and if we change the main code to this:

int main() {

Prototype warrior_proto = {100, 15, 50};

int baseline = get_memory_usage();

int numObjects = 100000;

printf("Initial memory usage: %zu bytes\n", baseline);

/* Create 100 objects without overrides, we should only take up enough space for their new properties

* such as the x and y fields, but we avoid space for the hp, damage, and cost

*/

GameObject **objects = (GameObject **)malloc(numObjects * sizeof(GameObject *));

for (int i = 0; i < numObjects; i++) {

objects[i] = create_object(&warrior_proto);

}

printf("Diff in Memory after creating %d objects: %zu bytes\n", numObjects, get_memory_usage() - baseline);

printf("Absolute memory usage: %zu bytes\n", get_memory_usage());

/* Now we set some fields, and vary it up a bit for fun */

for (int i = 0; i < numObjects; i++) {

set_property(objects[i], PROP_MAX_HP, i);

if (i % 2 == 0) set_property(objects[i], PROP_DAMAGE, i);

}

printf("Diff in Memory after overriding %d objects: %zu bytes\n", numObjects, get_memory_usage() - baseline);

printf("Absolute memory usage: %zu bytes\n", get_memory_usage());

/* We're done, so clean up the objects and we _should_ return to the baseline amount of memory */

for (int i = 0; i < numObjects; i++) {

free_game_object(objects[i]);

}

for (int i = 0; i < numObjects; i++) {

objects[i] = create_object(&warrior_proto);

}

printf("Diff in Memory after removing differences %d objects: %zu bytes\n", numObjects, get_memory_usage() - baseline);

for (int i = 0; i < numObjects; i++) {

free_game_object(objects[i]);

}

free(objects);

printf("Diff Memory after cleanup: %zu bytes\n", get_memory_usage() - baseline);

printf("Absolute memory usage: %zu bytes\n", get_memory_usage());

return 0;

}

Then we can see that the program now behaves as we expect it to. With the memory usage going up when we make a bunch of objects that differ from the prototype, and the usage goes back down after we go back to objects that are only using the prototype.

In the same way that the Java code was more memory efficient with just the prototypes, so too was the C code. It's a really neat idea that Tim shared about his Arcanum experience, and I really like listening to those ideas and thinking about the algorithms and the like. I think that I probably don't have what he described implemented exactly right, and I say that because I think based on what he said in the other video. is that that "TIG" thing he mentioned hands out handles to pointers, not pointers themselves. It'd be cool to look into how to write a memory allocator that self de-fragments, but I think that'd be the subject of another blog post.

I probably need to brush up on my C before doing that though, who knows, maybe it'll be finally be the thing that gets me to really try our rust! Until then, if you know what's going on or where I messed up in the C code above, let me know!