The plan

As you can hear me blabber about whilst playing Xenoblade Chronicles 3, there are a couple of different components that I thought would be fun to put together to make what's commonly referred to nowadays as a PNGtuber. Basically, rather than having a full 3D rig for a VTuber avatar, you just animate a simple flat image instead. I'd display this alongside my streams as a way to avoid having to set up a camera and be uncomfortable while live.

The fun part is that this will be a combination of a bunch of different fun things I've learned over the years!

First off, we'll be looking into an OBS plugin that caught my eye half a year ago or so. LocalVocal lets you run a local speech recognition model that turns what you're saying into text in real time! I want to take this text and perform some amount of keyword and sentiment analysis on it, then have an action taken to animate or swap around the image being used for my avatar in OBS.

This feels like one of those weekend projects that quickly grows into a month-long one, so I'm documenting my advances in personal knowledge here for anyone else who might be curious about this. I imagine I'll take the easy way in some places and the hard way in others. But let's see where we end up, yeah?

The "easy" part

Well, the simplest thing, of course, is going to be getting the plugin installed, so let's do that first as a milestone that will keep our fire burning. To start, we need to clone the git repository for LocalVocal:

```
$ git clone git@github.com:locaal-ai/obs-localvocal.git
Cloning into 'obs-localvocal'...
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
@ WARNING: REMOTE HOST IDENTIFICATION HAS CHANGED! @
@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@
IT IS POSSIBLE THAT SOMEONE IS DOING SOMETHING NASTY!
Someone could be eavesdropping on you right now (man-in-the-middle attack)!
It is also possible that a host key has just been changed.
The fingerprint for the RSA key sent by the remote host is
SHA256:uNiVztksCsDhcc0u9e8BujQXVUpKZIDTMczCvj3tD2s.
Please contact your system administrator.
```

Hm.

Oh right. Some intern or similar at GitHub managed to expose the private RSA host key a while back, so GitHub rotated it, and I never bothered to update my local machine because I could just clone over HTTPS instead. Well, I might as well do that now.

```
ssh-keygen -R github.com
vi ~/.ssh/known_hosts # and add in the key from the blog post.
```

And try again!

```
$ git clone git@github.com:locaal-ai/obs-localvocal.git
Cloning into 'obs-localvocal'...
git@github.com: Permission denied (publickey).
fatal: Could not read from remote repository.

Please make sure you have the correct access rights and the repository exists.
```

...

This is supposed to be the easy part. Do I just switch to cloning over HTTPS, or do I fix this silly SSH configuration on my Windows machine that I've been too lazy to fix since at least... checks blog post date ...2023?

Jeez, 2023? Man, I am lazy. Alright, let's fix this now. Who knows what else we'll need to download during our adventures, and I'm already tired of typing my GitHub password over HTTPS after exactly one time. This should be easy; I'm pretty sure my Windows configuration is just using an old key:

```
$ ssh -T git@github.com
git@github.com: Permission denied (publickey).
```

Yup. Cool. Checking the GitHub settings page, I've got one key in there, and it ain't this one.

```
cat ~/.ssh/id_rsa.pub
# And add that to github...
$ ssh -T git@github.com
Hi EdgeCaseBerg! You've successfully authenticated, but GitHub does not provide shell access.
```

Amazing. Let's try this again:

```
$ !git clone
git clone git@github.com:locaal-ai/obs-localvocal.git
Cloning into 'clone'...
remote: Enumerating objects: 2270, done.
remote: Counting objects: 100% (876/876), done.
remote: Compressing objects: 100% (327/327), done.
remote: Total 2270 (delta 732), reused 549 (delta 549), pack-reused 1394 (from 2)
Receiving objects: 100% (2270/2270), 71.86 MiB | 39.73 MiB/s, done.
Resolving deltas: 100% (1504/1504), done.
```

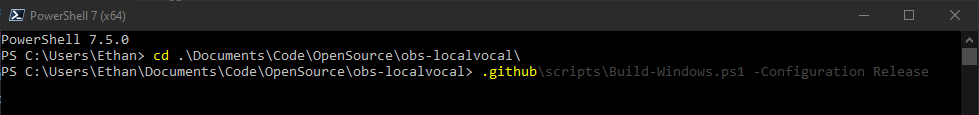

Hooray! Ok. We can finally start actually following the instructions in the README! Under the Windows section, it says I just need to run a command from the .github folder of the repository. Let's try that.

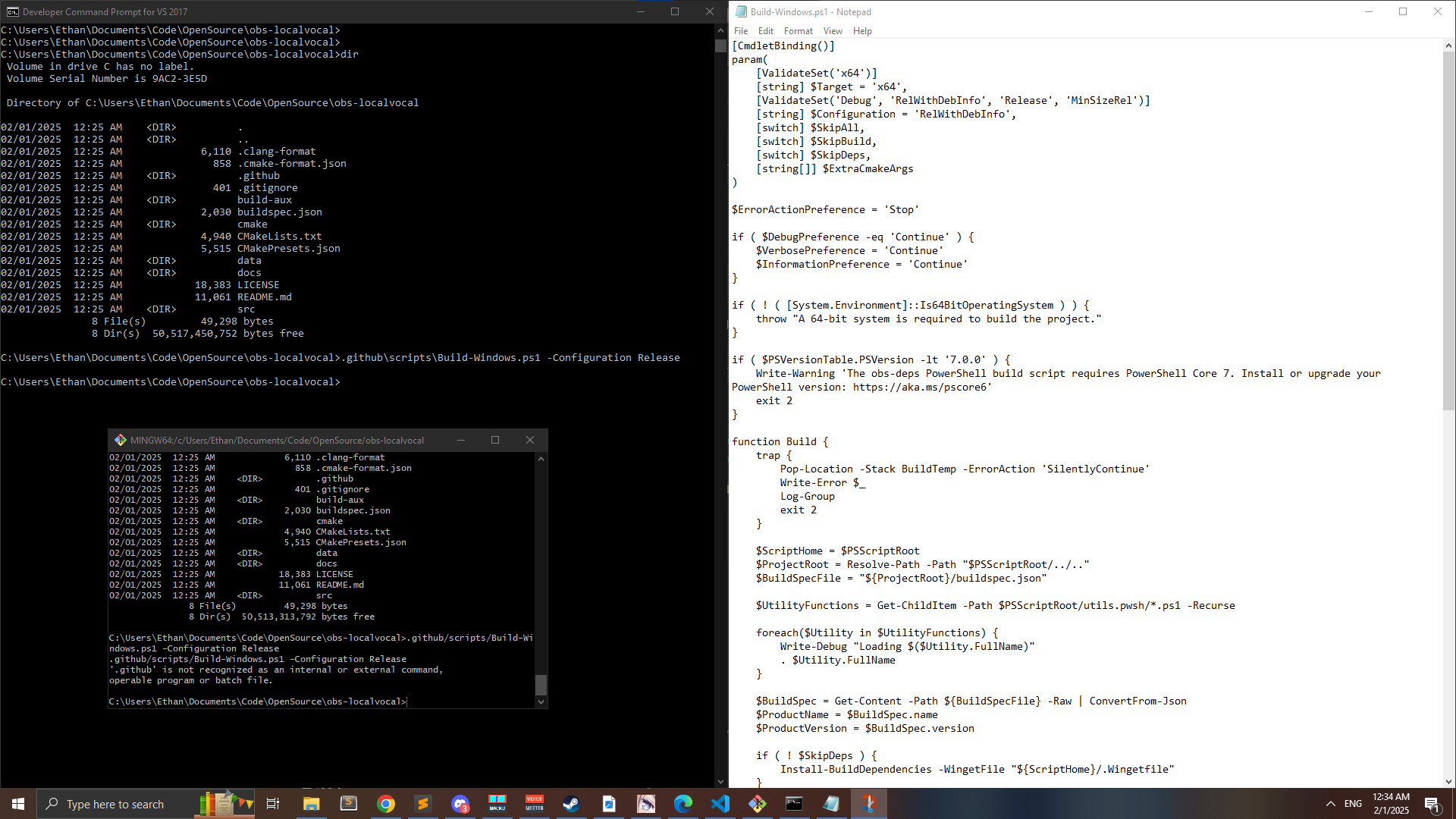

```
$ .github/scripts/Build-Windows.ps1 -Configuration Release
.github/scripts/Build-Windows.ps1: line 1: syntax error near unexpected token `]'
.github/scripts/Build-Windows.ps1: line 1: `[CmdletBinding()]'
```

Hm. Ah, well, it's probably expecting cmd.exe, not the Git Bash shell I'm using.

```
$ cmd
>
>.github/scripts/Build-Windows.ps1 -Configuration Release
.github/scripts/Build-Windows.ps1 -Configuration Release
'.github' is not recognized as an internal or external command,
operable program or batch file.
```

I could have sworn this was supposed to be the easy part. Ok. If not plain old cmd.exe, then maybe I need to be using the Developer Command Prompt that Visual Studio comes with? The last time I did some C on Windows I had to use that, so maybe building this needs the same sort of thing?

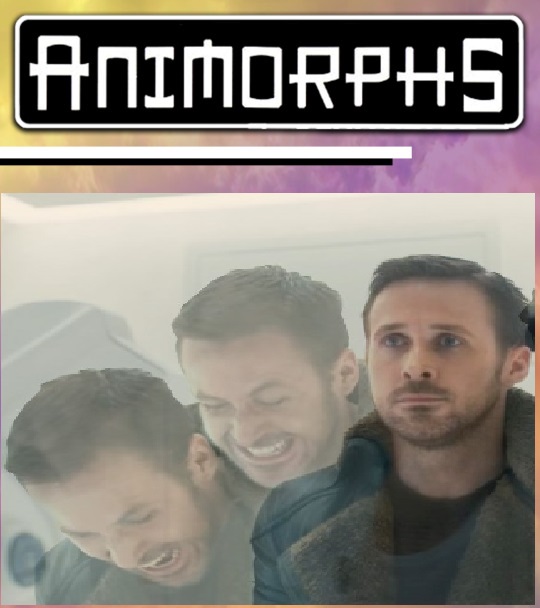

I'm starting to think God doesn't want me to install this plugin. For some strange reason, when I ran that command, all it did was open the file up in Notepad... but I DO see that there's a reference to PowerShell in the script. So... obviously I just need to use PowerShell then, right?

...

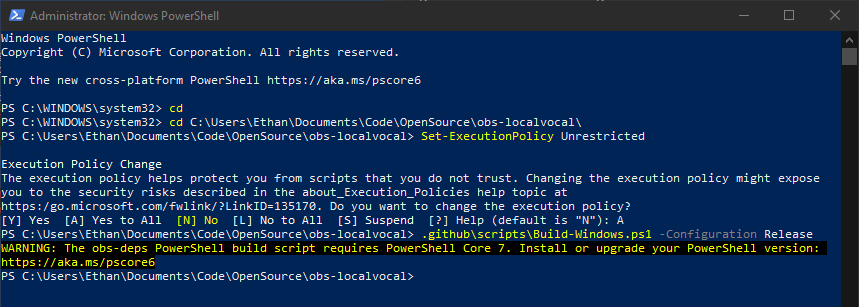

Well, fine. We can fix this. We'll just run Set-ExecutionPolicy Unrestricted.

It's almost comical how painful this is. Or at least I hope it is for you too, not just for me. Here I had all this motivation, and now it's being drained from me one microsoftcut error at a time. Luckily, at least this error I don't have to google.

Are. You. Kidding.

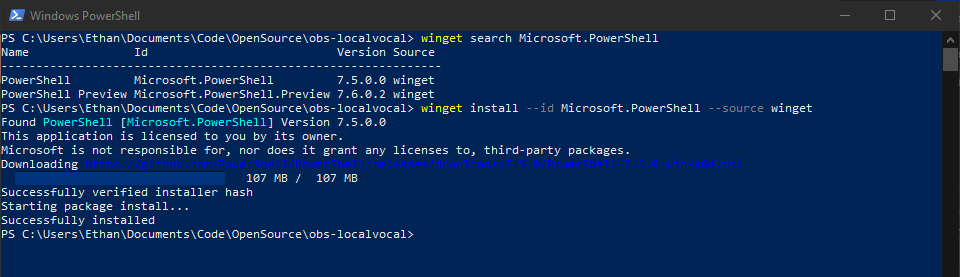

Ok. Fine. How do I upgrade PowerShell... this is... Ok, this is actually easy now. According to this Microsoft help article, I just do this:

```
> winget search Microsoft.PowerShell
> winget install --id Microsoft.PowerShell --source winget
```

Well look at that!

I just want to point out that when I was saving the screenshot, the screen was small enough that it line-wrapped at the s in shell, leaving the location I must be writing this from proudly displayed while I catalogued my experience. Well. Now that that's run, I should be able to continue, right?

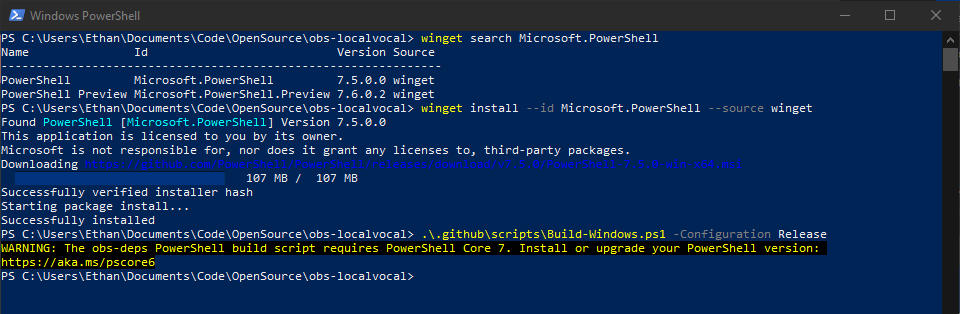

Well. I kind of assumed this would happen, so I'm not surprised. Let's get a fresh shell. I shouldn't expect Windows to upgrade itself in place, right?

I'm thinking my comically large collection of screenshots[1] from the anime I watch probably has something to describe how it feels when you open a fresh shell and get the same error as before. Ah, yes.

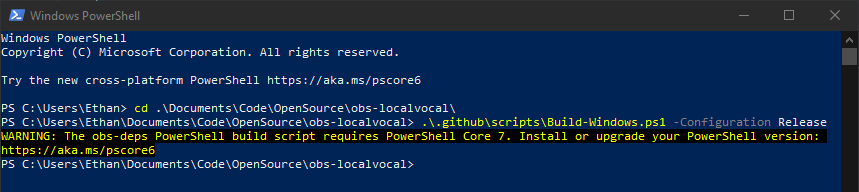

Let's just stop everything else I was doing and restart my computer. Surely that will fix the problem, right?

One restart later

Well, I just realized I lost my place in the video I was watching. Dang it. I was 10 or so hours in, and now I won't know where to pick up. But, on the bright side:

That seems promising. And hey, PowerShell 7 seems to have a pretty neat autocomplete based on the shell history, because it's suggesting the right paths and commands to me as I type them.

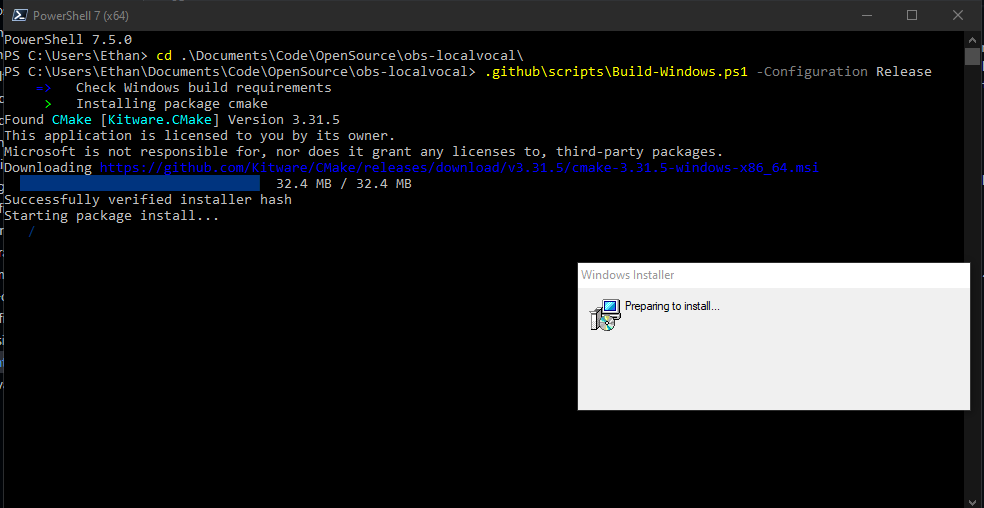

Cool. But does it work?

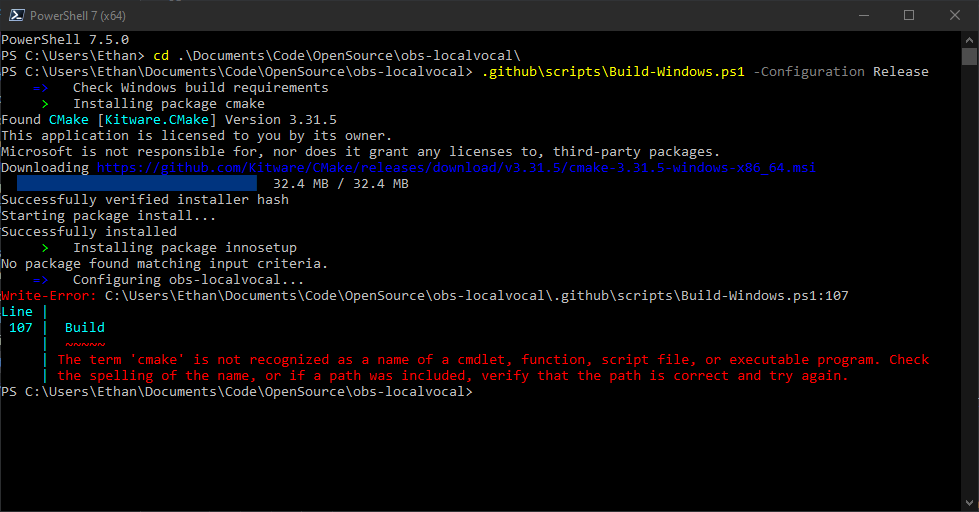

Yes! Yeeess! Yeeeeeeesssss!

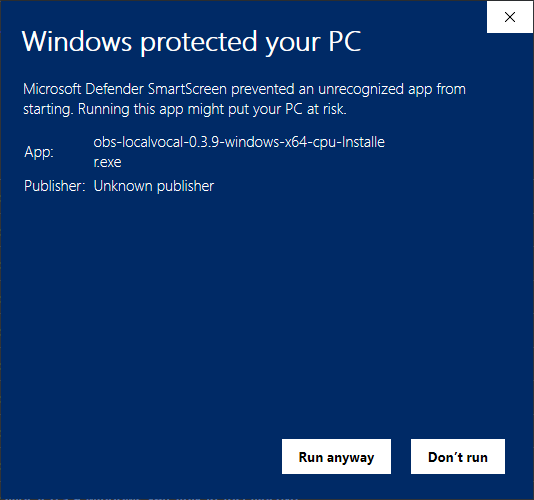

Fuck it. Let's just download the exe from the releases on github.

Hm.

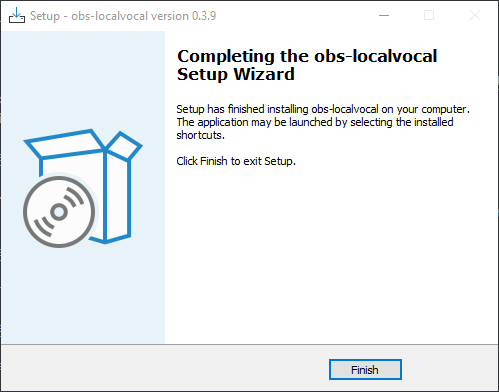

It's fine. This is a lot easier. And hey, there's a wizard! Hey! Holy crap. We're good!

But, does it actually show up in OBS now?

Ok, I guess this would have been the easy part if I had decided to install it from the prebuilt binary at the start. But, well, part of me was just kind of excited to build something open source on Windows for once. I'll live, though, because now we move on to the hard part.

The hard part

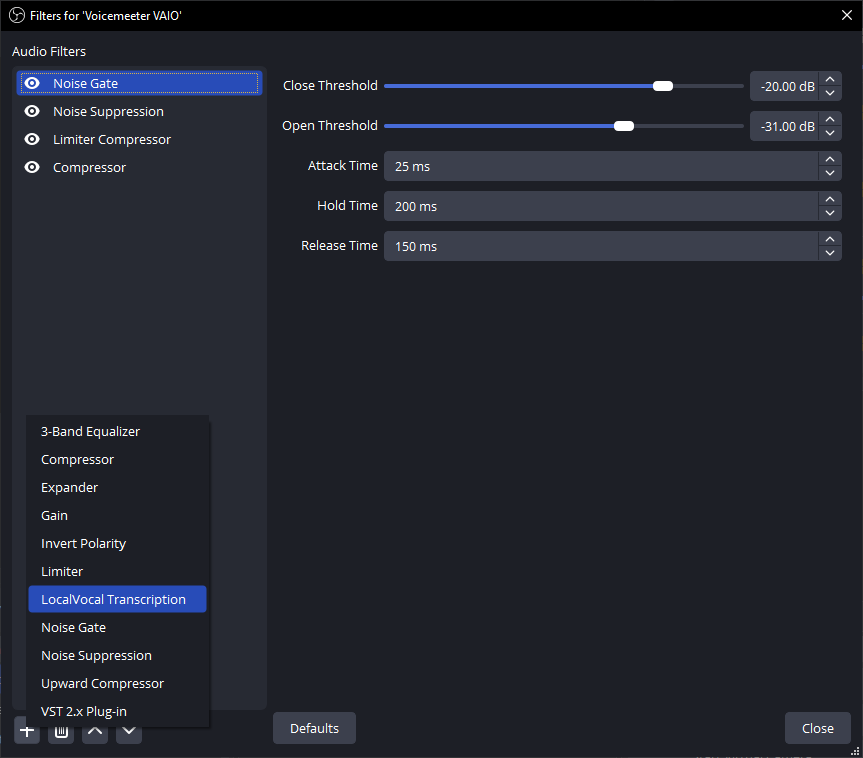

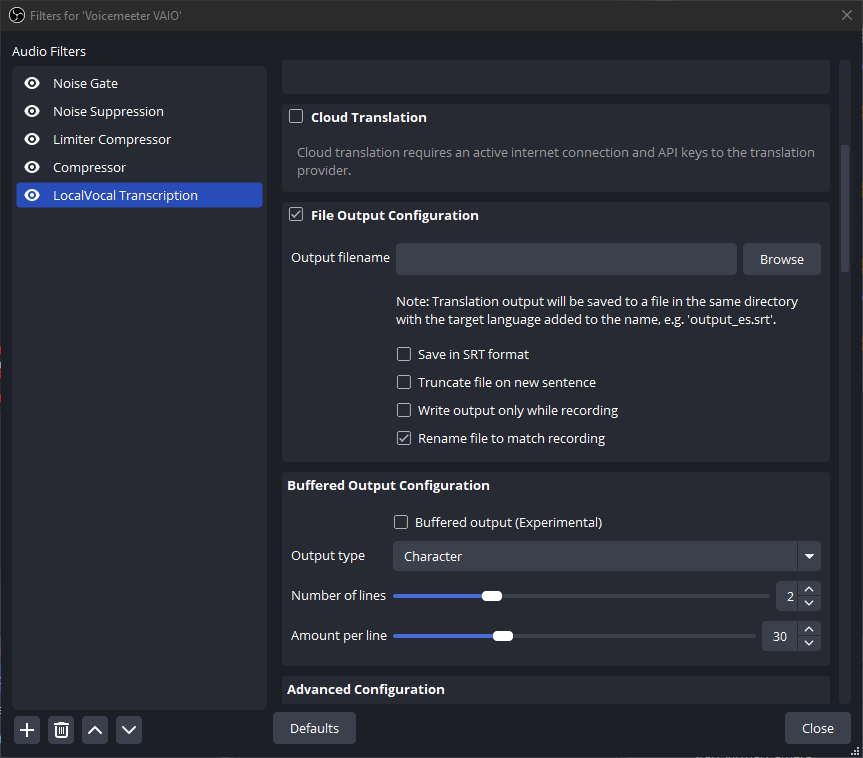

Now that we have the plugin, we need to get some data out of it. There's a whole bunch of options available once I switch the filter settings from "simple" to "advanced", one of which looks pretty promising:

On the GitHub README, two things stood out to me:

- Send captions to a .txt or .srt file (to read by external sources or video playback) with and without aggregation option

- Send captions on a RTMP stream to e.g. YouTube, Twitch

Both of these sound viable to me. The first seems like the simplest way to get the subtitles, while the second sounds like it might be a more interesting challenge, assuming we can direct the subtitle stream to a local address and set up something to listen to it instead. Since I have zero experience with RTMP, I'm going to experiment with the file first.

There's an option to truncate the file on every new sentence, which sounds like it could be handy, and I'm not sure I need to keep the full context around the entire time anyway. Then there's the general question of how I'm going to listen for changes to the file in the first place. So... the only real way to answer this is to get some code on the ground.

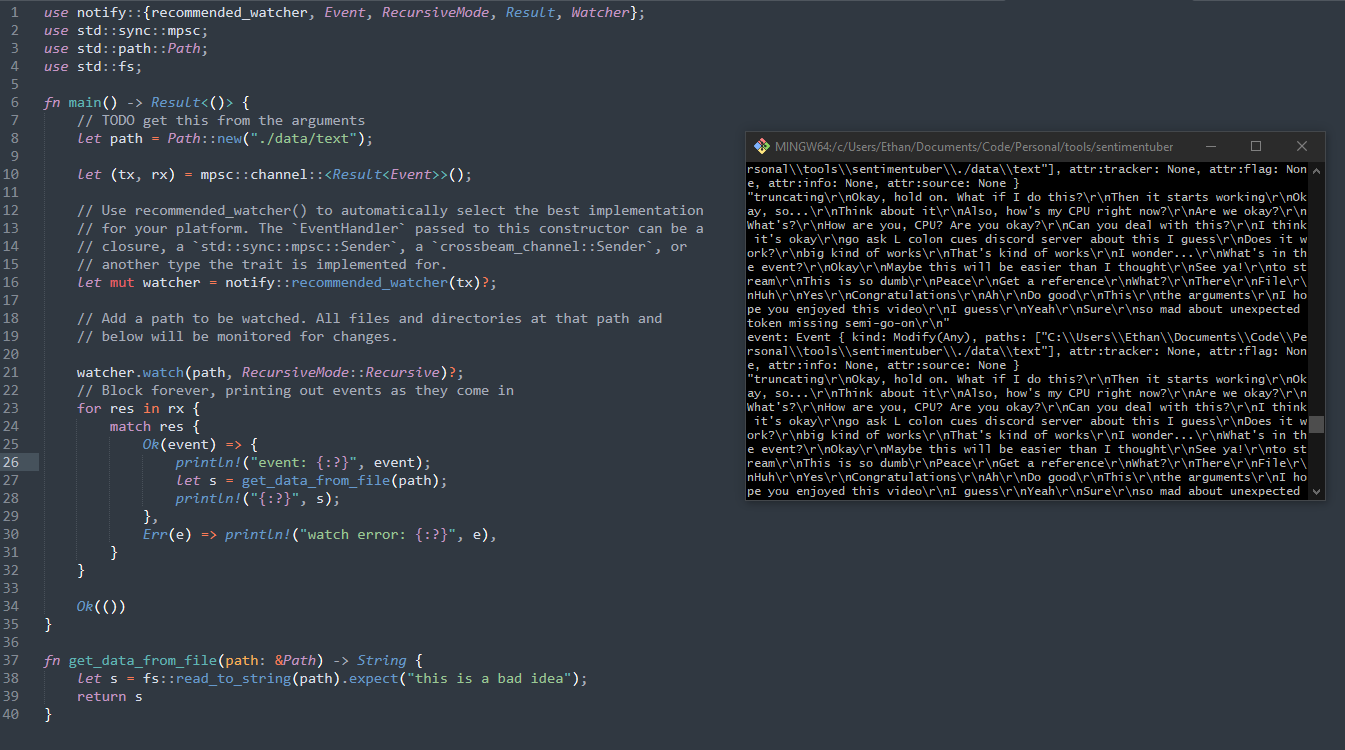

Rust makes this impressively easy with the notify library. Before finding this my thoughts were:

- Can I open the file for reading while OBS is writing to it?

- If I memory mapped the file, would it stay up to date as OBS wrote to it?

- If I tail the file, do I get an update? Could I use stdin for the program?

- Other dubious thoughts of someone who hasn't memory mapped a file in at least 8 years

And after I found the library and copied the example?

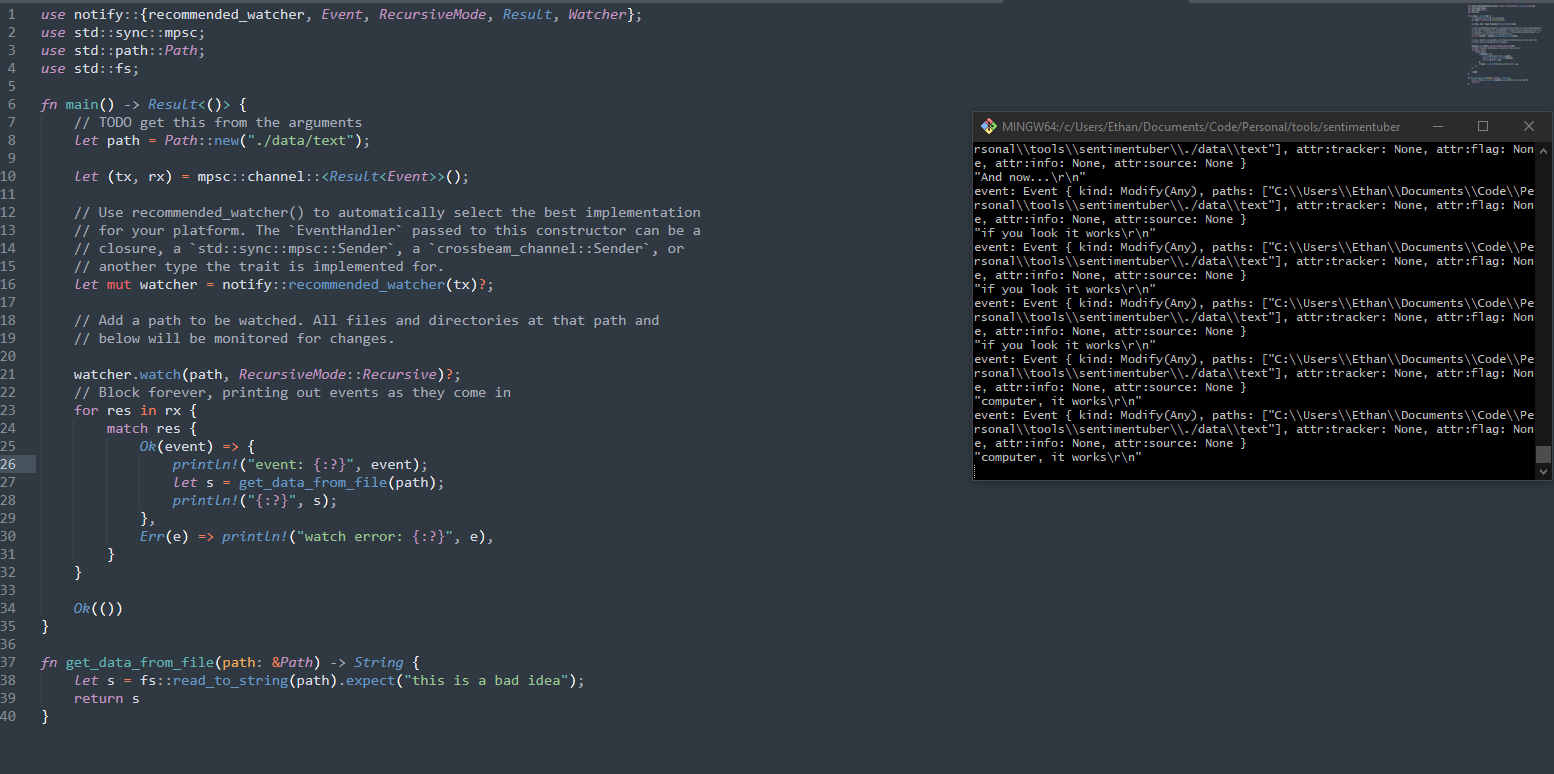

It works! Also... I should probably let OBS truncate the file so I can process each sentence one event at a time, rather than having to seek and compute the lines I haven't handled yet. Upon enabling the file truncation option in the OBS settings:

Yeah. This seems promising. And... Wait, wasn't this supposed to be the hard part? Maybe this will just be a weekend project after all!

For reference, here's the full Rust code listing from the screenshot. It's literally the same thing that's in the notify documentation for a basic watch, but with a file read stuck into the event handler.

```rust
use notify::{recommended_watcher, Event, RecursiveMode, Result, Watcher};
use std::sync::mpsc;
use std::path::Path;
use std::fs;

fn main() -> Result<()> {
    // TODO get this from the arguments
    let path = Path::new("./data/text");

    let (tx, rx) = mpsc::channel::<Result<Event>>();
    let mut watcher = notify::recommended_watcher(tx)?;
    watcher.watch(path, RecursiveMode::Recursive)?;

    // Block forever, printing out events as they come in
    for res in rx {
        match res {
            Ok(event) => {
                println!("event: {:?}", event);
                let s = get_data_from_file(path);
                println!("{:?}", s);
            },
            Err(e) => println!("watch error: {:?}", e),
        }
    }

    Ok(())
}

fn get_data_from_file(path: &Path) -> String {
    let s = fs::read_to_string(path).expect("this is a bad idea");
    return s
}
```
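For comparison, with the truncation option turned off, the watcher would have to remember how far into the file it had already read and only handle the bytes after that point. Here's a minimal std-only sketch of that bookkeeping, assuming OBS only ever appends to the file; TailReader and read_new are made-up names, not part of notify.

```rust
use std::fs::{File, OpenOptions};
use std::io::{self, Read, Seek, SeekFrom, Write};
use std::path::{Path, PathBuf};

/// Hypothetical helper: remembers how many bytes of the caption file have
/// already been handled and returns only the text appended since then.
struct TailReader {
    path: PathBuf,
    offset: u64,
}

impl TailReader {
    fn new(path: &Path) -> Self {
        TailReader { path: path.to_path_buf(), offset: 0 }
    }

    /// Reads whatever was appended since the previous call.
    fn read_new(&mut self) -> io::Result<String> {
        let mut file = File::open(&self.path)?;
        // A file shorter than what we've already read must have been
        // truncated, so start over from the beginning.
        if file.metadata()?.len() < self.offset {
            self.offset = 0;
        }
        file.seek(SeekFrom::Start(self.offset))?;
        let mut new_text = String::new();
        file.read_to_string(&mut new_text)?;
        // String::len counts bytes, which is what the offset tracks.
        self.offset += new_text.len() as u64;
        Ok(new_text)
    }
}

fn main() -> io::Result<()> {
    let path = std::env::temp_dir().join("tail_reader_demo.txt");
    std::fs::write(&path, "first sentence.\n")?;
    let mut reader = TailReader::new(&path);
    println!("{:?}", reader.read_new()?);

    // Simulate OBS appending another sentence to the same file.
    let mut file = OpenOptions::new().append(true).open(&path)?;
    file.write_all(b"second sentence.\n")?;
    println!("{:?}", reader.read_new()?);
    Ok(())
}
```

One gap: if the file is truncated and then refilled past the old offset between two events, this can't tell the difference, which is part of why letting OBS truncate is the simpler path.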

We'll clean this up in a bit. Let's move onto the next hard part. If we're lucky, it will be as easy as this was!

Sentiment Analysis

So. What I remember from college, as I noted while playing Xenoblade, is that I once implemented a Naive Bayes sentiment classifier. A quick search turns up plenty of blog posts describing how to do this yourself, which was my original plan.
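For reference, the core of a Naive Bayes sentiment classifier is small: count words per label while training, then pick the label with the highest log P(label) plus the sum of log P(word | label), using add-one (Laplace) smoothing so a single unseen word doesn't zero out the whole product. A toy sketch of that idea, not the version from college; the training sentences are made up.

```rust
use std::collections::{HashMap, HashSet};

/// Toy multinomial Naive Bayes classifier with add-one smoothing.
#[derive(Default)]
struct NaiveBayes {
    word_counts: HashMap<String, HashMap<String, usize>>, // label -> word -> count
    total_words: HashMap<String, usize>,                  // label -> words seen
    doc_counts: HashMap<String, usize>,                   // label -> documents seen
    vocabulary: HashSet<String>,
    total_docs: usize,
}

/// Lowercases and splits on anything that isn't a letter, digit, or apostrophe.
fn tokenize(text: &str) -> impl Iterator<Item = String> + '_ {
    text.split(|c: char| !c.is_alphanumeric() && c != '\'')
        .filter(|w| !w.is_empty())
        .map(|w| w.to_lowercase())
}

impl NaiveBayes {
    fn train(&mut self, text: &str, label: &str) {
        self.total_docs += 1;
        *self.doc_counts.entry(label.to_string()).or_insert(0) += 1;
        let counts = self.word_counts.entry(label.to_string()).or_default();
        for word in tokenize(text) {
            *counts.entry(word.clone()).or_insert(0) += 1;
            *self.total_words.entry(label.to_string()).or_insert(0) += 1;
            self.vocabulary.insert(word);
        }
    }

    /// Picks the label maximizing log P(label) + sum of log P(word | label).
    fn classify(&self, text: &str) -> Option<&str> {
        let vocab_size = self.vocabulary.len() as f64;
        let words: Vec<String> = tokenize(text).collect();
        self.doc_counts
            .iter()
            .map(|(label, &docs)| {
                let mut score = (docs as f64 / self.total_docs as f64).ln();
                let counts = &self.word_counts[label];
                let total = *self.total_words.get(label).unwrap_or(&0) as f64;
                for word in &words {
                    let count = *counts.get(word).unwrap_or(&0) as f64;
                    // Add-one smoothing keeps unseen words from zeroing the score.
                    score += ((count + 1.0) / (total + vocab_size)).ln();
                }
                (label.as_str(), score)
            })
            .max_by(|a, b| a.1.total_cmp(&b.1))
            .map(|(label, _)| label)
    }
}

fn main() {
    let mut model = NaiveBayes::default();
    model.train("I love this, it is great", "positive");
    model.train("what a wonderful happy day", "positive");
    model.train("I hate this, it is awful", "negative");
    model.train("what a terrible sad day", "negative");
    println!("{:?}", model.classify("this is great"));
    println!("{:?}", model.classify("awful sad day"));
}
```

Working in log space turns the product of many small probabilities into a sum, which avoids floating-point underflow on longer sentences.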

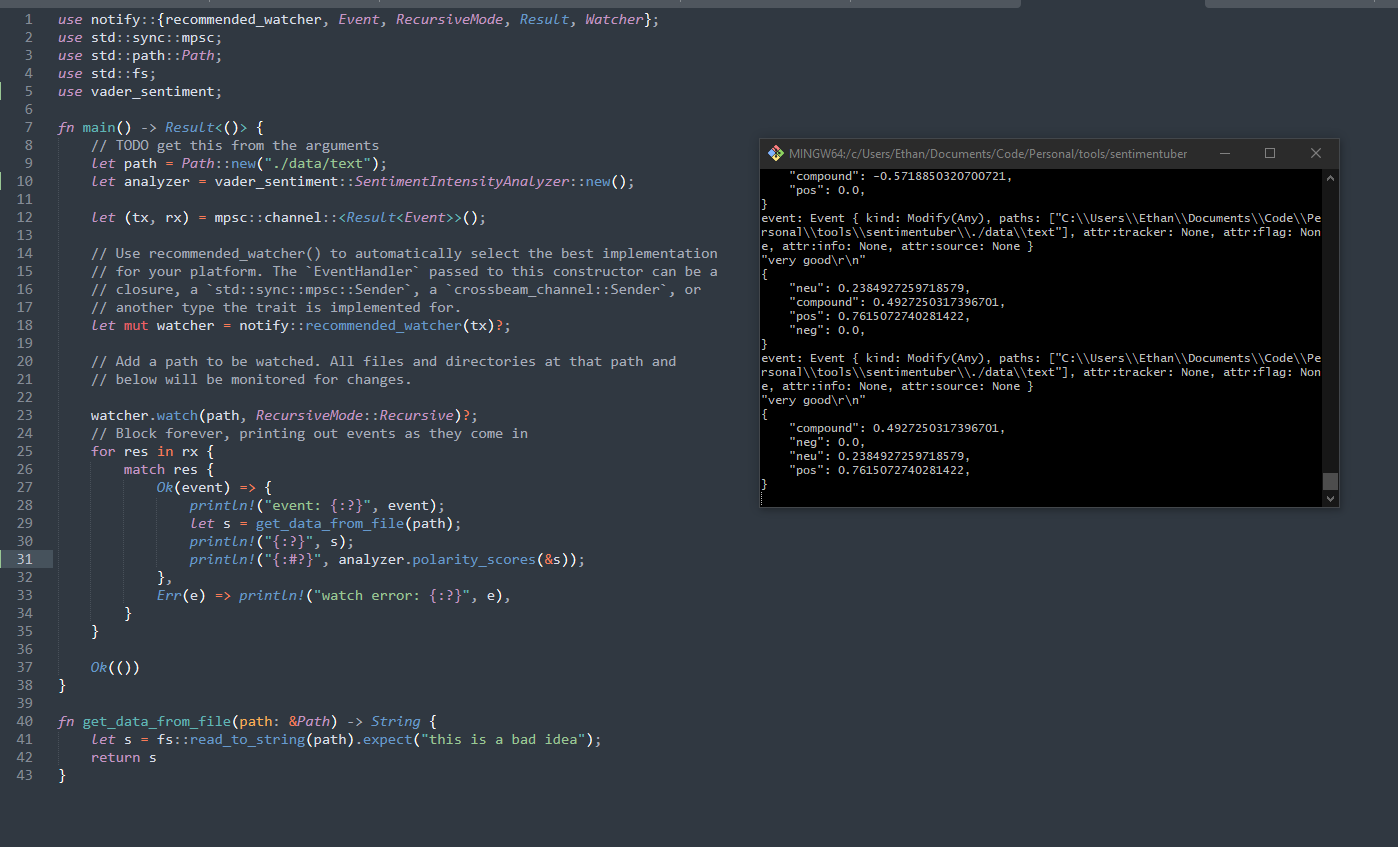

New plan. This is looking a lot like a weekend project, so let's find out if there's already some kind of sentiment analysis library that exists out there in the world.

Oh hey, look at that!

```rust
use vader_sentiment;

// ...

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

// ...

let s = get_data_from_file(path);
println!("{:?}", s);
println!("{:#?}", analyzer.polarity_scores(&s));
```
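Of the numbers polarity_scores prints, compound is probably the handiest single value. In the reference (Python) VADER implementation it's the sum of the per-word valence scores squashed into [-1, 1] with x / sqrt(x² + α), where α = 15; I'm assuming the vader_sentiment crate, as a port, does the same. A standalone sketch of just that normalization step:

```rust
/// VADER-style normalization of a summed valence score into [-1, 1].
/// alpha = 15 is the constant the reference implementation uses.
fn normalize(score: f64, alpha: f64) -> f64 {
    let norm = score / (score * score + alpha).sqrt();
    // Mathematically already inside (-1, 1); the clamp just mirrors the
    // reference implementation's guard.
    norm.clamp(-1.0, 1.0)
}

fn main() {
    for raw in [-4.0, -1.0, 0.0, 1.0, 4.0, 20.0] {
        println!("{raw:>6} -> {:.3}", normalize(raw, 15.0));
    }
}
```

Because the curve flattens out as the raw sum grows, a couple of strongly worded phrases already push compound close to the extremes.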

And, is it fast enough to be useful for our PNG purposes?

Certainly feels that way. The tricky part, of course, will be combining this with special keywords or other things that we'll want to trigger stuff in OBS. Ah. OBS. That might be troublesome. I wrote a Java integration with OBS a while ago, and it took quite a bit of trial and error and time in the debugger to figure things out. I suppose we'll have to see what, if any, Rust bindings are available to us for communicating with OBS. If there aren't any, there are ways to make two programs talk to each other that wouldn't be too hard. But one of the reasons I'm writing this in Rust is that I don't want the overhead of the JVM on a computer I'm also streaming from.
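As a first cut at turning that score into something the avatar can use, VADER's README suggests reading a compound score >= 0.05 as positive, <= -0.05 as negative, and anything in between as neutral. A minimal sketch of that bucketing, with a made-up Mood enum standing in for avatar states that don't exist yet:

```rust
/// Coarse buckets; placeholders until the real avatar states are decided.
#[derive(Debug, PartialEq)]
enum Mood {
    Positive,
    Neutral,
    Negative,
}

/// Buckets a VADER compound score using the +/-0.05 cut-offs from VADER's README.
fn mood_from_compound(compound: f64) -> Mood {
    if compound >= 0.05 {
        Mood::Positive
    } else if compound <= -0.05 {
        Mood::Negative
    } else {
        Mood::Neutral
    }
}

fn main() {
    for score in [0.72, 0.01, -0.4] {
        println!("{score} -> {:?}", mood_from_compound(score));
    }
}
```

Keyword triggers would sit in front of this: check for a special word first, and only fall back to the score bucket when none matched.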

Coming up with the rules on what values of sentiment correspond to what emotions to show would require us to declare what emotions are going to be possible. Hm. And for that, we need to figure out what states we'll be having for the avatar. The way I see it, we can figure that out now... or we could figure out how to get OBS to show an image from our rust program.

One of these involves a lot of fiddling, one of these is a point A to B problem with a clear answer and stopping point.

Yeah, let's figure out how to show an image in OBS with Rust.

Interfacing with OBS

Like I said before, we've figured out at least some of this stuff before. And seeing the first hit for obs rust control contain the following in the source code filled me with optimism:

```rust
/// Sets the enable state of a scene item.
#[doc(alias = "SetSceneItemEnabled")]
pub async fn set_enabled(&self, enabled: SetEnabled<'a>) -> Result<()> {
    self.client.send_message(Request::SetEnabled(enabled)).await
}
```

And so, since it was 3:16 in the morning, I went to bed. Being awake for 22 hours is probably not the ideal time to be figuring out how to use an async library in Rust for the first time ever.

Getting back to it the next day took a while, but I finally picked it up again in the evening. In my Java code, displaying an image was done by fetching an existing scene item and then toggling whether or not the item was enabled:

```java
private void toggleSceneItemOnForDuration(OBSRemoteController obsRemoteController) throws InterruptedException {
    GetSceneListResponse sceneListResponse = obsRemoteController.getSceneList(1000);
    String sceneName = sceneListResponse.getCurrentProgramSceneName();
    GetSceneItemIdResponse sceneItemIdResponse = obsRemoteController.getSceneItemId(sceneName, getSceneItemName(), 0, 1000);

    // If there's no item by that name, then just stop
    if (!sceneItemIdResponse.getMessageData().getRequestStatus().getResult()) {
        getLogger().warn("No item found in scene %s by name %s".formatted(sceneName, getSceneItemName()));
        return;
    }

    Number itemId = sceneItemIdResponse.getSceneItemId();
    GetSceneItemEnabledResponse s = obsRemoteController.getSceneItemEnabled(sceneName, itemId, 1000);
    Boolean isEnabled = s.getSceneItemEnabled();
    if (!isEnabled) {
        obsRemoteController.setSceneItemEnabled(sceneName, itemId, true, 1000);
    } else {
        obsRemoteController.setSceneItemEnabled(sceneName, itemId, false, 1000);
        obsRemoteController.setSceneItemEnabled(sceneName, itemId, true, 1000);
    }

    // Is there a better way to do this. Sure. Do we need to?
    // No. No this is just running on my local machine. It's fine.
    Thread.sleep(getMilliBeforeDisablingItem());
    obsRemoteController.setSceneItemEnabled(sceneName, itemId, false, 1000);
}
```

So, I suppose the first thing I should do is look into how to do something similar with the Rust library. Luckily for me, the example for the obws library has some sample code I can use:

```rust
use anyhow::Result;
use obws::Client;

#[tokio::main]
async fn main() -> Result<()> {
    // Connect to the OBS instance through obs-websocket.
    let client = Client::connect("localhost", 4455, Some("password")).await?;

    // Get and print out version information of OBS and obs-websocket.
    let version = client.general().version().await?;
    println!("{:#?}", version);

    // Get a list of available scenes and print them out.
    let scene_list = client.scenes().list().await?;
    println!("{:#?}", scene_list);

    Ok(())
}
```

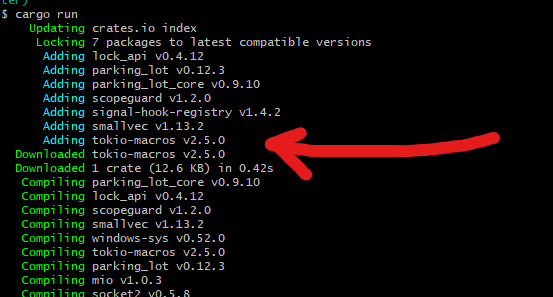

So we're using three libraries I don't have yet. Cargo makes it pretty easy to add those in:

```
$ cargo add tokio
$ cargo add anyhow
$ cargo add obws
```

Then I can try tossing in the code nearly verbatim and see what I get.

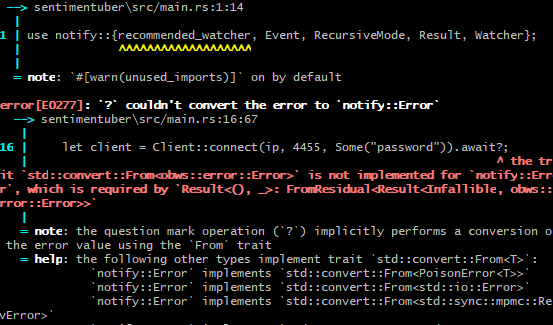

```
error[E0752]: `main` function is not allowed to be `async`
 --> sentimentuber\src/main.rs:9:1
  |
9 | async fn main() -> Result<()> {
  | ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^ `main` function is not allowed to be `async`
```

Uh... Alright, not sure why that's happening. The example said to add in the macro, so how come it doesn't work? Well, searching for tokio::main brought me to this tutorial on using tokio and Redis, which helpfully included an additional line about which features to import from tokio:

```toml
tokio = { version = "1", features = ["full"] }
```

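Well, let's add that in and tell our tokio usage to be fully featured! It seems like just adding the crate doesn't let us use their macro: tokio keeps #[tokio::main] behind its optional macros feature (and it needs a runtime feature such as rt-multi-thread too), and full switches all of those on. So if we ask for the full set of features and then run cargo run?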

That seems promising! The scrolling of cargo adding things seems good... the error about the main function seems to have disappeared too, and it looks like... oh.

It's mad about the Result type being used for the main function.

Which is the one from the notify library:

```rust
async fn main() -> Result<()>
```

But the example code wants us to use anyhow. Importing both would be ambiguous, so let's see if using anyhow's Result instead still behaves...

```rust
async fn main() -> anyhow::Result<()>
```

And retrying the run command and

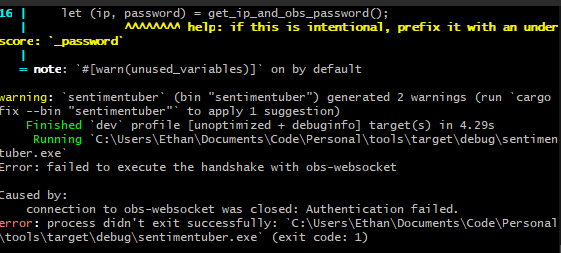

We compiled! But... we didn't connect to OBS. Because... oh, because I'm dumb. The warning is right there: "warning: unused variable: `password`". I just forgot to take out the default "password" from the sample code. Fixing that up real quick so the code looks like this:

```rust
use notify::{recommended_watcher, Event, RecursiveMode, Result, Watcher};
use std::sync::mpsc;
use std::path::Path;
use std::fs;
use vader_sentiment;
use obws::Client;
use tokio;
use anyhow;

#[tokio::main]
async fn main() -> anyhow::Result<()> {
    // TODO get this from the arguments
    let path = Path::new("./data/text");
    let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

    let (ip, password) = get_ip_and_obs_password();
    let client = Client::connect(ip, 4455, Some(password)).await?;

    let version = client.general().version().await?;
    println!("{:#?}", version);

    let scene_list = client.scenes().list().await?;
    println!("{:#?}", scene_list);

    let (tx, rx) = mpsc::channel::<Result<Event>>();
    let mut watcher = notify::recommended_watcher(tx)?;
    watcher.watch(path, RecursiveMode::Recursive)?;

    // Block forever, printing out events as they come in
    for res in rx {
        match res {
            Ok(event) => {
                println!("event: {:?}", event);
                let s = get_data_from_file(path);
                println!("{:?}", s);
                println!("{:#?}", analyzer.polarity_scores(&s));
            },
            Err(e) => println!("watch error: {:?}", e),
        }
    }

    Ok(())
}

fn get_data_from_file(path: &Path) -> String {
    let s = fs::read_to_string(path).expect("this is a bad idea");
    return s
}

fn get_ip_and_obs_password() -> (String, String) {
    // TODO take this from cli like the path or something I suppose.
    return (String::from("mylocalip"), String::from("mypasswordIset"));
}
```
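Why does changing main's return type to anyhow::Result make the compiler happy? notify's Result<T> is an alias for Result<T, notify::Error>, and ? can only convert an error into the function's error type when a From impl exists, which isn't the case for obws's errors. A catch-all error type that accepts any std::error::Error sidesteps that. Since anyhow isn't available in a std-only sketch, Box<dyn Error> plays its role below, and the two error types (plus start_watcher and connect_to_obs) are hypothetical stand-ins for notify's and obws's.

```rust
use std::error::Error;
use std::fmt;

// Stand-ins for notify::Error and obws::Error: two unrelated error types.
#[derive(Debug)]
struct WatchError;

#[derive(Debug)]
struct ObsError;

impl fmt::Display for WatchError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "watch failed")
    }
}

impl fmt::Display for ObsError {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "obs failed")
    }
}

impl Error for WatchError {}
impl Error for ObsError {}

// Hypothetical fallible steps, each returning its own error type.
fn start_watcher(ok: bool) -> Result<(), WatchError> {
    if ok { Ok(()) } else { Err(WatchError) }
}

fn connect_to_obs(ok: bool) -> Result<(), ObsError> {
    if ok { Ok(()) } else { Err(ObsError) }
}

// If this returned Result<(), WatchError> (the shape of notify::Result<()>),
// the `?` on connect_to_obs would not compile: there's no From<ObsError> for
// WatchError. Box<dyn Error> has a blanket From for any error type, much like
// anyhow::Error, so both `?`s work.
fn run(watch_ok: bool, obs_ok: bool) -> Result<(), Box<dyn Error>> {
    connect_to_obs(obs_ok)?;
    start_watcher(watch_ok)?;
    Ok(())
}

fn main() {
    match run(true, false) {
        Ok(()) => println!("all good"),
        Err(e) => println!("error: {e}"),
    }
}
```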

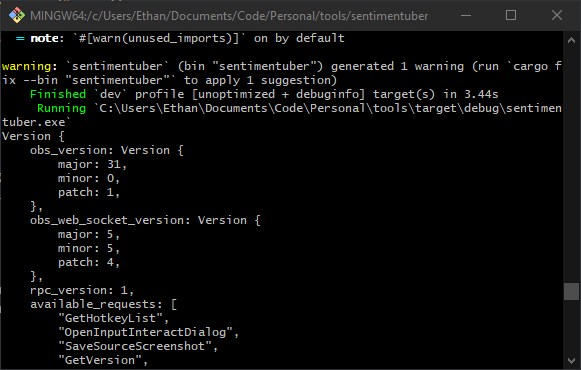

And then we can try again.

Woah! That was easy! This feels like it's going a lot faster than the first time I tried to figure all this stuff out, probably because I did the hard part of getting the password and settings for OBS configured back when I did the Java project. Regardless of why, I'm hungry to try out our next step. Let's make an image show up or change or something based on the text we're transcribing when I talk into the microphone.

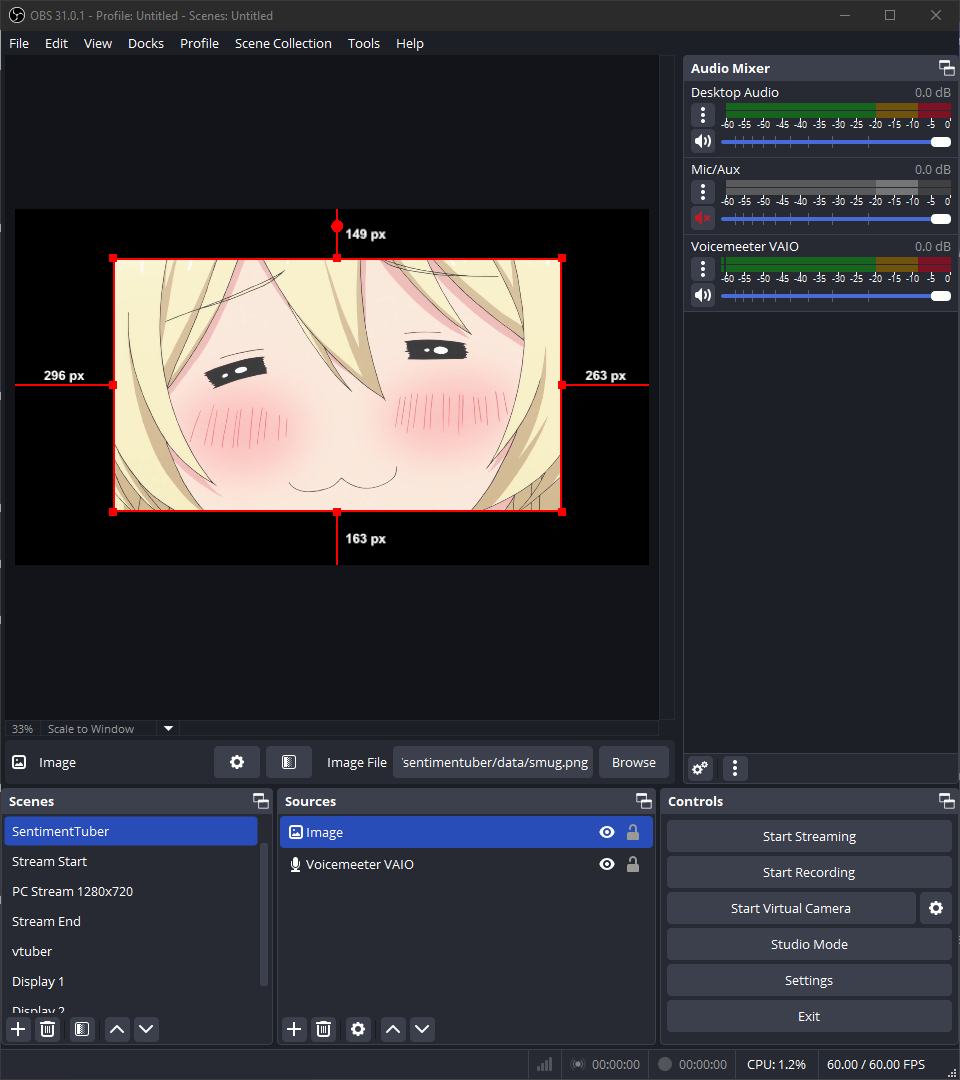

Oh wait. I suppose I don't have the whole PNGTuber thing yet at all, do I? Well, just like this blog post, I've got way too many pictures of anime girls in various states of emotion. So let's use some of those! I'll use some pictures of my favorite character from the lesser-known booze show Takunomi[2].

So, setting up an OBS scene with my audio input and a single image to change will make for a good test environment:

And in code we can set up a mapping between emotional states and the image I want to show during that. I'll probably want to use multiple possible images in the future, but for now while we're prototyping let's keep things simple.

```rust
use std::collections::HashMap;

// ...

Ok(event) => {
    let s = get_data_from_file(path);
    // we'll do something with the score later.
    let emotional_state = get_emotional_state(&s, &analyzer);
    let image_to_show = state_to_image_file.get(&emotional_state).unwrap();
    println!("{:?}", image_to_show);
},

// ...

#[derive(Debug)]
#[derive(Hash, Eq, PartialEq)]
enum EmotionalState {
    Neutral,
    Mad,
    MakingAPromise,
    Sad,
    Smug,
    ThumbsUp
}

fn get_emotion_to_image_map() -> HashMap<EmotionalState, &'static str> {
    HashMap::from([
        (EmotionalState::Neutral, "./data/neutral.png"),
        (EmotionalState::Mad, "./data/mad.png"),
        (EmotionalState::MakingAPromise, "./data/promise.png"),
        (EmotionalState::Sad, "./data/sad.png"),
        (EmotionalState::Smug, "./data/smug.png"),
        (EmotionalState::ThumbsUp, "./data/thumbsup.png")
    ])
}

fn get_emotional_state(sentence: &String, analyzer: &vader_sentiment::SentimentIntensityAnalyzer) -> EmotionalState {
    // ...
```

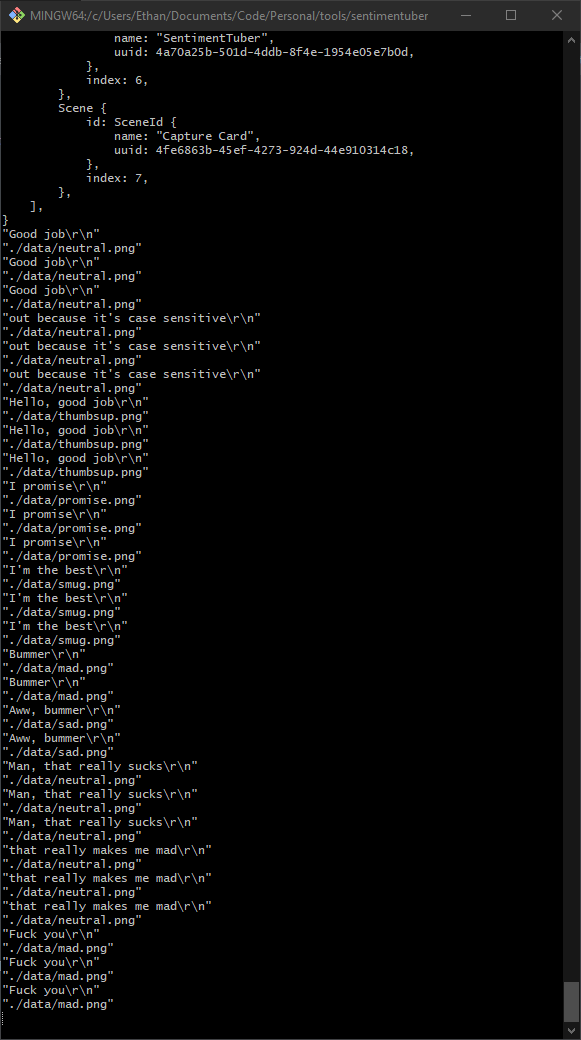

Now, besides the fact that the code I'm eliding here isn't very good or robust yet, it does do the job, and when I say certain keywords or swear at the transcription, it gets the states right:

As you can see, we've got multiple events popping up at once for whatever reason, and my get_emotional_state method isn't handling case for the keywords yet. That's easy enough to fix but not our focus here. Let's go from just getting text to changing the image source in OBS.
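Both of those loose ends can be sketched without touching OBS at all. Below, SentenceDeduper (a made-up name) drops empty reads, like the moment right after OBS truncates the file, and exact repeats of the last sentence handled, while the hypothetical has_keyword helper does a case-insensitive whole-word match. This assumes the duplicate events carry identical text; matching on event.kind in the notify loop and only reacting to EventKind::Modify(_) might be another option worth trying.

```rust
/// Hypothetical filter for the duplicate file events: skips empty reads
/// (e.g. right after OBS truncates the file) and repeats of the last sentence.
#[derive(Default)]
struct SentenceDeduper {
    last: Option<String>,
}

impl SentenceDeduper {
    /// Returns the trimmed sentence if it should be handled, or None to skip it.
    fn accept(&mut self, text: &str) -> Option<String> {
        let trimmed = text.trim();
        if trimmed.is_empty() || self.last.as_deref() == Some(trimmed) {
            return None;
        }
        self.last = Some(trimmed.to_string());
        Some(trimmed.to_string())
    }
}

/// Case-insensitive whole-word keyword check, so "Promise" and "PROMISE"
/// both match "promise" but "compromise" does not.
fn has_keyword(sentence: &str, keyword: &str) -> bool {
    let keyword = keyword.to_lowercase();
    sentence
        .split(|c: char| !c.is_alphanumeric())
        .any(|word| word.to_lowercase() == keyword)
}

fn main() {
    let mut deduper = SentenceDeduper::default();
    // Three events for the same sentence, plus one for the truncated file.
    for read in ["I PROMISE it works.\n", "I PROMISE it works.\n", "", "I PROMISE it works.\n"] {
        match deduper.accept(read) {
            Some(sentence) => println!("handle {sentence:?}, promise = {}", has_keyword(&sentence, "promise")),
            None => println!("skip"),
        }
    }
}
```

The trade-off is that genuinely saying the same sentence twice in a row gets skipped the second time, which seems acceptable for an avatar.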

So, first off, let's get a handle on that image scene item. Just like the Java code, let's iterate over the list of scenes, find the one we care about, then find the image among its items. This will help us avoid accidentally fetching a same-named scene item from some other scene.

```rust
use obws::requests::scene_items::Source;
use obws::responses::sources::SourceId;

// ...

async fn get_image_scene_item(client: &Client) -> anyhow::Result<SourceId> {
    let scenes_struct = client.scenes().list().await?;
    let test_scene = scenes_struct.scenes.iter().find(|scene| {
        scene.id.name.contains("SentimentTuber")
    }).expect("Could not find OBS scene by name");

    let items_in_scene = client.scene_items().list(test_scene.id.clone().into()).await?;
    let image_source = items_in_scene.iter().find(|item| {
        // TODO: use a better name than "Image" obviously.
        item.source_name.contains("Image")
    }).expect("No image source found in OBS scene for the avatar");

    let source_id = client.scene_items().source(
        Source {
            scene: test_scene.id.clone().into(),
            item_id: image_source.id.clone().into()
        }
    ).await?;

    Ok(source_id)
}
```

At first, I was returning the entire SceneItem rather than fetching the SourceId, but if we want to change the settings of the input, then we'll need to send it a SetSettings struct, which has an InputId enum in it. While we can't directly translate one into the other via into(), we can at least use the name field to do that and not repeat the above TODO note in two places.
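One thing to watch in get_image_scene_item: the .contains() checks are substring matches, so a source called "Background Image" would also match "Image". Here's a sketch of the same scene-then-item lookup using exact names and returning an Option instead of calling expect. Scene, SceneItem, and find_item_id are made-up stand-ins, not the real obws types.

```rust
/// Hypothetical stand-ins for the scene data obws hands back (not obws types).
struct SceneItem {
    id: i64,
    source_name: String,
}

struct Scene {
    name: String,
    items: Vec<SceneItem>,
}

/// Looks up an item by exact name, but only within the named scene, so a
/// same-named source in another scene is never picked up. Returns None
/// instead of panicking, leaving the caller to decide what to do.
fn find_item_id(scenes: &[Scene], scene_name: &str, item_name: &str) -> Option<i64> {
    scenes
        .iter()
        .find(|scene| scene.name == scene_name)?
        .items
        .iter()
        .find(|item| item.source_name == item_name)
        .map(|item| item.id)
}

fn item(id: i64, name: &str) -> SceneItem {
    SceneItem { id, source_name: name.to_string() }
}

fn main() {
    let scenes = vec![
        Scene { name: "Gameplay".into(), items: vec![item(1, "Image")] },
        Scene {
            name: "SentimentTuber".into(),
            // contains("Image") would have matched this first item too.
            items: vec![item(2, "Background Image"), item(3, "Image")],
        },
    ];
    println!("{:?}", find_item_id(&scenes, "SentimentTuber", "Image"));
}
```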

```rust
use obws::requests::inputs::SetSettings;
use serde_json::json;

// ...

async fn swap_obs_image_to(source_id: &SourceId, new_file_path: &str, client: &Client) -> anyhow::Result<()> {
    let path = Path::new(new_file_path);
    let absolute = Path::canonicalize(path)?;
    let setting = json!({"file": absolute});
    client.inputs().set_settings(SetSettings {
        input: (&*source_id.name).into(),
        settings: &setting,
        overlay: Some(true)
    }).await?;
    Ok(())
}
```
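For the curious, obs-websocket 5.x is JSON over a WebSocket: after the Hello/Identify handshake, each request is an object with "op": 6 and a "d" payload holding the requestType, a requestId, and requestData. For SetInputSettings, that payload carries inputName, the inputSettings object, and overlay (true merges the new settings over the existing ones instead of resetting the rest to defaults). This std-only sketch just builds that message as a string to show where the settings JSON ends up; the hand-rolled escaping and the function names are for illustration only, and in the real program obws and serde_json take care of all of it.

```rust
/// Minimal JSON string escaping: quotes, backslashes, and control characters.
fn json_string(s: &str) -> String {
    let mut out = String::from("\"");
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out.push('"');
    out
}

/// Builds an obs-websocket 5.x Request message (op code 6) for SetInputSettings.
fn set_input_settings_request(request_id: &str, input_name: &str, file: &str, overlay: bool) -> String {
    format!(
        r#"{{"op":6,"d":{{"requestType":"SetInputSettings","requestId":{},"requestData":{{"inputName":{},"inputSettings":{{"file":{}}},"overlay":{}}}}}}}"#,
        json_string(request_id),
        json_string(input_name),
        json_string(file),
        overlay
    )
}

fn main() {
    // Windows paths need their backslashes escaped once they're inside JSON.
    let msg = set_input_settings_request("1", "Image", r"C:\avatar\mad.png", true);
    println!("{msg}");
}
```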

The type for the generic T in the SetSettings struct is, in this case, a JSON value. To be honest, I'm very thankful that I did all this in Java elsewhere first; otherwise I'm not sure how I would have figured out that the input settings are set via a JSON object. The Rust docs, while very handy to have, don't really do a good job of pointing this out to a beginner like me. Like, yes, there's a where T: Serialize in the signature of the set_settings method...

But I might remind you that I started learning Rust in December for my first Advent of Code ever. Since then, I've just been picking it up and dabbling as I continue to read the Rust Book in my spare time[3]. So, looking at the wall of text in the crate documentation (which I'm very grateful to have) generally leaves me looking like this after a while:

Anywho. If we hook everything up, then our event handling looks something like this now:

```rust
for res in rx {
    match res {
        Ok(event) => {
            let s = get_data_from_file(path);
            // we'll do something with the score later.
            let emotional_state = get_emotional_state(&s, &analyzer);
            let image_to_show = state_to_image_file.get(&emotional_state).unwrap();
            println!("Should show {:?}", image_to_show);
            swap_obs_image_to(&image_source_id, &image_to_show, &client).await?;
        },
        Err(e) => println!("watch error: {:?}", e),
    }
}
```

and running the application to test things is actually really promising! The delay we noticed before is still there, so it seems to me like I'll have to switch from full-sentence parsing to letting OBS push out captions word by word, then aggregate them or something in the Rust program, if I want a mouth flap to move as soon as I start talking. But, at the very least, my main idea of getting a PNGTuber to respond to what I'm saying has a working proof of concept!
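A minimal sketch of that aggregation, assuming each file event would deliver one new word or fragment (how LocalVocal actually chunks partial output is unverified here). SentenceBuffer is a made-up name: it keeps the unfinished sentence around so something like a mouth flap could react early, and hands back the whole sentence for sentiment analysis once a fragment ends in terminal punctuation.

```rust
/// Hypothetical accumulator for word-by-word captions: collects fragments
/// and hands back the whole sentence once a fragment ends in . ! or ?
#[derive(Default)]
struct SentenceBuffer {
    words: Vec<String>,
}

impl SentenceBuffer {
    /// Adds one fragment; returns the finished sentence if this completed one.
    fn push(&mut self, fragment: &str) -> Option<String> {
        let fragment = fragment.trim();
        if fragment.is_empty() {
            return None;
        }
        self.words.push(fragment.to_string());
        if fragment.ends_with(|c: char| matches!(c, '.' | '!' | '?')) {
            let sentence = self.words.join(" ");
            self.words.clear();
            Some(sentence)
        } else {
            None
        }
    }

    /// The unfinished sentence so far: enough to start a mouth flap early.
    fn partial(&self) -> String {
        self.words.join(" ")
    }
}

fn main() {
    let mut buffer = SentenceBuffer::default();
    for fragment in ["I", "promise", "I'll", "finish", "this", "blog", "post!"] {
        if let Some(sentence) = buffer.push(fragment) {
            println!("sentence: {sentence}");
        } else {
            println!("still talking: {}", buffer.partial());
        }
    }
}
```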

For now, I think that's enough for this blog post. I'm probably going to clean up the code, simplify things where I can, and also draw a "real" avatar to use for this that isn't just a bunch of screenshots I had on hand. If you liked this, feel free to let me know (via email I guess, or via Twitch?), and if you're looking to implement something similar, I hope this helped you!