Identifying points of improvement

There's a number of things about the code in the last post that can be improved. The hard part is deciding what to improve and work on first and where we want to take our MVP to. There's only so much time in the day, and time in the weekend, so if I want to continue our exciting little project without being tied down by work fatigue, we've got to spend our time wisely.

Now, the most obvious thing to anyone would be the fact that I'm not using any “real” avatar images and just swapping around between screenshots of a character I like. But that's also the least code related task there could be, so we can rule that out for the time being. Polishing can come later, platforming comes first.

So, let's list off a few things that are bad about our MVP code and use that to narrow our focus into bite sized chunks that we can cover in this blog post!

- We hardcoded the password, port, and host settings for OBS into the program

- The notify library is actually sending us event updates 4 times in a row for every change for some reason. This leads to us asking OBS to run unnecessary commands and just wastes CPU cycles.

- We're awaiting all over the place and my understanding of Rust's async primitives isn't very solid

- We rely on the localvocal plugin to truncate the file for us and lose contextual information

- Our emotional states are hardcoded, so each new image or feeling will require a rebuild of the program

- Everything is mixed together in one script, there's basically no testing keeping me from breaking things by accident, and it's all just sort of a disorganized mess that makes me unhappy.

The good news is that none of this is particularly hard to do. The most interesting thing will likely be the contextual information and emotional state handling, but before we can get to that we need to address the debt we built up for ourselves last week. In a normal workday, none of the code on the blog last week would have seen the light of day.

But it served one important purpose. It got the motivational ball rolling! Knowing that, in a single weekend, we could get something up and working is the sort of ammunition any programmer needs to keep up with a personal project. It's easy to have an idea of something you want to do, it's a lot harder to actually start working on it. Similarly, it's easy to say man, I should really refactor that code... It's another thing to actually do it 1.

Without further blabbing, let's talk about where we're going to steer this ship:

High level design and patterns

Our north star of course is that we want to make avatar images show up and react to the ongoing context that is our speech. Right now, our code looks like this:

use notify::{recommended_watcher, Event, RecursiveMode, Result, Watcher};

use std::sync::mpsc;

use std::path::Path;

use std::fs;

use vader_sentiment;

use obws::Client;

use tokio;

use anyhow;

#[tokio::main]

async fn main() -> anyhow::Result<()> {

let path = Path::new("./data/text");

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

let (ip, password) = get_ip_and_obs_password();

let client = Client::connect(ip, 4455, Some(password)).await?;

let version = client.general().version().await?;

println!("{:#?}", version);

let scene_list = client.scenes().list().await?;

println!("{:#?}", scene_list);

let (tx, rx) = mpsc::channel::<Result<Event>>();

let mut watcher = notify::recommended_watcher(tx)?;

watcher.watch(path, RecursiveMode::Recursive)?;

// Block forever, printing out events as they come in

for res in rx {

match res {

Ok(event) => {

let s = get_data_from_file(path);

let emotional_state = get_emotional_state(&s, &analyzer);

let image_to_show = state_to_image_file.get(&emotional_state).unwrap();

swap_obs_image_to(&image_source_id, &image_to_show, &client).await?;

},

Err(e) => println!("watch error: {:?}", e),

}

}

Ok(())

}

fn get_data_from_file(path: &Path) -> String {

let s = fs::read_to_string(path).expect("this is a bad idea");

return s

}

fn get_ip_and_obs_password() -> (String, String) {

return (String::from("mylocalip"), String::from("mypasswordIset"));

}

Every bite is spaghetti. The main method of our program handles configuration setup, client connections, event loop polling, state determinations, OBS actions, and file I/O all served up in one lukewarm microwaved tray.

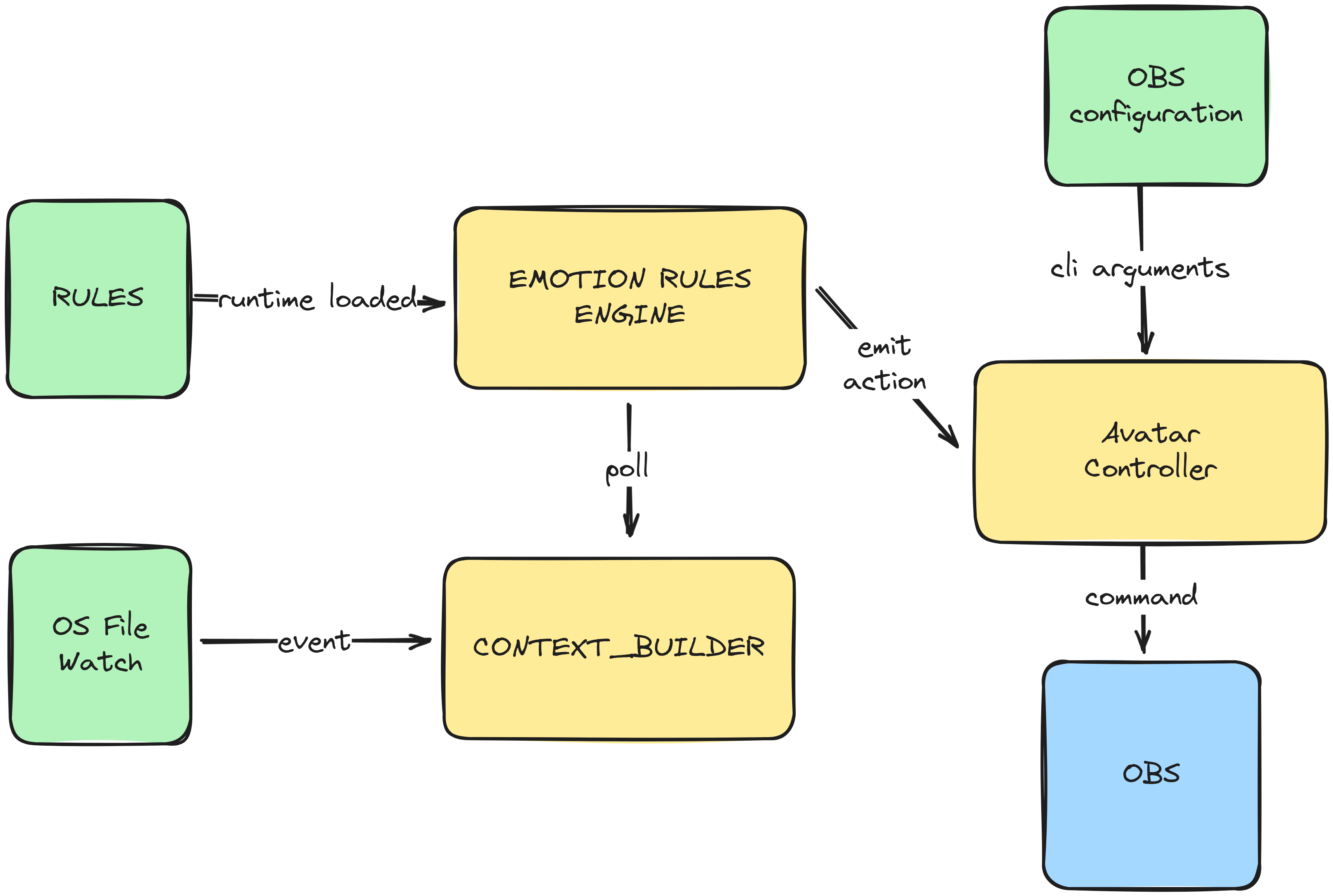

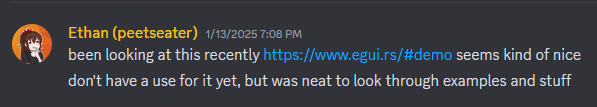

What I'd like to have by the end of this is something like this:

Broadly speaking, the simple refactorings to avoid hardcoding things will push configuration and events from the program out to the input edges (green). Formalizing some structure around what sorts of actions we can take to command OBS will result in the visible output of the program existing as its own separate module (blue). And last, the true meat of our application which we'll want to be able to battle test via unit tests and iterate on without breaking the individual parts will be handled by the engine code aka the business logic (yellow).

Just because we drew a pretty picture doesn't mean we should throw our entire codebase out, let's instead take one step at a time to build up each part bit by bit by refactoring the code.

Let's not hardcode passwords

As a reminder, our current code that provides us the ability to talk to OBS is this:

let (ip, password) = get_ip_and_obs_password();

let client = Client::connect(ip, 4455, Some(password)).await?;

...

fn get_ip_and_obs_password() -> (String, String) {

// TODO take this from cli like the path or something I suppose.

return (String::from("mylocalip"), String::from("mypasswordIset"));

}

We've already got a TODO in here about taking in command line arguments. And if we wanted to, we could read the section in the rust book about just that and use std::env to grab the args and do a bit of parsing and validation and whatnot.

But over the course of my short tenure with rust, I became aware of clap, which is a pretty cool library that does a whole bunch of useful things for us if we want to make our program behave like typical command line tools. The neat thing about clap is that there's not really a whole lot of boilerplate to write. We define a struct that serves as the options to our program, annotate it a little bit, and then call OurStruct::parse() to call the parser that clap generates on our behalf.
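For comparison, here is a rough sketch of what the hand-rolled std::env route mentioned above might look like. The `ManualConfig` struct and `parse_args` function are hypothetical names made up for this illustration, and the flag names simply mirror the options this program needs. It is not what the post ends up using.

```rust
use std::path::PathBuf;

/// Hypothetical hand-rolled equivalent of the options this program needs.
#[derive(Debug, PartialEq)]
pub struct ManualConfig {
    pub file: PathBuf,
    pub ip: String,
    pub password: String,
    pub port: u16,
}

/// Parses `--file`, `--ip`, `--password` and an optional `--port` from an
/// iterator of arguments (the program name must already be skipped).
pub fn parse_args<I>(args: I) -> Result<ManualConfig, String>
where
    I: IntoIterator<Item = String>,
{
    let (mut file, mut ip, mut password) = (None, None, None);
    let mut port: u16 = 4455; // same default the post uses for OBS

    let mut iter = args.into_iter();
    while let Some(flag) = iter.next() {
        // Every flag accepted here takes exactly one value.
        let value = iter
            .next()
            .ok_or_else(|| format!("missing value for {flag}"))?;
        match flag.as_str() {
            "--file" => file = Some(PathBuf::from(value)),
            "--ip" => ip = Some(value),
            "--password" => password = Some(value),
            "--port" => {
                port = value
                    .parse()
                    .map_err(|e| format!("invalid --port {value:?}: {e}"))?
            }
            other => return Err(format!("unknown argument {other}")),
        }
    }

    Ok(ManualConfig {
        file: file.ok_or("missing required argument --file")?,
        ip: ip.ok_or("missing required argument --ip")?,
        password: password.ok_or("missing required argument --password")?,
        port,
    })
}
```

Calling it would look like `parse_args(std::env::args().skip(1))`. Even this small version has to hand-write defaults, error strings, and required-argument checks, and it still has no help text, which is the boilerplate a derive-based parser takes off our hands.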

Clap's approach is actually in line with section 12.3 of the rust book, which covers modularizing your CLI arguments and parsing code. So it feels like the library isn't trying to guide us away from Rust's recommendations, but giving us an easier way to follow them with less effort on our part. Awesome. So, for our program we currently need to know:

- The file the localvocal program is updating

- The OBS host ip, password, and port

If we represent this with clap, we get this:

use std::path::PathBuf;

use clap::Parser;

#[derive(Debug, Parser)]

#[command(version, about, long_about = None)]

pub struct Config {

/// Path to localvocal (or similar) transcript file

#[arg(long = "file", value_name = "FILE")]

pub input_text_file_path: PathBuf,

/// IP address of OBS websocket

#[arg(long = "ip")]

pub obs_ip: String,

/// Password of OBS websocket

#[arg(short = 'p', long = "password")]

pub obs_password: String,

/// Port for OBS websocket

#[arg(long = "port", default_value_t = 4455)]

pub obs_port: u16,

}

impl Config {

pub fn parse_env() -> Config {

Config::parse()

}

}

And if we call this from our main method and load the various hardcoded data then that's a straightforward change as well:

#[tokio::main]

async fn main() -> anyhow::Result<()> {

let config = Config::parse_env();

let path = config.input_text_file_path.as_path();

let ip = config.obs_ip;

let password = config.obs_password;

let port = config.obs_port;

The rest of the program can continue on as usual, and now we've got the added benefit that if I try to run the program without passing in anything I get a pleasant error and help text instead of a bunch of angry traces or custom strings I'd have to write myself:

$ ../target/debug/sentimentuber.exe

error: the following required arguments were not provided:

--file <FILE>

--ip <OBS_IP>

--password <OBS_PASSWORD>

Usage: sentimentuber.exe --file <FILE> --ip <OBS_IP> --password <OBS_PASSWORD>

For more information, try '--help'.

Nice. No more hard coded passwords, and if I want to test things by writing to a different file than the one the localvocal plugin uses, I can do that really simply. We've set a default for the port with the annotations so I don't have to pass a port in, and the program is able to help me with the arguments if I forget how to use it later on with the typical -h flag.

$ ../target/debug/sentimentuber.exe -h

Usage: sentimentuber.exe [OPTIONS] --file <FILE> --ip <OBS_IP> --password <OBS_PASSWORD>

Options:

--file <FILE> Path to localvocal (or similar) transcript file

--ip <OBS_IP> IP address of OBS websocket

-p, --password <OBS_PASSWORD> Password of OBS websocket

--port <OBS_PORT> Port for OBS websocket [default: 4455]

-h, --help Print help

-V, --version Print version

And all I did was define a struct! Let's move on to the next thing we wanted to tackle.

Removing duplicate events and await inside of the event loop

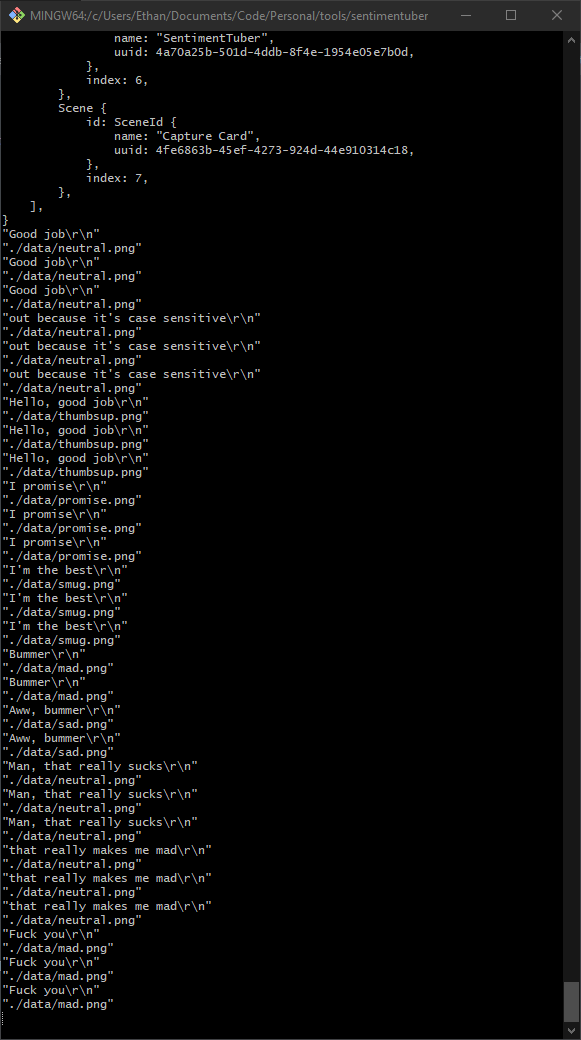

You might have noticed in the original blog post that when we were printing out the actions the program was taking it did so multiple times. If not, look at this image again:

I wasn't saying Good job 3 times in a row, it seems like we just get a few different events coming to us from the notify library and since we had a generic catch all, we did some extra work. Luckily for us, we do have ways of figuring out what's going on with the file under our watch. If we look at the enum we're actually matching against we can see a few more of our options:

pub enum EventKind {

Any,

Access(AccessKind),

Create(CreateKind),

Modify(ModifyKind),

Remove(RemoveKind),

Other,

}

Of these possibilities, the Modify type is what we would expect to see coming in whenever OBS rewrites the file as we speak. The ModifyKind should let us go one step further in granularity:

pub enum ModifyKind {

Any,

Data(DataChange),

Metadata(MetadataKind),

Name(RenameMode),

Other,

}

So. Reading this, you'd expect us to see events of DataChange come flying in!

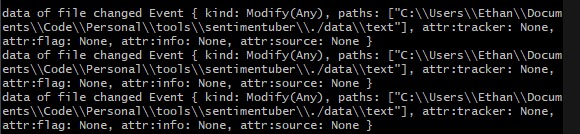

Welp. There goes that idea. For whatever reason, the kind always seems to remain Modify(Any). This is probably platform specific: since I'm on Windows, the watcher is a ReadDirectoryChangesWatcher, and maybe that simply doesn't get enough information to tell me that Data was changed? Just that something was.

To be honest, my guess would be that the reason I get 2 or 3 events in a row is from the file's contents being updated, and the file's access date related timestamps being changed. When I speak into the microphone and OBS changes the file I get 2 events, when I modify it directly via my text editor and press save, I get 3. Either way, I don't want that. But it's also somewhat easy to fix! From the notify documentation:

If you want debounced events (or don’t need them in-order), see notify-debouncer-mini or notify-debouncer-full.

So looking at the two libraries, I decided to go with the mini option because the full option stated that one of its default options is

Doesn't emit Modify events after a Create event

Which sounded like an extra edge case for me where we'd miss the first event if we're only watching for Modify and not Create events. But the mini option didn't point this out in the documentation, so I'm assuming that it doesn't do this. It gives us a pretty simple and straightforward interface:

let mut debouncer = new_debouncer(Duration::from_millis(50), |res: DebounceEventResult| {

match res {

Ok(events) => {

events.iter().for_each(|event| {

...

})

},

Err(e) => eprintln!("Error {:?}", e)

}

}).unwrap();

We tell it a duration to debounce events within, and then pass it an event handler (here, a closure) that can process the debounce event result as per usual. The new_debouncer method sets us up with a recommended watcher under the hood, which is what I was using anyway, but if I did want to tweak the configuration then I could use new_debouncer_opt to do that. The documentation that suggests this usage does note something important though:

// note that dropping the debouncer (as will happen here) also ends the debouncer

// thus this demo would need an endless loop to keep running

There's also a link to a full example using the opt version here and looking at that, it shows us how it sets up that endless loop. There's also an example of the plain method as well which suggests doing this to have the infinite loop:

// setup debouncer

let (tx, rx) = std::sync::mpsc::channel();

// No specific tickrate, max debounce time 1 seconds

let mut debouncer = new_debouncer(Duration::from_secs(1), tx).unwrap();

debouncer

.watcher()

.watch(Path::new("."), RecursiveMode::Recursive)

.unwrap();

// print all events, non returning

for result in rx {

match result {

Ok(events) => events

.iter()

.for_each(|event| log::info!("Event {event:?}")),

Err(error) => log::info!("Error {error:?}"),

}

}

It's interesting to me that we can pass tx, the sending half of the channel, in as the event handler. Looking at the implementations on foreign types listed on the event handler trait shows that it is implemented for the Sender struct. Or maybe that's not surprising, but it feels like there's a bit of magic going on behind the scenes here between the channel, the debouncer, and then the actual code doing something with the events. Most likely because I haven't finished reading the async chapter of the rust book yet.
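To take a little of the magic out of that, here is a std-only sketch of the general pattern: one trait, implemented both for closures and for the sending half of a channel. The `Handler` trait and `emit_all` function are hypothetical stand-ins written for this illustration, not the actual definitions in notify or its debouncer crates.

```rust
use std::sync::mpsc::Sender;

/// Hypothetical stand-in for an event-handler trait
/// (not the real definition from the notify crates).
pub trait Handler {
    fn handle(&mut self, event: String);
}

/// Any closure taking a `String` can act as a handler...
impl<F: FnMut(String)> Handler for F {
    fn handle(&mut self, event: String) {
        self(event)
    }
}

/// ...and so can the sending half of a channel: "handling" an event
/// just means forwarding it to whoever holds the receiver.
impl Handler for Sender<String> {
    fn handle(&mut self, event: String) {
        // Ignore the error that occurs if the receiver was dropped.
        let _ = self.send(event);
    }
}

/// Hypothetical "watcher" that owns a handler and feeds it events.
pub fn emit_all<H: Handler>(mut handler: H, events: &[&str]) {
    for event in events {
        handler.handle(event.to_string());
    }
}
```

Seen this way, passing a sender is just a convenience: the library calls the handler, the handler's only job is to push the event into the channel, and our loop over the receiver picks it up on the other side.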

But, I think we can use this reference code and some better 2 variable names to keep moving forward on this. The other change is that rather than one event at a time, we now need to process a list of DebouncedEvent, which uses a different enum than what the base notify library was using, so our .kind field is now a DebouncedEventKind:

#[non_exhaustive]

pub enum DebouncedEventKind {

Any,

AnyContinuous,

}

Which is... significantly less useful than our previous event types. Though this is by design since the docs say:

Does not emit any specific event type on purpose, only distinguishes between an any event and a continuous any event.

For our purposes, I think these are effectively the same. So, let's just set it aside and write the loop to get the data from the file on whatever debounced event happens to come through to us (we've come full circle from wanting to be specific to just treating everything the same):

let (sender, receiver) = mpsc::channel();

let mut debouncer = new_debouncer(Duration::from_millis(50), sender).unwrap();

debouncer.watcher().watch(path, RecursiveMode::Recursive).unwrap();

// Block forever, printing out events as they come in

for res in receiver {

match res {

Ok(_) => {

let s = get_data_from_file(path);

let emotional_state = get_emotional_state(&s, &analyzer);

let image_to_show = state_to_image_file.get(&emotional_state).unwrap();

swap_obs_image_to(&image_source_id, &image_to_show, &client).await?;

}

Err(e) => eprintln!("watch error: {:?}", e),

}

}

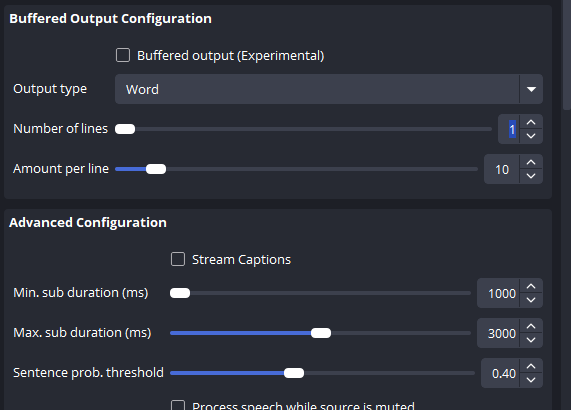

And honestly, this hasn't really changed too much from what we had before! But now if I run this and watch the output on the terminal with some prints... I only get one event each time I speak. Though, maybe more important than the debounce duration are the settings in localvocal that configure when it sends the update out to the text file:

By default it was on characters, and the number of lines was set to 2. Lowering the number of lines results in events coming from OBS to the file faster, so it seems like this has a bigger impact on latency between speech and action. But considering we refactored the OBS ip, port, and password out from being hardcoded, we can do the same to the duration here too.

pub struct Config {

/// Milliseconds to aggregate events from the local file system

/// for changes to the transcript file

#[arg(short = 'd', long = "debounce_milliseconds", default_value_t = 50)]

pub event_debouncing_duration_ms: u64,

...

}
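To make the debounce window a bit more concrete, here is a simplified std-only sketch of the idea: events that land within the window of the first event in a burst get swallowed. This is only an illustration of the concept under that assumption. It is not the algorithm notify-debouncer-mini actually implements, and `debounce` is a hypothetical function name.

```rust
use std::time::Duration;

/// Collapses bursts of events into one notification each.
///
/// `arrivals` are event times measured from program start, in ascending
/// order. An event opens a new burst only if it arrives at least `window`
/// after the first event of the current burst. Returns the start time of
/// each burst.
pub fn debounce(arrivals: &[Duration], window: Duration) -> Vec<Duration> {
    let mut bursts: Vec<Duration> = Vec::new();
    for &arrival in arrivals {
        // Is this event still inside the window opened by the current burst?
        let in_current_burst = bursts
            .last()
            .map_or(false, |&start| arrival < start + window);
        if !in_current_burst {
            bursts.push(arrival);
        }
    }
    bursts
}
```

With a 50 millisecond window, the two or three near-simultaneous file events from a single save would fall into one burst, while the next spoken line a few hundred milliseconds later would open a new one.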

Before we move on to the context part though, I don't like that it feels like we're mixing async styles together. Or at the very least, it feels wrong to await inside of an endless loop of events. Right now we're doing this:

swap_obs_image_to(&image_source_id, &image_to_show, &client).await?;

So, we'll read the list of debounced events, then we'll block while we tell OBS to change the image, then we'll receive the next event. This seems okay. But I can't get over the nagging feeling in my head that tokio async and channel events are different from each other in some important way that I don't understand.

So. I think it's time I took a break and went to read the chapter on concurrency to understand more before we make a decision on whether my instinct is right, or based on trauma from JavaScript's differing async models.

So I've learned some interesting fun facts about rust's async world. Here's the highlights that I think are worth pointing out:

- Rust's standard threads are raw native threads, red threads as you might call them. They're not virtual.

- mpsc stands for multiple producer single consumer

- tx and rx stand for transmitter and receiver, and much like the first time you saw i18n you wonder what people have against learning to spell

- The channel model is message passing, aka, actors, which I've used in Scala in the past with Akka

- If I want to share some data across multiple threads I'll need to deal with atomic reference counting and a mutex, aka, Arc<Mutex<T>>.

- Nowhere in this chapter did it cover the async keyword that the tokio code we're using references
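As a small std-only sketch of two of the points in the list above: `fan_in` shows the multiple-producer, single-consumer shape of a channel, and `shared_counter` shows the Arc plus Mutex combination for shared state. Both function names are made up for this illustration.

```rust
use std::sync::{mpsc, Arc, Mutex};
use std::thread;

/// Spawns `producers` threads that each send `per_producer` messages,
/// then drains them all from the single receiver.
pub fn fan_in(producers: usize, per_producer: usize) -> Vec<String> {
    let (tx, rx) = mpsc::channel::<String>();

    let handles: Vec<_> = (0..producers)
        .map(|id| {
            // "Multiple producer": every thread gets its own cloned sender.
            let tx = tx.clone();
            thread::spawn(move || {
                for n in 0..per_producer {
                    tx.send(format!("producer {id} message {n}")).unwrap();
                }
            })
        })
        .collect();

    // Drop the original sender so the receiver's iterator ends once
    // every cloned sender has gone out of scope.
    drop(tx);

    // "Single consumer": only this thread ever reads from `rx`.
    let received: Vec<String> = rx.iter().collect();
    for handle in handles {
        handle.join().unwrap();
    }
    received
}

/// Increments one shared counter from `threads` threads.
pub fn shared_counter(threads: usize) -> usize {
    let counter = Arc::new(Mutex::new(0usize));
    let handles: Vec<_> = (0..threads)
        .map(|_| {
            let counter = Arc::clone(&counter);
            thread::spawn(move || {
                *counter.lock().unwrap() += 1;
            })
        })
        .collect();
    for handle in handles {
        handle.join().unwrap();
    }
    // Copy the value out so the lock guard is released before returning.
    let total = *counter.lock().unwrap();
    total
}
```

The order in which messages from different producers arrive is not deterministic, only the order within a single producer is.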

So, it seems like there's still yet more to learn before I can really understand. At the very least, we've confirmed my suspicions are probably correct. Message passing is like actors, and async is something else. Most likely some form of green threads if I'd hazard a wild guess, but, if I go read the tokio docs...

Although Tokio is useful for many projects that need to do a lot of things simultaneously, there are also some use-cases where Tokio is not a good fit.

...

Tokio is designed for IO-bound applications where each individual task spends most of its time waiting for IO

I suppose in one way, I'm IO bound because I'm waiting for file events from notify, and then also waiting on OBS websocket messages. So it does leave me wondering if I should interface with OBS directly, or if instead I should consider just rendering the image in a window and having OBS capture that instead. I kept reading random tokio pages on what seemed interesting or relevant and eventually found this:

Rust implements asynchronous programming using a feature called async/await

...

Although other languages implement async/await too, Rust takes a unique approach. Primarily, Rust's async operations are lazy. This results in different runtime semantics than other languages.

Well that answers that question I had. So, the Tokio usage by the OBS library is using this and if I want to continue using it then I should be prepared to mix both the "real" threads of the notify library and the green threads of the tokio runtime. There's a useful article about bridging between tokio and synchronous runtimes in the tokio docs but I'm wondering if it's worth the effort for me right now.
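The "lazy" part of that quote can be seen without any runtime at all. The following is a minimal std-only sketch: calling an async function only builds a future, and the body runs once something polls it. The `NoopWake`, `mark_done`, and `laziness_demo` names are hypothetical, and polling by hand like this only works because the future never actually has to wait on anything.

```rust
use std::future::Future;
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::Arc;
use std::task::{Context, Poll, Wake, Waker};

/// A waker that does nothing; enough to poll a future that never waits.
struct NoopWake;

impl Wake for NoopWake {
    fn wake(self: Arc<Self>) {}
}

/// An async function with a visible side effect.
async fn mark_done(flag: Arc<AtomicBool>) {
    flag.store(true, Ordering::SeqCst);
}

/// Returns (did the body run before polling?, did it run after polling?).
pub fn laziness_demo() -> (bool, bool) {
    let flag = Arc::new(AtomicBool::new(false));

    // Calling the async fn only constructs the future. Nothing has run yet.
    let mut future = Box::pin(mark_done(Arc::clone(&flag)));
    let before = flag.load(Ordering::SeqCst);

    // Polling is what drives the body. A runtime such as tokio normally
    // does this for us via `.await` or `block_on`.
    let waker = Waker::from(Arc::new(NoopWake));
    let mut cx = Context::from_waker(&waker);
    let finished = matches!(future.as_mut().poll(&mut cx), Poll::Ready(()));
    let after = flag.load(Ordering::SeqCst);

    (before, after && finished)
}
```

This is why the OBS calls do nothing until they are awaited or handed to a runtime: the future is just a value describing work until something drives it.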

If only there was a simple synchronous way to display an image to be captured by OBS that I'd heard about before and been looking for an excuse to use...

Wait a minute.

Wait a minute!

Could an immediate mode rendering library be exactly what I need? Or, perhaps I should use a different toolkit I saw shared in L:Q's discord server the other day, nannou. I suppose we should compare and contrast which feels the best for our use case. So let's see how much effort it takes to get an image showing with one versus the other.

Well. In egui, with the windows backend, it looks like this sample gives us an idea. So, unsurprisingly, there's a lot of boilerplate. A lot of boilerplate, and I'm getting flashbacks to when I had a DirectX book in middle school after reading a C++ for Dummies book and how much code it took to get a triangle rendered on the screen.

What about with nannou?

That's a lot less code. Interestingly, there's an example of using egui with nannou! And it's still not really leaking the windows world everywhere which sort of feels nice. So. Before I dive in, let's think about which of these makes the most sense and what our options are here.

- Just keep OBS, await the async and move on

- Keep OBS, move the OBS work to its own thread and communicate with that thread via a channel or mutex

- Ditch OBS, use egui to display the image. Pass messages to the egui app to change the image as needed

- Ditch OBS, use nannou to display the image. Pass messages to the Model to change the image as needed

Now, when I started writing this blog post, and when I drew up our little diagram, I obviously wasn't thinking about ditching OBS. It does have some benefits if we do though: if we handle the drawing of the image ourselves, especially with Nannou, we've got access to easily draw on the picture itself or manipulate the texture and its mesh directly. That could give me flexibility in doing things like mouth flaps, blinking, or other similar tricks to make a picture of a character be reactive.

But it also means getting distracted from the motivation behind this post in the first place 3. So, let's stop waffling and start coding! First up, let's remove the magic by using the notes about bridging the sync code and async code:

use tokio::runtime::Builder;

let runtime = Builder::new_multi_thread()

.worker_threads(1)

.enable_all()

.build()

.unwrap();

Then, rewrite the use of async to call the block_on method

let client = runtime.block_on(Client::connect(ip, port, Some(password))).unwrap();

let image_source_id = runtime.block_on(get_image_scene_item(&client)).unwrap();

And most importantly, let's move the OBS async call out of the notify event loop, decoupling it via a new messaging channel:

let (obs_sender, obs_receiver) = mpsc::channel::<String>();

thread::spawn(move || {

for res in obs_receiver {

let image_to_show = res;

runtime.block_on(swap_obs_image_to(&image_source_id, &image_to_show, &client)).unwrap()

}

});

...

for res in receiver {

...

obs_sender.send(image_to_show.to_string()).unwrap();

...

Note that we're also spawning a separate thread for the OBS work. So we'll have our main thread blocking on and handling the events from notify, and we'll have a separate task running in the background on another thread to take in the image to show on OBS. Last, for all of this to work, we need to remove the #[tokio::main] macro from the main method because if we don't we get:

thread 'main' panicked at C:\Users\Ethan\.cargo\registry\src\index.crates.io-6f17d22bba15001f\tokio-1.43.0\src\runtime\scheduler\multi_thread\mod.rs:86:9:

Cannot start a runtime from within a runtime. This happens because a function (like `block_on`) attempted to block the current thread while the thread is being used to drive asynchronous tasks.
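Stripped of OBS and tokio, the decoupling described above boils down to a worker thread draining a channel. Here is a std-only sketch of that shape, where the `show` closure is a stand-in for the blocking OBS call and `spawn_display_worker` is a hypothetical helper name, not something from the actual program.

```rust
use std::sync::mpsc;
use std::thread::{self, JoinHandle};

/// Spawns a worker thread that applies `show` to every image path sent
/// to it, and returns the sender plus a handle that yields how many
/// images were shown. `show` stands in for the blocking OBS call.
pub fn spawn_display_worker<F>(mut show: F) -> (mpsc::Sender<String>, JoinHandle<usize>)
where
    F: FnMut(&str) + Send + 'static,
{
    let (sender, receiver) = mpsc::channel::<String>();
    let handle = thread::spawn(move || {
        let mut shown = 0;
        // This loop ends once every sender has been dropped.
        for image_path in receiver {
            show(&image_path);
            shown += 1;
        }
        shown
    });
    (sender, handle)
}
```

The event loop only ever calls `send`, which does not wait for the image swap to finish, so a slow display backend cannot stall the handling of file events.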

Our little main method is 50 lines now and still feels a bit clustered together with things, but the shape of what we're doing is starting to lend itself well and bubbling up ideas on how we might pick up some pieces and abstract them away to a struct to make things a bit simpler to manage and handle.

For example, everything related to the setup of talking to OBS is something I want to abstract away. If we did this in a way that the interface was just a generic sort of .setup and .show method, then I think that would easily hide away from our main thread whether we're doing something with OBS or using one of the GUI frameworks I mentioned. Which is what I ultimately want.
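One possible shape for that generic interface, sketched with std only. Everything here (`AvatarDisplay`, `RecordingDisplay`, `react`) is a hypothetical name for illustration, and the setup step is assumed to live in each backend's own constructor rather than in the trait.

```rust
/// Hypothetical interface the main loop could depend on, regardless of
/// whether OBS or a GUI window sits behind it.
pub trait AvatarDisplay {
    type Error: std::fmt::Debug;

    /// Switch the visible avatar to the image at `image_path`.
    fn show(&mut self, image_path: &str) -> Result<(), Self::Error>;
}

/// A fake backend that only records what it was asked to show,
/// which is handy for unit tests.
pub struct RecordingDisplay {
    pub shown: Vec<String>,
}

impl AvatarDisplay for RecordingDisplay {
    type Error = String;

    fn show(&mut self, image_path: &str) -> Result<(), String> {
        if image_path.is_empty() {
            return Err("empty image path".to_string());
        }
        self.shown.push(image_path.to_string());
        Ok(())
    }
}

/// The caller only sees the trait, never the concrete backend.
pub fn react<D: AvatarDisplay>(display: &mut D, image_path: &str) -> Result<(), D::Error> {
    display.show(image_path)
}
```

An OBS-backed implementation would keep the client and runtime as private fields and do its blocking inside `show`, so swapping backends would not touch the event loop.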

Let's take this one baby step at a time though, and just move the OBS stuff to its own module and then clean up the rust code. The code I want to clean up specifically are the two obs related methods, and the setup and configuration of the client within the main method.

let ip = config.obs_ip;

let password = config.obs_password;

let port = config.obs_port;

...

let client = runtime.block_on(Client::connect(ip, port, Some(password))).unwrap();

let image_source_id = runtime.block_on(get_image_scene_item(&client)).unwrap();

While not a huge deal, the block of 5 individual fields being pulled out into temporary variables from the config object kind of annoys me. Contrast that with the changed source code that simply does this:

let config = Config::parse_env();

let obs_control = OBSController::new(&config)?;

And the OBSController struct itself looks like this:

use crate::cli::Config;

use std::path::Path;

use tokio::runtime::Runtime;

use tokio::runtime::Builder;

use obws::requests::inputs::SetSettings;

use obws::requests::scene_items::Source;

use obws::responses::sources::SourceId;

use obws::Client;

use serde_json::json;

pub struct OBSController {

client: Client,

image_source_id: SourceId,

runtime: Runtime

}

impl OBSController {

pub fn new(config: &Config) -> Result<Self, obws::error::Error> {

let runtime = Builder::new_multi_thread()

.worker_threads(1)

.enable_all()

.build()

.unwrap();

let ip = config.obs_ip.clone();

let password = config.obs_password.clone();

let port = config.obs_port.clone();

let client = runtime.block_on(Client::connect(ip, port, Some(password)))?;

let image_source_name = String::from("Image"); // todo take from config

let image_source_id = runtime.block_on(get_image_scene_item(&client, &image_source_name))?;

Ok(OBSController {

client,

image_source_id,

runtime

})

}

pub fn swap_image_to(&self, new_file_path: &str) -> Result<(), obws::error::Error>{

let future = swap_obs_image_to(&self.image_source_id, new_file_path, &self.client);

self.runtime.block_on(future)?;

Ok(())

}

}

...

methods from before but with Result<SourceId, obws::error::Error> return type

Somewhere out there someone is screaming into the void about me setting up a runtime with a single worker thread inside of a struct. But, for now, I'm okay with this, we're basically writing a synchronous API for our main method to use and are going to happily hide away the fact that tokio is being used and there's anything async going on behind our two methods.

There's a bit of grossness in that the Error type for the Result is obws's error enum, but until I make a generic trait and want to swap out OBS, this is fine since all I ever do is catch and vomit up the error to the main method to panic and stop the program. This program's running on my machine, not some enterprise server that needs constant uptime.

Before we move on, let's update our configuration to address the TODO I left myself in the new method. This will remove one of the two hardcoded things I have for the OBS source finding; for now I'll leave the scene alone. We can always change the code to just fetch the current scene and avoid naming it.

pub struct Config {

...

/// Name of the image source within OBS that will be changed

#[arg(short = 's', long = "source_name", default_value = "Image")]

pub obs_source_name: String,

}

And the update to the OBS controller is as simple as let image_source_name = config.obs_source_name.clone();.

Super simple and easy. Let's move on: we can change the image in OBS to whatever string we send down the channel we set up, so we're okay for now. I'll look into using an actual "real" window the next time I blog about this.

The rolling buffer of context

This is honestly the whole motivation for this blog post. I thought it'd be real neat to have a rolling frame of text for what I'm saying out loud that can inform the avatar what to show. There's two aspects to this I think:

- A maximum context length I want to keep

- Drain that context over time

To keep this stupidly simple, I'm going to take advantage of the fact that I have the "truncate file" setting enabled for the OBS plugin, so every time it transcribes what I said to the file it overwrites the old. This means that each chunk of text comes to me on each file notify event. Great, so now what about that timing thing? Simple. Let's make tuples!

use std::time::Duration;

use std::time::Instant;

use std::collections::VecDeque;

...

let mut text_context: VecDeque<(Instant, String)> = VecDeque::new();

for res in receiver {

match res {

Ok(_) => {

let s = get_data_from_file(path);

let mut current_context = String::new();

let right_now = Instant::now();

let drop_time = right_now - Duration::from_secs(10); // TODO make configurable

text_context.push_back((right_now, s));

// TODO just pop the head of the list and check then bail if needed to save time.

text_context = text_context.into_iter().filter(|tuple| {

if tuple.0.ge(&drop_time) {

current_context.push_str(&tuple.1.clone());

}

return tuple.0.ge(&drop_time);

}).collect();

println!("{:?}", current_context);

let emotional_state = get_emotional_state(&current_context, &analyzer);

let image_to_show = state_to_image_file.get(&emotional_state).unwrap();

obs_sender.send(image_to_show.to_string()).unwrap();

}

Err(e) => {eprintln!("watch error: {:?}", e);}

};

For the quick and dirty approach to test that this idea works, I just went with iterating over a list and filtering out anything that was more than 10 seconds old. As you can see by my TODO note, I plan on making this configurable in much the same way as the previous values we had, and there's a small optimization we could make to only have to check the head of the list up until we find something we shouldn't drop, since the list is always in order since we append to the end.
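The head-of-the-list optimization from that TODO could look something like the sketch below. It assumes, as the paragraph above notes, that entries are always appended in chronological order. `prune_and_join` is a hypothetical helper name, and the current time is passed in as a parameter so the function is easy to test.

```rust
use std::collections::VecDeque;
use std::time::{Duration, Instant};

/// Drops entries older than `max_age` from the front of the buffer and
/// returns the remaining text joined in arrival order.
///
/// Relies on entries having been pushed in chronological order, so only
/// the head of the queue can ever be stale.
pub fn prune_and_join(
    buffer: &mut VecDeque<(Instant, String)>,
    now: Instant,
    max_age: Duration,
) -> String {
    // Copy the timestamp out so the buffer is free to be mutated below.
    while let Some(seen_at) = buffer.front().map(|(at, _)| *at) {
        if now.duration_since(seen_at) > max_age {
            buffer.pop_front();
        } else {
            // The first fresh entry means everything after it is fresh too.
            break;
        }
    }
    buffer.iter().map(|(_, text)| text.as_str()).collect()
}
```

Compared with the filter-and-collect version, this stops at the first entry that is still fresh instead of visiting and rebuilding the whole queue on every event.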

Running this program and then speaking out loud the minute and seconds I see on my clock at 5s increments shows me that the program is working pretty well:

"for 2040\r\n" "for 2040\r\nfor 2045\r\n" "for 2045\r\nfor 2050\r\n" "for 2050\r\nor 2055\r\n"

So, with the mental note to come back to my TODOs shortly, we're ready to move along to the next improvement idea!

Don't hardcode your emotions

The next thing I want to tackle is this:

#[derive(Debug, Hash, Eq, PartialEq)]

enum EmotionalState {

Neutral,

Mad,

MakingAPromise,

Sad,

Smug,

ThumbsUp,

}

fn get_emotion_to_image_map() -> HashMap<EmotionalState, &'static str> {

HashMap::from([

(EmotionalState::Neutral, "./data/neutral.png"),

(EmotionalState::Mad, "./data/mad.png"),

(EmotionalState::MakingAPromise, "./data/promise.png"),

(EmotionalState::Sad, "./data/sad.png"),

(EmotionalState::Smug, "./data/smug.png"),

(EmotionalState::ThumbsUp, "./data/thumbsup.png"),

])

}

While I like that my types are represented in the enum of what I want and what I know I have, it's not particularly fun to write code like this:

if sentence.contains("bummer") {

return EmotionalState::Sad;

}

...

let scores = analyzer.polarity_scores(&sentence);

if positive < negative && neutral < negative {

return EmotionalState::Mad;

}

Rather, I'd prefer to define a rule set that tells the system whether it should be looking for a keyword or a polarity score within some negative/positive/neutral range, along with a priority to apply these rules in. On top of that, I'd like to make it hot-reload, but I'm not tied to getting that done within this blog post, though it should honestly be as easy as setting up another file watch and mutating the rules list. But anyway, let's talk structure! What I think would make sense, given that I already have serde from the OBS library, would be to use a simple JSON representation of a rule:

{

priority: 0,

contains_words: [ "bummer" ],

action: {

show: "./data/sad.png"

}

}

For something like the simple trigger of a phrase or word in the original code, this seems like it would work and be extensible for later additions for actions. The second example, around the polarity score, is a bit trickier. The simplest thing would be to define ranges like

{

priority: 0,

polarity: {

within_pos: [0.0, 1.0],

within_neg: [0.0, 1.0],

within_neu: [0.0, 1.0]

}

}

But this doesn't really let us say that one score must be less than or greater than another, in the way we do now. We could maybe represent this with some optional fields that indicate whether one is less than, equal to, or greater than the other, with something like

{

priority: 0,

polarity: {

pos_to_neg: -1 | 0 | 1

pos_to_neu: -1 | 0 | 1

neu_to_neg: -1 | 0 | 1

}

}

But then that raises a question: do you represent both pos_relation_neg and neg_relation_pos? Do you define them strictly once for each pairing and have users represent neg_relation_pos: 1 as pos_relation_neg: -1? I'm leaning towards yes, because the alternative is an explosion of fields and extra confusion in actually applying them and figuring out the winners for each. So, for the code we noted before:

if positive < negative && neutral < negative {

return EmotionalState::Mad;

}

The equivalent JSON rule would be this:

{

priority: 0,

polarity: {

pos_to_neg: -1,

neu_to_neg: -1

},

action: {

show: "./data/mad.png"

}

}

But it's still a bit annoying, because if you think about it, if I swap sentiment libraries and the new one doesn't provide all three scores, or provides a different set of sentiments, I'd have to change the structure of the JSON, since the sentiment names are baked into the field names. So, let's loosen this up a bit and go with a rule definition like this:

{

"priority": 0,

"action": {

"show": "./data/mad.png"

},

"polarity": {

"left": "Positive",

"relation": "LT",

"right": "Negative"

}

}

This would give me a single enumeration to keep track of and would make the code changes a bit easier in the long run. Of course, I've lost my ability to represent more than one polarity relationship, but that's easily fixable by using an array of polarities instead. In fact, if we consider the different types of relationship, then we probably want an array of conditions to use too! So, with that in mind, the full rules JSON we'd want would be an array of these action + condition rules:

[

{

"priority": 0,

"action": {

"show": "./data/mad.png"

},

"condition": {

"polarity_relations": [

{

"left": "Positive",

"relation": "LT",

"right": "Negative"

}

]

}

}

]

And any sort of condition, whether it's a word search, a polarity range, or a polarity relationship can be represented in this form for us to use and tweak! We'll define the real code in a moment, but first we have one more field to address.

We just need to decide if priority is like golf or like soccer. Do we win high or low? I'm somewhat used to thinking of priority in terms of p0, p1, p2, and the like in regards to stuff like backlogs, business needs, and the like, where it's like golf with lower numbers implying urgency. So, 0 means you want something done now. Though it's just as valid to consider thinking of something with 0 priority as having no priority at all, and something with a literal high priority being a higher priority than one with a lower number. Anyway, let's roll with the one that matches English and say that the higher the number, the higher the priority.
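To make the "higher number wins" choice concrete, here's a rough sketch of how a rule list could be ordered. PrioritizedRule and sort_highest_first are made-up names for illustration, standing in for whatever the real rule type ends up being. It leans on std::cmp::Reverse so that a single stable sort puts the biggest priority first while leaving ties in the order they were written.

```rust
use std::cmp::Reverse;

/// Hypothetical stand-in for a rule: just a priority and a label.
#[derive(Debug, Clone, PartialEq)]
struct PrioritizedRule {
    priority: u32,
    name: &'static str,
}

/// Sorts so the highest priority comes first.
/// `sort_by_key` is stable, so rules with equal priority keep their original order.
fn sort_highest_first(rules: &mut [PrioritizedRule]) {
    rules.sort_by_key(|rule| Reverse(rule.priority));
}
```

Sorting ascending and then calling .reverse() also gets highest-first, but it flips the order of ties too, so which one is preferable depends on whether file order should break ties.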

Now it's time to learn how to use serde. I read through

the documentation and it seems pretty straightforward. By using the macros to derive both

Serialize and Deserialize we can call serde_json::from_str

to convert raw string data into strongly typed structs. So, let's whip up a few Rust types for

our two types of rules, and I think I might consider adding in the range-type things we saw before

because that seems like it will be useful in the long run.

use serde::{Deserialize, Serialize};

use std::error::Error;

use std::fs::File;

use std::io::BufReader;

use std::path::PathBuf;

#[derive(Debug, Serialize, Deserialize, Clone)]

pub struct SentimentAction {

pub show: String

}

#[derive(Debug, Serialize, Deserialize)]

pub enum SentimentField {

Positive,

Negative,

Neutral

}

/// Expresses a condition that the given sentiment field will be within the range (inclusive)

#[derive(Debug, Serialize, Deserialize)]

pub struct PolarityRange {

pub low: f64,

pub high: f64,

pub field: SentimentField

}

#[derive(Debug, Serialize, Deserialize)]

pub enum Relation {

/// Greater than

GT,

/// Less than

LT,

/// Equal to

EQ

}

#[derive(Debug, Serialize, Deserialize)]

pub struct PolarityRelation {

pub relation: Relation,

pub left: SentimentField,

pub right: SentimentField

}

#[derive(Debug, Serialize, Deserialize)]

pub struct SentimentCondition {

pub contains_words: Option<Vec<String>>,

pub polarity_ranges: Option<Vec<PolarityRange>>,

pub polarity_relations: Option<Vec<PolarityRelation>>

}

impl SentimentCondition {

pub fn is_empty(&self) -> bool {

self.contains_words.is_none() &&

self.polarity_ranges.is_none() &&

self.polarity_relations.is_none()

}

}

#[derive(Debug, Serialize, Deserialize)]

pub struct SentimentRule {

pub priority: u32,

pub action: SentimentAction,

pub condition: SentimentCondition

}

pub fn load_from_file(path: &PathBuf) -> Result<Vec<SentimentRule>, Box<dyn Error>> {

let file = File::open(path)?;

let reader = BufReader::new(file);

let parsed: Vec<SentimentRule> = serde_json::from_reader(reader)?;

let mut valid_rules: Vec<SentimentRule> = parsed.into_iter().filter(|unvalidated_rule| {

!unvalidated_rule.condition.is_empty()

}).collect();

valid_rules.sort_by_key(|rule| rule.priority);

valid_rules.reverse();

Ok(valid_rules)

}

That's a big block of code, but it's honestly mostly boilerplate. We've defined a number of structs, and I've opted to make the condition a single struct rather than an enum type for what I'd refer to as the 3 subtypes of a condition. Primarily I'm doing this because I'm assuming that, like most JSON libraries, serde is going to need some custom code to get things working if I do that: some sort of tag or serializer to inspect the fields and choose the correct type to deserialize as. I'm not looking to get overly deep with the serde library right now (though that could be a fun topic for another day), so I'm just using optional fields and then a helper method to check the condition really is set to something.

With said conditions set up, and a method to load the rules from a file, it's time to convert the

old code for the enums and helper method to do condition checks into a generic rule processor that

will output an action. We had two helpers to do most of the work here: get_emotion_to_image_map,

which was a mapping from the enum to the images, and the get_emotional_state method that

actually performs the analysis.

The emotion map has basically been replaced by the rules we're loading in from the JSON file, but the enum types aren't present in that data. We could specify them, but then we'd be sacrificing the flexibility of the conditions we've made, so, let's just lose the enum types entirely. That means that our return type will change a bit as well since rather than a fixed type, we'll just return the action command we care about instead which will have a string for the path in it. So let's do that first. This

let state_to_image_file = get_emotion_to_image_map();

let emotional_state = get_emotional_state(&current_context, &analyzer);

let image_to_show = state_to_image_file.get(&emotional_state).unwrap();

...

fn get_emotional_state(

sentence: &str,

analyzer: &vader_sentiment::SentimentIntensityAnalyzer,

) -> EmotionalState {

Becomes this:

use rules::SentimentAction;

let sentiment_action = get_emotional_state(&current_context, &analyzer);

let image_to_show = sentiment_action.show;

...

fn get_emotional_state(

sentence: &str,

analyzer: &vader_sentiment::SentimentIntensityAnalyzer,

) -> SentimentAction {

The actual body of the get_emotional_state changes too obviously. Instead of returning the enum, we

just swap it out and basically collapse the old emotional map into actions instead. For example, the good job enum

return becomes a struct like so:

fn get_emotional_state(

sentence: &str,

analyzer: &vader_sentiment::SentimentIntensityAnalyzer,

) -> SentimentAction {

// if sentence.contains("good job") {

// return EmotionalState::ThumbsUp;

// }

if sentence.contains("good job") {

return SentimentAction {

show: "./data/thumbsup.png".to_string()

};

}

Now that we've changed what we're returning, the name is bad. So. Fixing that, I'll rename get_emotional_state

to get_action_for_sentiment and then confirm everything still works. Yup. Cool, let's move onto using the

helper we made before and then replace the various if conditions with a filtering operation instead. We've got the method

to load the rules via rules::load_from_file, though I've forgotten to sort the rules by the priority on load,

a quick valid_rules.sort_by_key(|rule| rule.priority); will fix that though, followed by a .reverse().

So! Onto applying our rules to our context and getting the action we should be taking!

fn get_action_for_sentiment(

sentence: &str,

analyzer: &vader_sentiment::SentimentIntensityAnalyzer,

current_rules: &[SentimentRule]

) -> SentimentAction {

let scores = analyzer.polarity_scores(sentence);

let positive = scores.get("pos").unwrap_or(&0.0);

let negative = scores.get("neg").unwrap_or(&0.0);

let neutral = scores.get("neu").unwrap_or(&0.0);

let maybe_action = current_rules.iter().find(|&rule| {

let condition = &rule.condition;

// fix this up so it doesn't immediately return

if let Some(words) = &condition.contains_words {

let contains_words = words.iter().any(|word| {

sentence.contains(word)

});

if contains_words {

return true;

}

}

if let Some(ranges) = &condition.polarity_ranges {

let is_in_range = ranges.iter().any(|range| {

let field = match &range.field {

SentimentField::Positive => positive,

SentimentField::Negative => negative,

SentimentField::Neutral => neutral

};

range.low <= *field && *field <= range.high

});

if is_in_range {

return true;

}

}

if let Some(relations) = &condition.polarity_relations {

let relation_is_true = relations.iter().any(|relation| {

let left = match relation.left {

SentimentField::Positive => positive,

SentimentField::Negative => negative,

SentimentField::Neutral => neutral

};

let right = match relation.right {

SentimentField::Positive => positive,

SentimentField::Negative => negative,

SentimentField::Neutral => neutral

};

match &relation.relation {

Relation::GT => left > right,

Relation::LT => left < right,

Relation::EQ => left == right,

}

});

if relation_is_true {

return true;

}

}

false

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => SentimentAction {

show: "./data/neutral.png".to_string()

}

}

}

We need to refactor this, but let's break it down first. As we know, each rule basically has one condition,

so we don't need to consider the possibility of a rule wanting to have more than one satisfied for now.

So that keeps this simple because we can write a code block for each of the 3 types of rules and early return if

there's a match. Doing this in our .find code means that we'll advance forward in our list of rules

until we find the first match, which we know will be the highest priority rule since we sorted the rules ahead

of time.
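Here's that "first match wins" idea boiled down to a tiny standalone sketch. KeywordRule and first_match are hypothetical, keyword-only stand-ins for the real rule types, and the slice is assumed to already be sorted highest priority first.

```rust
/// Hypothetical minimal rule: a priority, a keyword to look for, and an image path.
struct KeywordRule {
    priority: u32,
    keyword: &'static str,
    show: &'static str,
}

/// Returns the image for the first rule whose keyword appears in the sentence,
/// or the fallback when nothing matches.
/// Assumes `rules` is already sorted highest priority first.
fn first_match(rules: &[KeywordRule], sentence: &str, fallback: &'static str) -> &'static str {
    rules
        .iter()
        .find(|rule| sentence.contains(rule.keyword))
        .map(|rule| rule.show)
        .unwrap_or(fallback)
}
```

When a sentence satisfies more than one rule, the earlier (higher priority) one is the one that gets returned, which is the whole point of sorting up front.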

That said, this method doesn't “spark joy” as it were. Namely because I feel like while I know the logic now, in a month or two, I'd have to spend time getting back up to speed on the three blocks of code in this code to understand it. I don't like that, so before we move on, let's yank out each of the closures we made into their own methods so that things are a bit more self-documenting. First, the word match:

/// Returns None if no rule defined, Some(T|F) for if there was a match otherwise

fn context_contains_words(rule: &SentimentRule, sentence: &str) -> Option<bool> {

let condition = &rule.condition;

if let Some(words) = &condition.contains_words {

let contains_words = words.iter().any(|word| {

sentence.contains(word)

});

return Some(contains_words);

}

None

}

This is pretty easy. We're just moving the closure from the any up and giving it a name. For

the polarity ranges, we'll do the same, but first I want to wrap up the polarity from the map we get from

the vader sentiment into our own model to make life simpler:

struct ContextPolarity {

positive: f64,

negative: f64,

neutral: f64

}

impl ContextPolarity {

fn for_field(&self, field: &SentimentField) -> f64 {

match field {

SentimentField::Positive => self.positive,

SentimentField::Negative => self.negative,

SentimentField::Neutral => self.neutral,

}

}

}

And then we can use this to write up the closure:

fn context_in_polarity_range(rule: &SentimentRule, polarity: &ContextPolarity) -> Option<bool> {

let condition = &rule.condition;

if let Some(ranges) = &condition.polarity_ranges {

let is_in_range = ranges.iter().all(|range| {

let field = polarity.for_field(&range.field);

range.low <= field && field <= range.high

});

return Some(is_in_range)

}

None

}

This is a little bit nicer than it was before since the match is tucked away in the for_field method

of the ContextPolarity struct. This means we won't repeat that match when we do our next closure either

because it's been DRYed up! Also, I realized that I was using any here when I should have been using

all, so taking a bit more time to separate the code helps catch a small oversight from before. Lovely!
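The any versus all difference is easy to see in a small standalone sketch. ScoreRange and the two functions below are hypothetical, trimmed-down versions of the real types, with the score being checked passed in next to each range. With two ranges on a rule, any fires as soon as one is satisfied, while all requires both.

```rust
/// Hypothetical, simplified range with inclusive bounds.
struct ScoreRange {
    low: f64,
    high: f64,
}

impl ScoreRange {
    fn contains(&self, value: f64) -> bool {
        self.low <= value && value <= self.high
    }
}

/// Matches when at least one (range, value) pair is satisfied.
fn matches_any(checks: &[(ScoreRange, f64)]) -> bool {
    checks.iter().any(|(range, value)| range.contains(*value))
}

/// Matches only when every (range, value) pair is satisfied.
fn matches_all(checks: &[(ScoreRange, f64)]) -> bool {
    checks.iter().all(|(range, value)| range.contains(*value))
}
```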

So, the relations helper code looks like this now that it's using our struct:

fn context_has_polarity_relations(rule: &SentimentRule, polarity: &ContextPolarity) -> Option<bool> {

let condition = &rule.condition;

if let Some(relations) = &condition.polarity_relations {

let relation_is_true = relations.iter().all(|relation| {

let left = polarity.for_field(&relation.left);

let right = polarity.for_field(&relation.right);

match &relation.relation {

Relation::GT => left > right,

Relation::LT => left < right,

Relation::EQ => left == right,

}

});

return Some(relation_is_true);

}

None

}

Definitely easier to read I think, we could probably add a helper method onto the relation for the rule to return true or false based on the type and nix that match from the code, but since we only had to do this in one place, I think it's fine to leave it as is for now. Let's do one more quick refactor and then we'll fix up our rules loop to start using these handy methods!
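For reference, the Relation helper mentioned above might look something like this sketch. The holds method name is made up for illustration, and the enum is redeclared here without the serde derives so the snippet stands alone.

```rust
/// Same three variants as the rules module, minus the serde derives.
#[derive(Debug, Clone, Copy, PartialEq)]
enum Relation {
    GT,
    LT,
    EQ,
}

impl Relation {
    /// Hypothetical helper: true when `left <relation> right` holds.
    fn holds(&self, left: f64, right: f64) -> bool {
        match self {
            Relation::GT => left > right,
            Relation::LT => left < right,
            // Exact float equality, same as the match it would replace.
            Relation::EQ => left == right,
        }
    }
}
```

With something like this in place, the closure body would shrink to a single call along the lines of relation.relation.holds(left, right).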

Since we've defined ContextPolarity to wrap the result of the vader analysis, it makes sense to

me to take the vader code out of the get_action_for_sentiment method and push it out to the main

method so that we don't talk directly to that foreign library within our code, and rather work only with our

domain types we've made ourselves. So, we'll take this code that I have sitting in that method:

let scores = analyzer.polarity_scores(sentence);

let positive = scores.get("pos").unwrap_or(&0.0);

let negative = scores.get("neg").unwrap_or(&0.0);

let neutral = scores.get("neu").unwrap_or(&0.0);

let polarity = ContextPolarity {

positive: *positive,

negative: *negative,

neutral: *neutral

};

and move it upwards and wrap it with a helper method. Once we do that we can call it from the main method

and tweak get_action_for_sentiment to take in the polarity struct instead of the vader sentiment

struct. So the top of our code now looks like this:

fn get_context_polarity(sentence: &str, analyzer: &vader_sentiment::SentimentIntensityAnalyzer) -> ContextPolarity {

let scores = analyzer.polarity_scores(sentence);

let positive = scores.get("pos").unwrap_or(&0.0);

let negative = scores.get("neg").unwrap_or(&0.0);

let neutral = scores.get("neu").unwrap_or(&0.0);

ContextPolarity {

positive: *positive,

negative: *negative,

neutral: *neutral

}

}

... in our main method

let polarity = get_context_polarity(&current_context, &analyzer);

let sentiment_action = get_action_for_sentiment(&current_context, &polarity, &rules);

let image_to_show = sentiment_action.show;

and then the actual method we've been refactoring is a bit simpler to read at a glance now:

fn get_action_for_sentiment(

sentence: &str,

polarity: &ContextPolarity,

current_rules: &[SentimentRule]

) -> SentimentAction {

let maybe_action = current_rules.iter().find(|&rule| {

let maybe_contextual_word_match = context_contains_words(rule, sentence);

if let Some(is_word_matched) = maybe_contextual_word_match {

if is_word_matched {

return true;

}

}

let maybe_polarity_in_range = context_in_polarity_range(rule, polarity);

if let Some(is_in_range) = maybe_polarity_in_range {

if is_in_range {

return true;

}

}

let maybe_polarity_relation_applies = context_has_polarity_relations(rule, polarity);

if let Some(relation_is_true) = maybe_polarity_relation_applies {

if relation_is_true {

return true;

}

}

false

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => SentimentAction {

show: "./data/neutral.png".to_string()

}

}

}

This is a lot easier to read for me, it's simple to see that we've got three checks going on for each rule, and that we early return for each. But, I'm not satisfied yet. There's two more tweaks I want to do.

- Support multi-condition rule matching

- Push all these misc methods we just wrote out of main.rs and into rules.rs where they belong

I find shifting things around to be the more bothersome of the two, so I'll tolerate our main file

being cluttered for now. Instead let's take a gander at the 3 checks. It's pretty simple to see that

all three of them return the same type: Option<bool>. And if there's a Some

then that means that there was a condition being checked. This suggests a pretty simple clean up operation

to me:

fn get_action_for_sentiment(

sentence: &str,

polarity: &ContextPolarity,

current_rules: &[SentimentRule]

) -> SentimentAction {

let maybe_action = current_rules.iter().find(|&rule| {

let rule_checks = vec![

context_contains_words(rule, sentence),

context_in_polarity_range(rule, polarity),

context_has_polarity_relations(rule, polarity)

];

let applicable_checks: Vec<bool> = rule_checks

.iter()

.filter_map(|&rule_result| rule_result)

.collect();

if applicable_checks.is_empty() {

return false;

}

return applicable_checks.iter().all(|&bool| bool);

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => SentimentAction {

show: "./data/neutral.png".to_string()

}

}

}

Rather than have a sequence of if statements and have to do some multi-line condition to

confirm that yes there was a rule for this, and yes it was true for each type, we

can collect the checks into a list then filter that down. If the list is empty once we

remove the Option wrapper then we hit the edge case of an empty rule. That shouldn't

happen, but let's not go trusting ourselves to not mess up. This also, more importantly, filters

out any boolean from a conditional check that the rule didn't specify. So if a rule didn't say it

only applies if certain words appear, we don't have to think about that condition at all as being

represented in our final list of booleans. So, with that list of booleans,

we can confirm that all of them are true and that means our rule applies! Note that since

.all returns true on an empty list, the edge case check is also what keeps a rule with

no applicable checks from matching everything.

To me, this is a lot easier to read than having to put a bunch of context into my head about multiple conditions and checks we're doing, even if after doing so we can state simply what the boolean logic would mean. So, this is good, and our method is easily readable, and the refactoring we did made it very simple to support multi-faceted rules too!
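The empty-list edge case is worth seeing in isolation. In this sketch, rule_matches is a hypothetical free function that takes the three Option<bool> results directly. It uses flatten to drop the None values, which should behave the same as the filter_map in the loop.

```rust
/// Hypothetical standalone version of the check-combining logic.
/// `None` means the rule did not specify that kind of condition.
fn rule_matches(checks: &[Option<bool>]) -> bool {
    let applicable: Vec<bool> = checks.iter().copied().flatten().collect();
    // `all` is true for an empty iterator, so an empty rule must be rejected explicitly.
    !applicable.is_empty() && applicable.iter().all(|&passed| passed)
}
```

Calling it with three None values gives false, while a mix of Some(true) and None gives true, since the unspecified conditions simply drop out.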

Great, so now let's move onto the cleanup! We've got a bunch of little helpers in the main.rs file and that's violating the way that the Rust book suggests we structure our programs. It also just feels messy to me. One key observation we can make for this next refactor is that the signatures of our helper methods all have one thing in common:

fn context_contains_words(rule: &SentimentRule, sentence: &str) -> Option<bool>

fn context_in_polarity_range(rule: &SentimentRule, polarity: &ContextPolarity) -> Option<bool>

fn context_has_polarity_relations(rule: &SentimentRule, polarity: &ContextPolarity) -> Option<bool>

In case you're not feeling like the wolf in the picture above, the first argument to each of these is the same type.

Just a reference to the rule we're checking on. This implies to me that a better home for these would be attached

to that type, since there's not much difference between rule: &SentimentRule and &self

inside of an implementation block. Additionally, the first line of each of these methods just lifts the condition

field up for ease of access, so again, we can see that the home they're gravitating towards is:

impl SentimentCondition {

fn context_contains_words(&self, sentence: &str) -> Option<bool> {

if let Some(words) = &self.contains_words {

let contains_words = words.iter().any(|word| {

sentence.contains(word)

});

return Some(contains_words);

}

None

}

... the others here

}

And similarly, the body of the loop itself can be also pushed inward to the rule struct:

impl SentimentRule {

pub fn applies_to(&self, sentence: &str, polarity: &ContextPolarity) -> bool {

let rule_checks = vec![

self.condition.context_contains_words(sentence),

self.condition.context_in_polarity_range(polarity),

self.condition.context_has_polarity_relations(polarity)

];

let applicable_checks: Vec<bool> = rule_checks

.iter()

.filter_map(|&rule_result| rule_result)

.collect();

if applicable_checks.is_empty() {

return false;

}

applicable_checks.iter().all(|&bool| bool)

}

}

And with that, our main.rs loses the cluttersome helper methods and its loop becomes simple as can be:

fn get_action_for_sentiment(

sentence: &str,

polarity: &ContextPolarity,

current_rules: &[SentimentRule]

) -> SentimentAction {

let maybe_action = current_rules.iter().find(|&rule| {

rule.applies_to(sentence, polarity)

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => SentimentAction {

show: "./data/neutral.png".to_string()

}

}

}

At this point we've still got a couple helper methods in our main.rs file, and the loop on the file watch receiver is a bit clunkier feeling than I'd like. But at the very least, we're no longer hardcoding the emotions into enumerations and modifying the JSON file for our rules and restarting the program will apply them to our OBS image changes just like before which is pretty good progress. So, let's move on.

Sentiment Engine ↩

To finish cleaning up our main.rs file, I want to make this part of the code smaller:

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

let mut text_context: VecDeque<(Instant, String)> = VecDeque::new();

// Blocks forever

for res in receiver {

match res {

Ok(_) => {

let s = fs::read_to_string(path).expect("could not get text data from file shared with localvocal");

let mut current_context = String::new();

let right_now = Instant::now();

let drop_time = right_now - Duration::from_secs(10); // TODO make configurable

text_context.push_back((right_now, s));

text_context.retain(|tuple| {

if tuple.0.ge(&drop_time) {

current_context.push_str(&tuple.1.clone());

}

tuple.0.ge(&drop_time)

});

let polarity = get_context_polarity(&current_context, &analyzer);

let sentiment_action = get_action_for_sentiment(&current_context, &polarity, &rules);

let image_to_show = sentiment_action.show;

obs_sender.send(image_to_show.to_string()).unwrap();

}

Err(e) => {eprintln!("watch error: {:?}", e);}

};

}

I think having the file read happen in the loop is fine, but our text content buffer and the steps to get the action to send over to the OBS thread would be better off wrapped up in a little box to make life easier in the future. So, let's start by defining a struct to capture the various bits of context we've got and need. In order to keep the vader sentiment library out of our types I'm going to use a closure to get the polarity:

struct SentimentEngine<PolarityClosure>

where

PolarityClosure: Fn(&str) -> ContextPolarity

{

text_context: VecDeque<(Instant, String)>,

current_context: String,

rules: Vec<SentimentRule>,

polarity_closure: PolarityClosure

}

We'll start out with no rules, mainly because I want to declare the engine with just the closure as its starting argument and feel like it would be weird to pass in both the rules list, and the closure at the same time. It just feels funny to me. Anyway, we'll set the rules with a helper method:

impl<PolarityClosure> SentimentEngine<PolarityClosure>

where

PolarityClosure: Fn(&str) -> ContextPolarity

{

fn new(polarity_closure: PolarityClosure) -> Self {

SentimentEngine {

text_context: VecDeque::new(),

current_context: String::new(),

rules: Vec::new(),

polarity_closure

}

}

fn set_rules(&mut self, rules: Vec<SentimentRule>) {

self.rules = rules;

}

}
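One nice side effect of taking a closure, sketched below under some assumptions: the engine can be exercised without the real analyzer at all. Polarity and Engine here are cut-down, hypothetical stand-ins for ContextPolarity and SentimentEngine, and fake_polarity is an invented stub, all kept small so the snippet stands alone.

```rust
/// Cut-down stand-in for ContextPolarity.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Polarity {
    positive: f64,
    negative: f64,
    neutral: f64,
}

/// Cut-down stand-in for SentimentEngine: only the closure and the current text.
struct Engine<F>
where
    F: Fn(&str) -> Polarity,
{
    current_context: String,
    polarity_closure: F,
}

impl<F> Engine<F>
where
    F: Fn(&str) -> Polarity,
{
    fn new(polarity_closure: F) -> Self {
        Engine { current_context: String::new(), polarity_closure }
    }

    fn get_polarity(&self) -> Polarity {
        // Parentheses are needed to call a closure stored in a field.
        (self.polarity_closure)(self.current_context.as_str())
    }
}

/// A fake analyzer: any text containing "bummer" is fully negative.
fn fake_polarity(sentence: &str) -> Polarity {
    if sentence.contains("bummer") {
        Polarity { positive: 0.0, negative: 1.0, neutral: 0.0 }
    } else {
        Polarity { positive: 0.0, negative: 0.0, neutral: 1.0 }
    }
}
```

Passing fake_polarity to Engine::new works because plain functions satisfy the same Fn bound as closures, so a test can feed in predictable scores.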

And we can move the rolling buffer for the current context, as well as

creating that context up into an add_context method:

fn add_context(&mut self, new_content: String) {

let right_now = Instant::now();

let drop_time = right_now - Duration::from_secs(10); // TODO make configurable

let mut current_context = String::new();

self.text_context.push_back((right_now, new_content));

self.text_context.retain(|tuple| {

if tuple.0.ge(&drop_time) {

current_context.push_str(&tuple.1.clone());

}

tuple.0.ge(&drop_time)

});

self.current_context = current_context;

}

That TODO I'll add in after we finish this refactor, since we don't need to veer off into the clap

struct while we're in SentimentEngine mode. If we handle it with another setter, like the rules,

then we'll be able to change it at runtime as desired or based on whatever rules we come up with like

dead air or whatever if we get into tracking that sort of thing. But first, let's finish this struct

off by moving the functionality of the two helpers in our main file get_action_for_sentiment

and get_context_polarity into the struct a bit:

fn get_polarity(&self) -> ContextPolarity {

(self.polarity_closure)(&self.current_context)

}

fn get_action(&self) -> SentimentAction {

let polarity = self.get_polarity();

let maybe_action = self.rules.iter().find(|&rule| {

rule.applies_to(&self.current_context, &polarity)

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => SentimentAction {

show: "./data/neutral.png".to_string()

}

}

}
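Circling back to the drop-time TODO in add_context, here's one way the setter idea could be sketched. RollingContext, set_window, and add are hypothetical names, and the timestamp is passed in rather than read from the clock so the behaviour is easy to check. It also compares elapsed time instead of subtracting a Duration from an Instant, since that subtraction can panic when the result can't be represented.

```rust
use std::collections::VecDeque;
use std::time::{Duration, Instant};

/// Hypothetical rolling text buffer with an adjustable window.
struct RollingContext {
    entries: VecDeque<(Instant, String)>,
    window: Duration,
}

impl RollingContext {
    fn new(window: Duration) -> Self {
        RollingContext { entries: VecDeque::new(), window }
    }

    /// Lets the window be changed at runtime.
    fn set_window(&mut self, window: Duration) {
        self.window = window;
    }

    /// Adds text stamped with `now`, drops anything older than the window,
    /// and returns the remaining text joined together.
    fn add(&mut self, now: Instant, text: String) -> String {
        self.entries.push_back((now, text));
        let window = self.window;
        self.entries
            .retain(|(seen_at, _)| now.saturating_duration_since(*seen_at) <= window);
        self.entries.iter().map(|(_, text)| text.as_str()).collect()
    }
}
```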

Then we can completely replace most of our main method's receive block with the usage of our new engine:

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

let mut polarity_engine = SentimentEngine::new(|sentence| {

get_context_polarity(sentence, &analyzer)

});

polarity_engine.set_rules(rules);

...

for res in receiver {

match res {

Ok(_) => {

let s = fs::read_to_string(path).expect("could not get text data from file shared with localvocal");

polarity_engine.add_context(s);

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action.show.to_string()).unwrap();

}

Err(e) => {

eprintln!("watch error: {:?}", e);

}

};

}

And that feels a bit less messy and easier to read than before. We'll see if I still agree with that by the time I write my next blog post, but for now, this will do! The only remaining thing is to move the struct and the helper method over to its own file to get it out of the main.rs file and I'll call it a day! Doing this and tweaking the imports results in a pretty straightforward main method:

fn main() -> anyhow::Result<()> {

let config = Config::parse_env();

let rules = load_from_file(&config.rules_file).unwrap_or_else(|e| {

panic!(

"Could not load rules file [{0}] {1}",

config.rules_file.to_string_lossy(),

e

)

});

let obs_control = OBSController::new(&config)?;

let (obs_sender, obs_receiver) = mpsc::channel::<String>();

thread::spawn(move || {

for image_to_show in obs_receiver {

obs_control.swap_image_to(&image_to_show).expect("OBS failed to swap images");

}

});

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

let mut polarity_engine = SentimentEngine::new(|sentence| {

get_context_polarity(sentence, &analyzer)

});

polarity_engine.set_rules(rules);

let (sender, receiver) = mpsc::channel();

let debounce_milli = config.event_debouncing_duration_ms;

let mut debouncer = new_debouncer(Duration::from_millis(debounce_milli), sender).unwrap();

let path = config.input_text_file_path.as_path();

debouncer.watcher().watch(path, RecursiveMode::Recursive).unwrap();

// Blocks forever

for res in receiver {

match res {

Ok(_) => {

let s = fs::read_to_string(path).expect("could not get text data from file shared with localvocal");

polarity_engine.add_context(s);

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action.show.to_string()).unwrap();

}

Err(e) => {

eprintln!("watch error: {:?}", e);

}

};

}

Ok(())

}

Giving it another read through, I think that I might want to change the type of our channel. Rather than the plain

string, where we're sending along the string, I sort of want to send along the sentiment action type instead. This

seems like a good idea to me because, while I'm only sending along the show field right now, if we

expand this with other actions, then that type won't change and the receiving OBS code can expand to handle multiple

action requests more easily than if we had to check the string for some notion of "are you a file or not".

Lucky for us, according to the Rust book:

Any type composed entirely of Send types is automatically marked as Send as well. Almost all primitive types are Send, aside from raw pointers, which we'll discuss in Chapter 20.

So we can easily swap mpsc::channel::<String>(); out for mpsc::channel::<SentimentAction>();

and then tweak the main method to fix the type errors the compiler now raises.

let obs_control = OBSController::new(&config)?;

let (obs_sender, obs_receiver) = mpsc::channel::<SentimentAction>();

thread::spawn(move || {

for image_to_show in obs_receiver {

obs_control.swap_image_to(&image_to_show.show).expect("OBS failed to swap images");

}

});

...

Ok(_) => {

let new_context = fs::read_to_string(path).expect("could not get text data from file shared with localvocal");

polarity_engine.add_context(new_context);

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action).unwrap();

}
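Here's a small self-contained sketch of the same idea, with a hypothetical Action struct standing in for SentimentAction and a worker thread that just collects what it receives instead of talking to OBS. The function name is invented for the example.

```rust
use std::sync::mpsc;
use std::thread;

/// Hypothetical stand-in for SentimentAction. A struct made only of `Send`
/// fields (here a String) is itself `Send`, so it can cross the channel.
#[derive(Debug, Clone, PartialEq)]
struct Action {
    show: String,
}

/// Sends each action to a worker thread and returns what the worker saw.
fn send_actions_through_channel(actions: Vec<Action>) -> Vec<String> {
    let (sender, receiver) = mpsc::channel::<Action>();

    // The worker plays the role of the OBS thread.
    let worker = thread::spawn(move || {
        let mut shown = Vec::new();
        for action in receiver {
            shown.push(action.show);
        }
        shown
    });

    for action in actions {
        sender.send(action).expect("worker hung up early");
    }
    // Dropping the sender closes the channel so the worker's loop ends.
    drop(sender);

    worker.join().expect("worker panicked")
}
```

If more kinds of actions show up later, the receiving side could match on the struct's fields (or on an enum) rather than guessing what a bare string means.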

And now I'm satisfied. Our main file is lean and describes the edges of our program and its needs in one simple shot, the business logic for the rules and their application are contained within modules, and the context we're using for what we should be doing is nestled away inside of a little engine struct that can tell us what action OBS should be doing at any time we need. Lovely.

Wrap up ↩

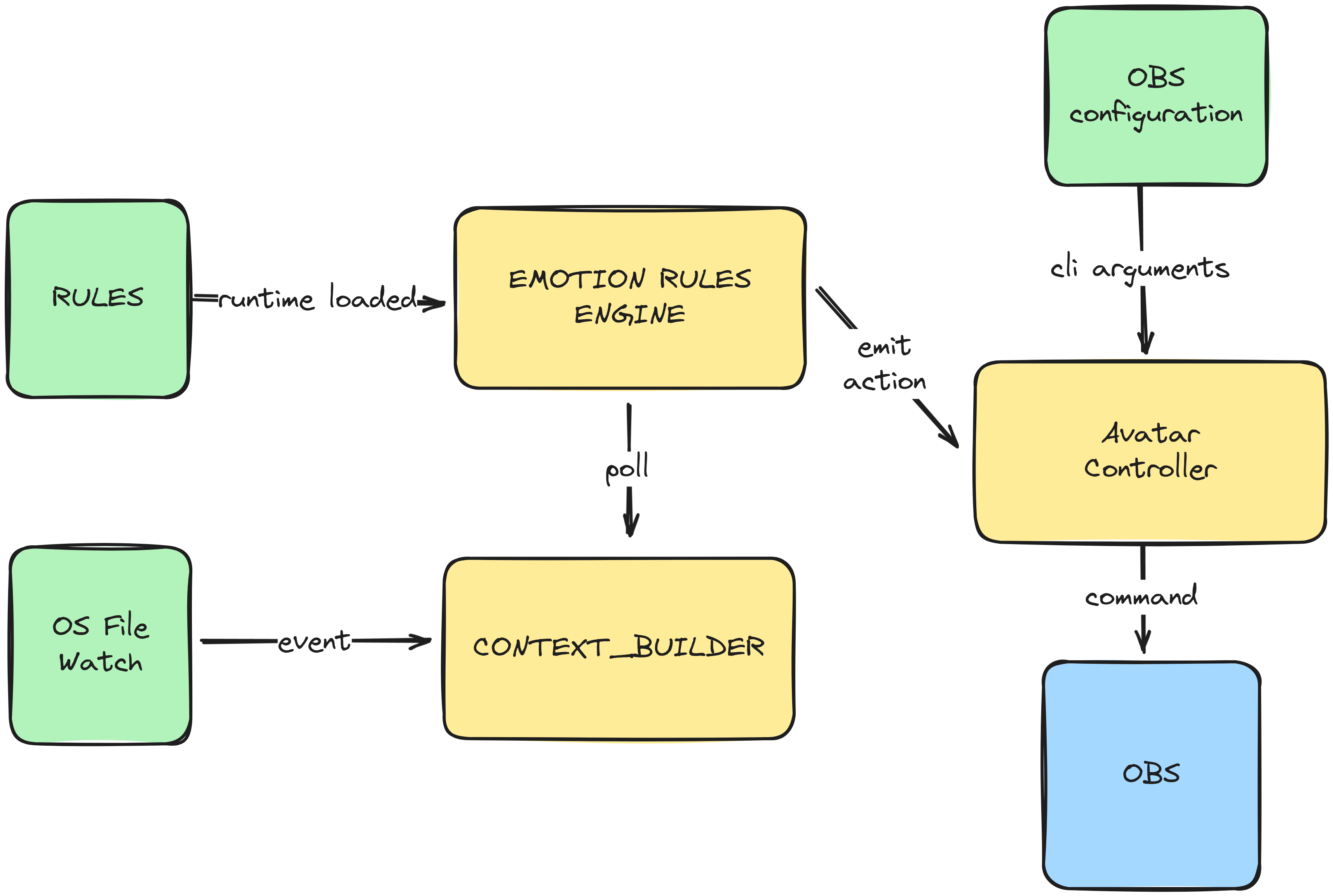

So, let's take a quick look at our diagram of what we thought we wanted to build at the start of the blog post again:

Ok, and looking at our code and drawing up a quick diagram of what we actually built:

So, a bit off from our initial vision, but still pretty close. Considering at the start of this blog post I hadn't read the chapter on channels yet, I think that getting close to the mark on this is good enough. We've got a pretty solid framework in place for us to expand on, and I think I'll probably write up a 3rd blog post in the near future for some additional features and changes I can already tell I want to do.

Specifically, I'm thinking:

- Replace OBS with a window + texture

- Introduce a timer + emit an event for idle animations such as blinking or other easter eggs

- Implement hot reloading of the rules list for faster tuning of parameters and actions

And of course, I need to actually get around to using my actual VTuber character Za-chan instead of the various Nao screenshots I've been using as placeholders. That can wait until next weekend though! For now I think it's time to go play some video games and relax for a bit.