Avatar Creation

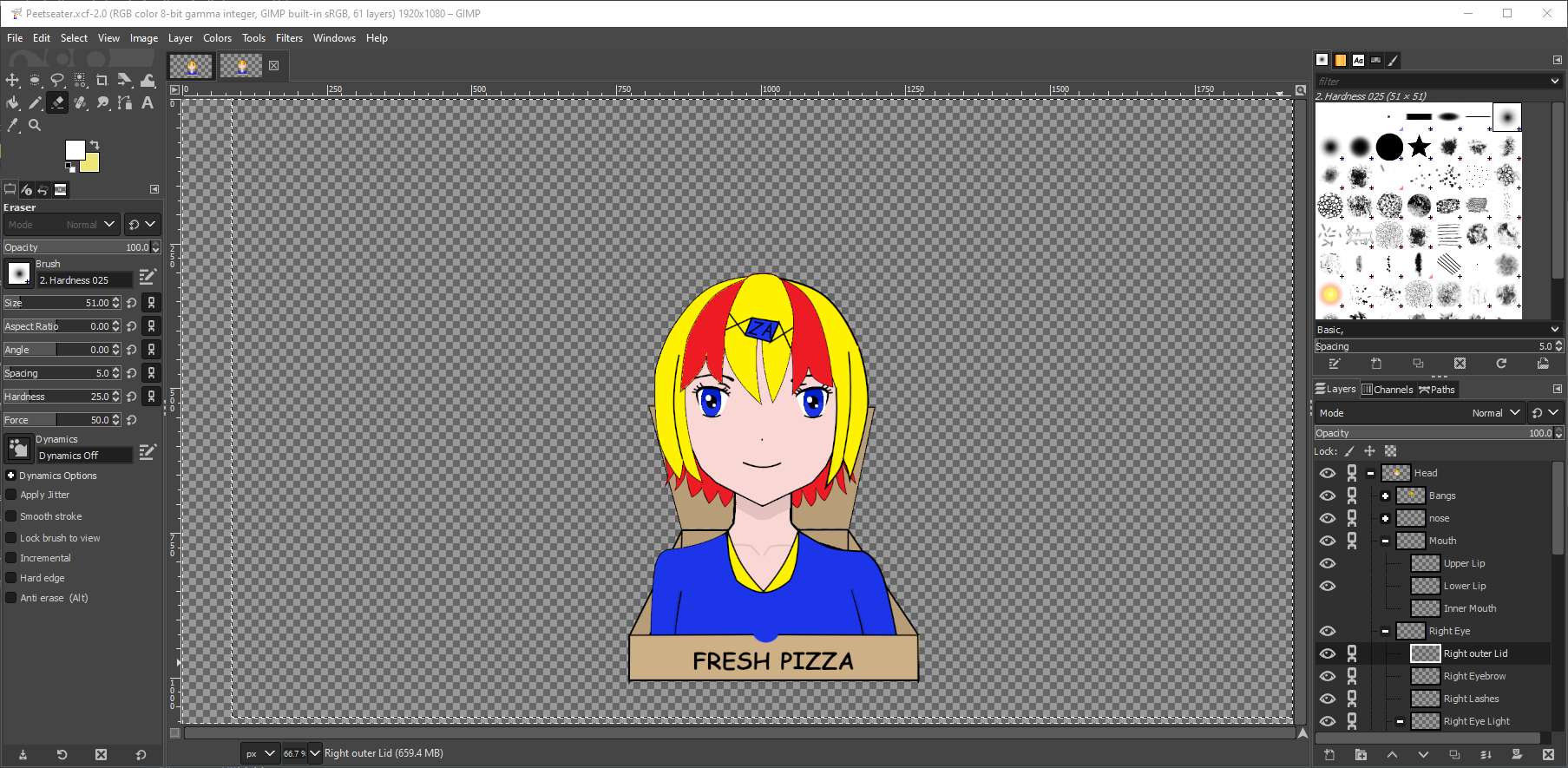

Well, time to stop procrastinating. I made my avatar before with Gimp and so I suppose I should start there again.

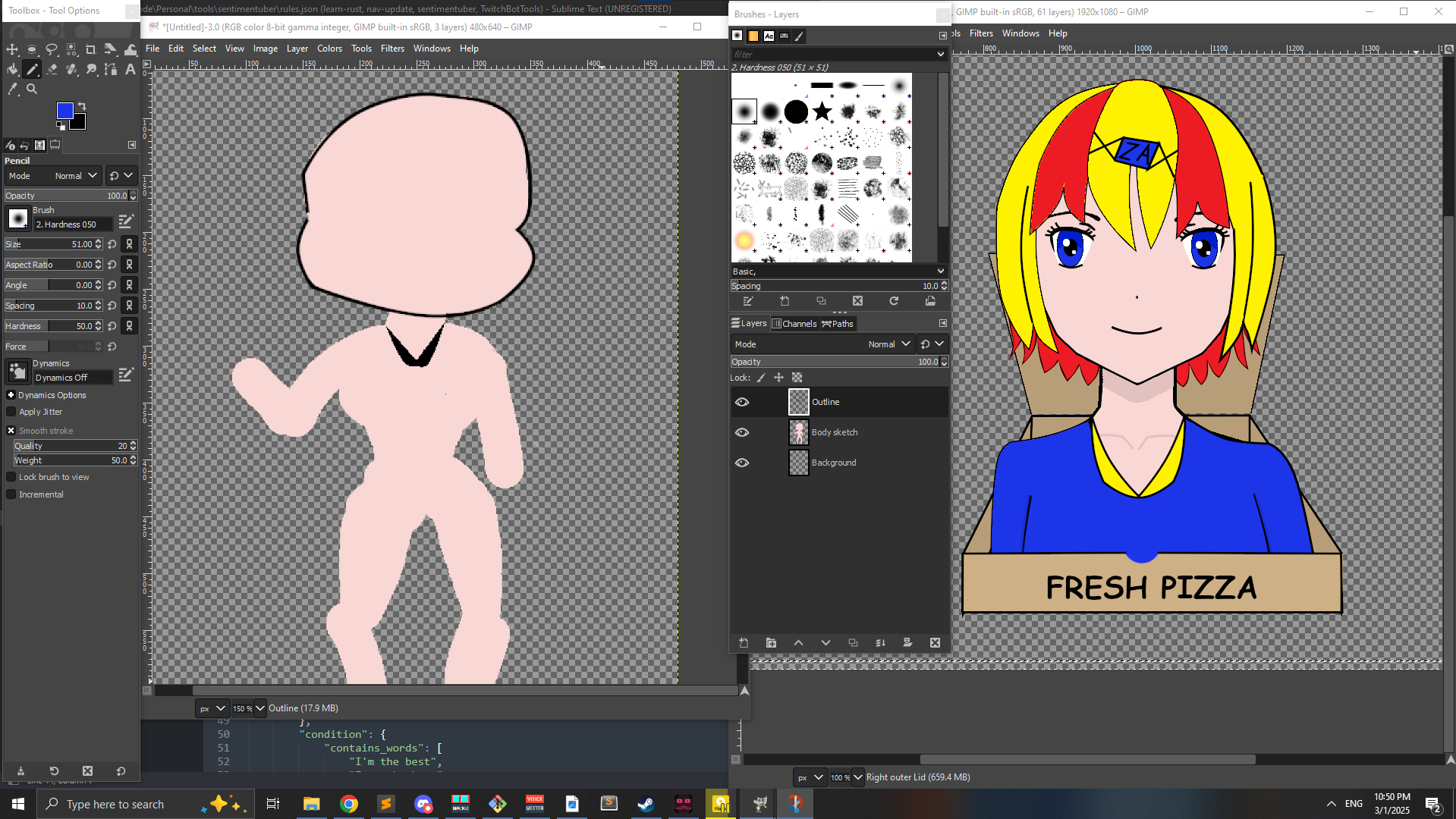

Except, maybe I don't want to go full box mode again, and maybe I could even give Za-chan a body... but I'm not an artist. So, how about a chibi? That should be easier than trying to make a "proper" body, right? Some deformity is fine and maybe it will even be more expressive this way! Yeah, that sounds good.

A few minutes of trying to draw an outline with the path tool later, I was googling for any sort of reference material I could find: Blue Archive chibi styles, online tutorials about how to draw chibis, the usual things one does when you've got no clue. And then I stumbled onto charat, and this seemed like a great way to start! Mainly because drawing with a mouse sucks.

An hour or so later, after looking back and forth between my Gimp window with Za-chan and the chibi editor, I decided that I wouldn't do any post-editing after saving the chibi file. Why? Because if I was going to vary each file by a mouth, eyebrow, or other expression, then I'd be editing a lot of files afterwards. Even if I got into a good rhythm, that'd still be a lot. Obviously, if I wanted to shell out 300 or so dollars, Charat could make a Live2D model for me, but that's not what we're trying to accomplish here! We're making a PNG tuber that we don't need to control with a camera, dang it!

Unfortunately, this also meant that Za-chan's little "ZA" hairpiece was probably going to be left out, and her stark contrasting streaks of hair would be absent, but, I think I got a good approximation of her with the chibi editor:

I took some liberty with her outfit. The original Za-chan just has a simple collar and blue shirt. But I always like the half off shoulder lazy type of look. With a touch of cuteness from the bow, and a bit of tomboyishness from her cutoff shorts and poofy jacket, little Za-chan was ready to be exported! Also, if I keep using OBS, or even if I don't, I could make a small image of her hair clip and overlay it in OBS itself maybe.

Either way, Za-chan is ready for her chibi debut!

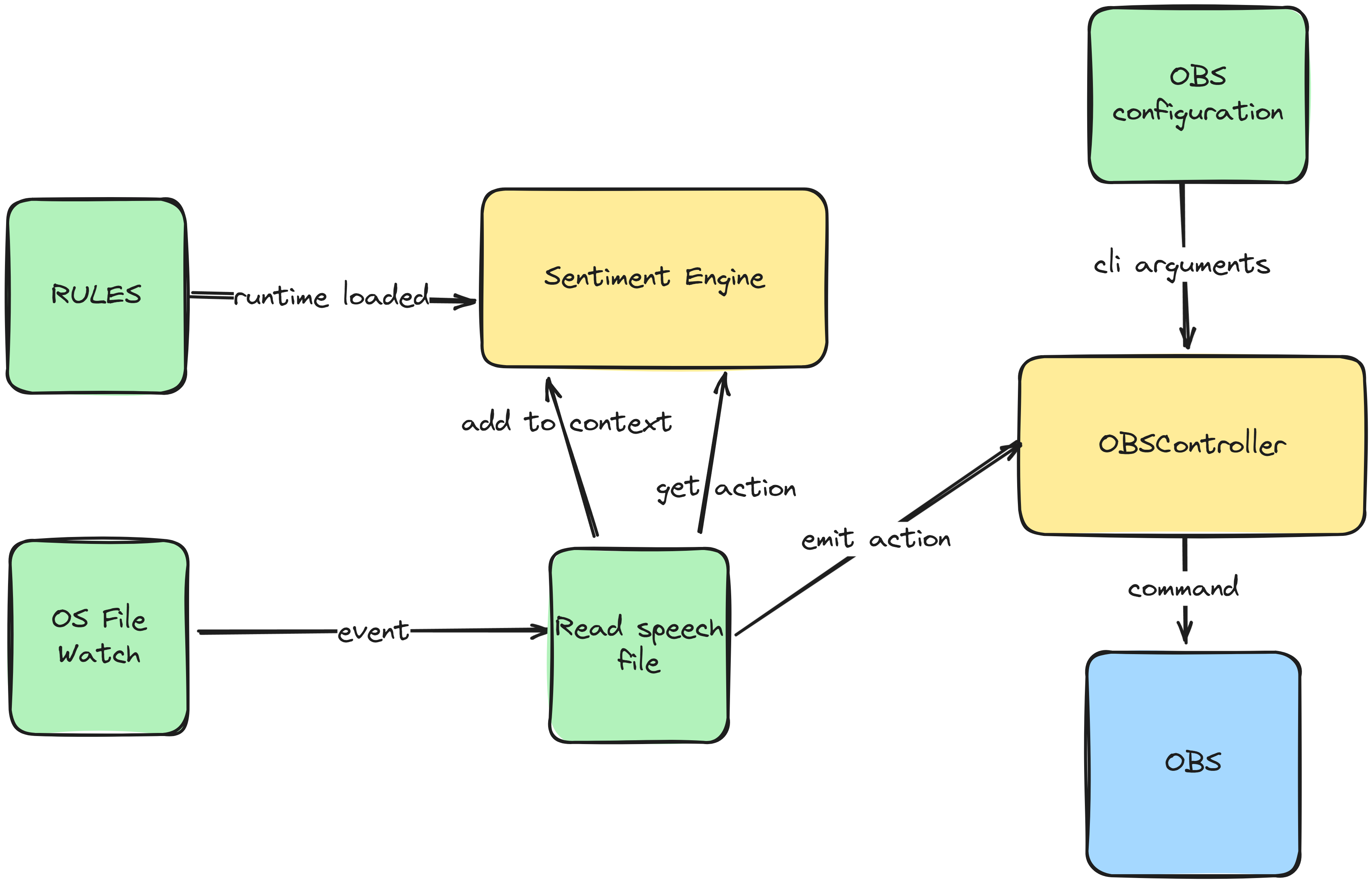

CLI tool to help me define sentiment rules

Now, here's the hard part. I can tweak her mouth, eyes, eyebrows, and other bits as much as I'd like and save the files. But... how do we figure out which image to show? Well, we've got our rules that let us:

- Select an image based on word(s)

- Select an image based on relationships between sentiment flags of negative, neutral, and positive

- Select an image based on strict ranges of any combination of negative, neutral, and positive sentiments
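To make those three kinds of rules a bit more concrete, here's a rough, standalone sketch of how they could be modeled. The names (`Polarity`, `Field`, `Condition`) are made up for illustration and are not the types from the actual project, and the range rule here only checks a single score rather than any combination of them:

```rust
/// Scores in the shape the analyzer prints: neg, neu and pos.
#[derive(Debug, Clone, Copy)]
struct Polarity {
    neg: f64,
    neu: f64,
    pos: f64,
}

/// Which of the three scores a rule is talking about.
#[derive(Debug, Clone, Copy)]
enum Field {
    Neg,
    Neu,
    Pos,
}

impl Polarity {
    fn get(&self, field: Field) -> f64 {
        match field {
            Field::Neg => self.neg,
            Field::Neu => self.neu,
            Field::Pos => self.pos,
        }
    }
}

/// Hypothetical rule conditions, one per bullet above.
enum Condition {
    /// Matches when the text contains any of these words.
    ContainsWord(Vec<String>),
    /// Matches when `left` scores strictly higher than `right`.
    GreaterThan { left: Field, right: Field },
    /// Matches when `field` falls inside `low..=high`.
    InRange { field: Field, low: f64, high: f64 },
}

impl Condition {
    fn applies_to(&self, text: &str, polarity: &Polarity) -> bool {
        match self {
            Condition::ContainsWord(words) => {
                let lowered = text.to_lowercase();
                words.iter().any(|word| lowered.contains(&word.to_lowercase()))
            }
            Condition::GreaterThan { left, right } => {
                polarity.get(*left) > polarity.get(*right)
            }
            Condition::InRange { field, low, high } => {
                let value = polarity.get(*field);
                *low <= value && value <= *high
            }
        }
    }
}

fn main() {
    let text = "Hey everyone, welcome to the stream";
    let polarity = Polarity { neg: 0.0, neu: 0.625, pos: 0.375 };
    let conditions = [
        ("word", Condition::ContainsWord(vec!["welcome".to_string()])),
        ("relationship", Condition::GreaterThan { left: Field::Pos, right: Field::Neg }),
        ("range", Condition::InRange { field: Field::Neu, low: 0.5, high: 0.7 }),
    ];
    for (name, condition) in &conditions {
        println!("{name}: {}", condition.applies_to(text, &polarity));
    }
}
```

The point is only that each rule is a predicate over the text plus the three scores, so picking an image boils down to finding a rule whose predicate is true.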

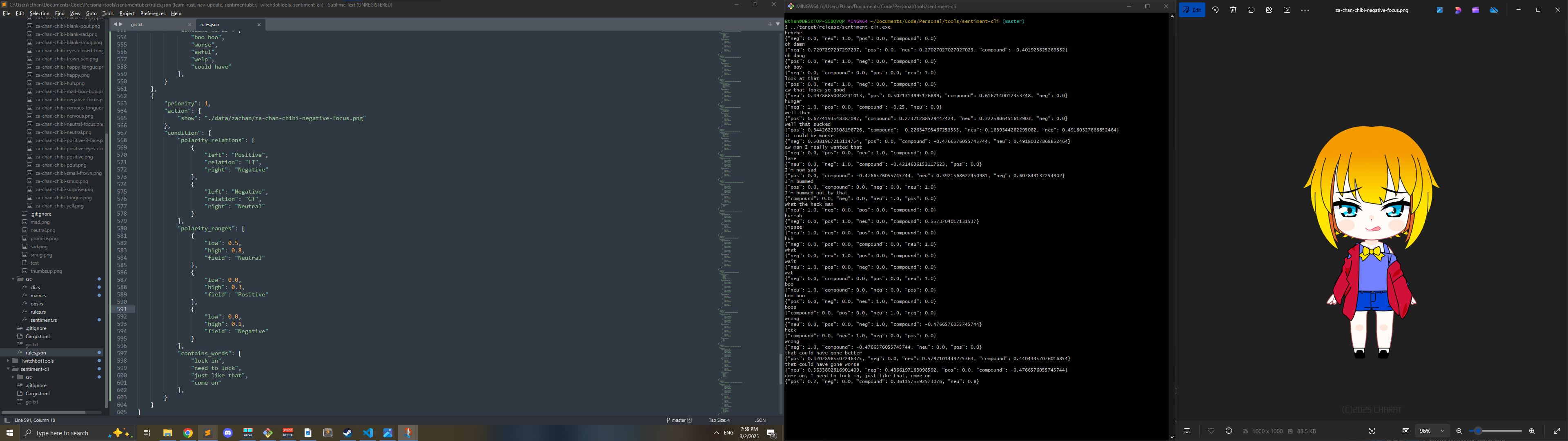

But, well, I don't really know which sentiment values correspond to which expression. So, I figured I'd do a simple experiment! Let's look at each picture of Za-chan, come up with a sentence or two I might be saying when I'd want her to be shown, and then run it through the analysis! And since I've made quite a few images, let's write a little program to do this for us quickly. Since we've already got the ongoing sentiment tuber program we're using, let's quickly use that as a base:

cp -r sentimentuber sentiment-cli
cd sentiment-cli/
rm -rf data/
rm rules.json
rm src/obs.rs src/rules.rs src/sentiment.rs

Next, I'll tweak the clap object so that it can either open up a file to read line by line for input, or just have it take the input from the terminal for quick feedback.

use std::path::PathBuf;

use clap::Parser;

#[derive(Debug, Parser)]

#[command(version, about, long_about = None)]

pub struct Config {

#[arg(short = 'f', long = "file")]

pub file_input: Option<PathBuf>,

#[arg(short = 'i', long = "in")]

pub input: Option<String>,

}

impl Config {

pub fn parse_env() -> Config {

Config::parse()

}

}

Simple enough, and then our main program (the only other file we have left in the project) becomes this:

mod cli;

use cli::Config;

use std::fs;

fn main() {

let config = Config::parse_env();

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

if let Some(sentence) = config.input {

print_sentiment(&sentence, &analyzer);

}

if let Some(handle_name) = config.file_input {

let file = fs::read_to_string(handle_name).expect("Could not read file");

file.lines().for_each(|line| {

print_sentiment(&line, &analyzer);

});

}

}

fn print_sentiment(sentence: &str, analyzer: &vader_sentiment::SentimentIntensityAnalyzer) {

let scores = analyzer.polarity_scores(sentence);

println!("{:?}", scores);

}

Straightforward and easy to use. Let's see what we get with regular input after a quick cargo build --release:

$ ../target/release/sentiment-cli.exe -i "Hey everyone, welcome to the stream"

{"neg": 0.0, "compound": 0.4588314677411235, "neu": 0.625, "pos": 0.375}

And with multiple sentences in a file?

echo "It's wednesday! I hope everyone is excited to see more Xenoblade.

Last time I was really mad about that stupid boss.

But it's all okay now." > text.txt

$ ../target/release/sentiment-cli.exe -f text.txt

{"neu": 0.5885815185403179, "neg": 0.0, "compound": 0.6800096092036356, "pos": 0.4114184814596822}

{"pos": 0.0, "compound": -0.7649686210234002, "neu": 0.5147058823529412, "neg": 0.4852941176470588}

{"neu": 0.6299212598425197, "compound": 0.3291459381423852, "neg": 0.0, "pos": 0.3700787401574803}
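As a rough guide for reading the compound number, the original VADER project documents a convention where a compound score of 0.05 or more counts as positive, -0.05 or less counts as negative, and anything in between is neutral. Here's a tiny sketch of that convention. `Mood` and `classify` are names I made up for this example, and I'm assuming the Rust crate's compound score is on the same scale as the original's:

```rust
#[derive(Debug, PartialEq)]
enum Mood {
    Negative,
    Neutral,
    Positive,
}

/// Buckets a compound score using the commonly cited +/-0.05 cutoffs.
fn classify(compound: f64) -> Mood {
    if compound >= 0.05 {
        Mood::Positive
    } else if compound <= -0.05 {
        Mood::Negative
    } else {
        Mood::Neutral
    }
}

fn main() {
    // The three compound scores from the text.txt run above.
    for compound in [0.6800096092036356, -0.7649686210234002, 0.3291459381423852] {
        println!("{compound:+.3} -> {:?}", classify(compound));
    }
}
```

My rules work off the neg, neu, and pos values rather than compound, so this is just a sanity check that the numbers line up with how the sentences feel.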

Great. So we've got an easy way to start getting a feedback loop. Now for the part that I've been procrastinating on by writing this section: actually sitting down and doing the work. So... let's procrastinate one more time by realizing that writing a file and then triggering terminal commands isn't as fun as just typing into the terminal and hitting enter. Sounds like a job for stdin()! Luckily for me, there's a really simple example in the rust docs and so we get this:

use std::io;

fn main() -> io::Result<()> {

let analyzer = vader_sentiment::SentimentIntensityAnalyzer::new();

let mut buffer = String::new();

loop {

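let bytes_read = io::stdin().read_line(&mut buffer)?;

// Trim the line ending: "\n" on Unix, "\r\n" on Windows

let line = buffer.trim_end();

// bytes_read == 0 means end of input, which is what CTRL+D gives us on Unix

if bytes_read == 0 || line == "exit" || line == "\u{4}" {

break;

}

print_sentiment(line, &analyzer);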

buffer.clear();

}

Ok(())

}

fn print_sentiment(sentence: &str, analyzer: &vader_sentiment::SentimentIntensityAnalyzer) {

let scores = analyzer.polarity_scores(sentence);

println!("{:?}", scores);

}

Now I can type into the terminal and either type exit or press CTRL+D to get out of the program. We've even dropped the dependency on clap, so the program is tiny now too. Or well, as tiny as the vader sentiment crate will let it be. Anyway, now I really can't keep procrastinating and need to write some rules. Once I've finished that, I'll be back to start in on the next section of this blog post.

Now that I've written a bunch of rules with ranges and relationships, I suppose I'll just keep refining things until stuff triggers when I want it to. One idea that comes to mind is that, since I picked everything pretty arbitrarily, I might want to visualize or graph the ranges I made in some way. I probably have plenty of overlap after all. But before I go down spreadsheet lane, I tried it out for a half hour or so and, while it wasn't perfect, it did work surprisingly well. So, in the interest of my sanity, I'm not going to try to tweak the rules more right now.
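If I ever do want to check for overlap without opening a spreadsheet, a small helper could do it. This is just a sketch under the assumption that each rule can be boiled down to one closed range on one score. `RangeRule` and the rule names are hypothetical, not the actual rule format:

```rust
#[derive(Debug, Clone)]
struct RangeRule {
    name: String,
    low: f64,
    high: f64,
}

fn rule(name: &str, low: f64, high: f64) -> RangeRule {
    RangeRule { name: name.to_string(), low, high }
}

/// Two closed ranges overlap when each one starts before the other ends.
fn overlaps(a: &RangeRule, b: &RangeRule) -> bool {
    a.low <= b.high && b.low <= a.high
}

/// Returns the names of every pair of rules whose ranges overlap.
fn overlapping_pairs(rules: &[RangeRule]) -> Vec<(String, String)> {
    let mut pairs = Vec::new();
    for (index, a) in rules.iter().enumerate() {
        for b in &rules[index + 1..] {
            if overlaps(a, b) {
                pairs.push((a.name.clone(), b.name.clone()));
            }
        }
    }
    pairs
}

fn main() {
    let rules = vec![
        rule("happy", 0.5, 1.0),
        rule("smile", 0.3, 0.6),
        rule("neutral", 0.0, 0.2),
    ];
    for (first, second) in overlapping_pairs(&rules) {
        println!("{first} overlaps with {second}");
    }
}
```

Since the first matching rule wins, an overlap isn't necessarily a bug, but it does mean the order of the rules decides which image shows up.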

What to do if I don't talk for a while?

That said, while working on it, I noticed that the avatar definitely gets "stuck" in the last position fairly often. Most likely because if I'm not talking, there's nothing to "tick" as it were and restore us to the neutral position. On top of that, the default image if no rule matches is hardcoded, so... let's fix that too while we're here before we move on.

First up, let's update the CLI object since we need to take the default in somewhere:

use clap::Parser;

#[derive(Debug, Parser)]

#[command(version, about, long_about = None)]

pub struct Config {

...

/// Default show action for the sentiment if no rule matches

#[arg(short = 'a', long = "action_default")]

pub default_action: String,

...

}

Then of course, we need to use it somewhere. So, in the main method when we're doing some setup we can just move the string into the right type of object.

let default_action = SentimentAction {

show: config.default_action.clone()

};

The next design choice is whether we should use a method like set_default_action after we've created the SentimentEngine, or pass the default along to the constructor. I didn't really want to pass anything but the closure in as far as the interface went, but I also don't think there's any reasonable default we could fall back on. An empty string is just asking to explode later on if a user (or me in the future) forgets to call that set method. That's an easy mistake to make, so let's update the constructor and its call site:

// In main

let mut polarity_engine = SentimentEngine::new(default_action, |sentence| {

get_context_polarity(sentence, &analyzer)

});

// In sentiment.rs

pub struct SentimentEngine<PolarityClosure>

where

PolarityClosure: Fn(&str) -> ContextPolarity

{

text_context: VecDeque<(Instant, String)>,

current_context: String,

rules: Vec<SentimentRule>,

polarity_closure: PolarityClosure,

context_retention_seconds: u64,

default_action: SentimentAction

}

impl<PolarityClosure> SentimentEngine<PolarityClosure>

where

PolarityClosure: Fn(&str) -> ContextPolarity

{

pub fn new(default_action: SentimentAction, polarity_closure: PolarityClosure) -> Self {

SentimentEngine {

text_context: VecDeque::new(),

current_context: String::new(),

rules: Vec::new(),

polarity_closure,

context_retention_seconds: 10,

default_action

}

}

...

}

and of course, the last step is to change the hardcoded value over to the dynamic one now. So, this:

pub fn get_action(&self) -> SentimentAction {

let polarity = self.get_polarity();

let maybe_action = self.rules.iter().find(|&rule| {

rule.applies_to(&self.current_context, &polarity)

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => SentimentAction {

show: "./data/zachan/za-chan-chibi-positive.png".to_string()

}

}

}

Becomes

pub fn get_action(&self) -> SentimentAction {

let polarity = self.get_polarity();

let maybe_action = self.rules.iter().find(|&rule| {

rule.applies_to(&self.current_context, &polarity)

});

match maybe_action {

Some(rule_based_action) => rule_based_action.action.clone(),

None => self.default_action.clone()

}

}

Easy. Now, what about the fact that if I go silent for a while, we're stuck on whatever the last image being shown was? Well, the simplest solution is to just start up a new thread and tick every 10 seconds or so. Since the notify sender emits a vector of debounced events we can just pass along an empty one of those:

let (sender, receiver) = mpsc::channel();

... use sender.clone() for the debouncer's producer.

thread::spawn(move || {

loop {

let sleep_for_seconds = Duration::from_secs(config.context_retention_seconds);

thread::sleep(sleep_for_seconds);

let _ = sender.send(Ok(Vec::new()));

}

});

Because we don't use the data in the event and just take an action when an event comes in, this will work just fine. As a reminder, the receiving code looks like this:

for res in receiver {

match res {

Ok(_) => {

let new_context = fs::read_to_string(path).expect("could not get text data from file shared with localvocal");

polarity_engine.add_context(new_context);

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action).unwrap();

}

Err(e) => {

eprintln!("watch error: {:?}", e);

}

};

}

Searching around on the internet shows that there are a ton of ways to do this. Tokio has some polling utilities, and there are entire crates dedicated to the idea of run_later and similar. But, looking at my task manager, it doesn't seem like the simple sleep approach on an extra thread is causing any spin locking or CPU spikes, so I'm going to roll with this for now.
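For what it's worth, here's a sketch of one of those other ways that only needs the standard library: instead of a second thread that sleeps, the receiving side can wait with recv_timeout and treat a timeout as the tick. This is an alternative to what this post uses, not what the program actually does. `Update` and `next_update` are made-up names, and the channel carries plain strings here rather than notify's debounced events:

```rust
use std::sync::mpsc::{self, Receiver, RecvTimeoutError};
use std::thread;
use std::time::Duration;

#[derive(Debug, PartialEq)]
enum Update {
    /// Something actually arrived on the channel.
    Text(String),
    /// Nothing arrived within the quiet period.
    Tick,
}

/// Waits for the next message. Returns `Some(Update::Tick)` if the channel
/// stayed quiet for `quiet_for`, and `None` once every sender is gone.
fn next_update(receiver: &Receiver<String>, quiet_for: Duration) -> Option<Update> {
    match receiver.recv_timeout(quiet_for) {
        Ok(text) => Some(Update::Text(text)),
        Err(RecvTimeoutError::Timeout) => Some(Update::Tick),
        Err(RecvTimeoutError::Disconnected) => None,
    }
}

fn main() {
    let (sender, receiver) = mpsc::channel();

    thread::spawn(move || {
        sender.send("hello".to_string()).unwrap();
        thread::sleep(Duration::from_millis(150));
        // The sender is dropped when this thread ends, which stops the loop below.
    });

    while let Some(update) = next_update(&receiver, Duration::from_millis(50)) {
        println!("{update:?}");
    }
}
```

One difference in behavior: this ticks after a period of silence rather than on a fixed schedule, which is arguably closer to "I stopped talking" anyway.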

The only issue of course is that the file we're loading the text from isn't updated at all. So, we'll just keep sending the most recent context over and over again, which would result in, you guessed it, the same image being shown at all times. So, how do we fix that?

Well, we could take advantage of the fact that the receiver has an Err path and intentionally send an error along, then in the error handler, we add an empty string to the context and emit the action event to the OBS channel. This would work, though it feels a bit hacky. This would look something like this:

// Tick every retention seconds to make sure we update the image even if we're not actively talking

thread::spawn(move || {

loop {

let sleep_for_seconds = Duration::from_secs(config.context_retention_seconds);

thread::sleep(sleep_for_seconds);

let _ = sender.send(Err(notify::Error::new(notify::ErrorKind::Generic("TickHack".to_string()))));

}

});

// Blocks forever

for res in receiver {

match res {

Ok(_) => {

let new_context = fs::read_to_string(path).expect("could not get text data from file shared with localvocal");

polarity_engine.add_context(new_context);

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action).unwrap();

}

Err(e) => {

polarity_engine.add_context(String::new());

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action).unwrap();

eprintln!("watch error: {:?}", e);

}

};

}
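Why would adding an empty string help at all? The idea is that adding context is also the moment where old context gets thrown away. Here's a rough sketch of that, assuming the engine prunes entries by age whenever context is added. `ContextWindow` and `add_context_at` are made-up names for illustration, the timestamp is passed in so it's easy to play with, and the real add_context may well differ:

```rust
use std::collections::VecDeque;
use std::time::{Duration, Instant};

struct ContextWindow {
    entries: VecDeque<(Instant, String)>,
    retention: Duration,
}

impl ContextWindow {
    fn new(retention: Duration) -> Self {
        Self { entries: VecDeque::new(), retention }
    }

    /// Drops entries older than the retention period, then records `text`.
    /// An empty `text` (a tick) only triggers the pruning.
    fn add_context_at(&mut self, now: Instant, text: String) {
        while self
            .entries
            .front()
            .map_or(false, |(seen_at, _)| now.duration_since(*seen_at) > self.retention)
        {
            self.entries.pop_front();
        }
        if !text.is_empty() {
            self.entries.push_back((now, text));
        }
    }

    /// Everything still inside the window, joined into one string to analyze.
    fn current_context(&self) -> String {
        self.entries
            .iter()
            .map(|(_, text)| text.as_str())
            .collect::<Vec<_>>()
            .join(" ")
    }
}

fn main() {
    let start = Instant::now();
    let mut window = ContextWindow::new(Duration::from_secs(10));

    window.add_context_at(start, "that stupid boss".to_string());
    println!("after talking: {:?}", window.current_context());

    // Eleven seconds of silence later, a tick arrives with no text.
    window.add_context_at(start + Duration::from_secs(11), String::new());
    println!("after the tick: {:?}", window.current_context());
}
```

With an empty context, no rule should match, and the default action takes over.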

But... I feel like there's a better way. We could pretty easily define a new channel that takes in this type and outputs a new type, right? Then let the receiver of the new channel be used by our ticking mechanism as well, right? Basically, I want to define a map method like in the usual functional constructs. Or well, if I knew how to do that nicely, I would, but uh...

Right now we've got the debounced events coming in on the receiver, and the tick coming in on another sender. If we tried to just copy things around so that both of the receivers add context to the polarity engine, we'd run into a problem when we wrote this:

let (tick_sender, tick_receiver) = mpsc::channel();

thread::spawn(move || {

loop {

let sleep_for_seconds = Duration::from_secs(config.context_retention_seconds);

thread::sleep(sleep_for_seconds);

let _ = tick_sender.send(());

}

});

thread::spawn(move || {

for _ in tick_receiver {

polarity_engine.add_context(String::new());

let sentiment_action = polarity_engine.get_action();

obs_sender.send(sentiment_action).unwrap();

}

});

Here we get the error error[E0382]: borrow of moved value: `polarity_engine`, because we'd be trying to use the polarity engine in two places, and that won't fly: the move closure hands ownership of the engine to the new thread, so the file watch loop can't touch it anymore. It'd be overly complicated to add a mutex onto the engine, so instead let's approach this from a better angle: the input to the engine!

... obs sender/receiver setup the same...

let (sender, receiver) = mpsc::channel();

let (context_sender, context_receiver) = mpsc::channel();

let tick_sender = context_sender.clone();


thread::spawn(move || {

loop {

let sleep_for_seconds = Duration::from_secs(config.context_retention_seconds);

thread::sleep(sleep_for_seconds);

let _ = tick_sender.send(String::new()); // <-- we send strings now

}

});

...

thread::spawn(move || {

for new_context in context_receiver {

polarity_engine.add_context(new_context);

let sentiment_action = polarity_engine.get_action();

if let Err(send_error) = obs_sender.send(sentiment_action) {

eprintln!("{:?}", send_error);

}

}

});

...

for res in receiver {

match res {

Ok(_) => {

match fs::read_to_string(path) {

Ok(new_context) => {

if let Err(send_error) = context_sender.send(new_context) {

eprintln!("{:?}", send_error);

}

},

Err(_) => {

eprintln!("could not get text data from file shared with localvocal")

}

}

}

Err(e) => {

eprintln!("watch error: {:?}", e);

}

};

}

For that last part, since I stopped using .expect to test how resilient swapping around

images being sent to OBS was, I unwrapped the matches. If we wanted, we could write something like this:

res.map_err(|e| {

format!("Watch error {:?}", e)

}).and_then(|_| {

fs::read_to_string(path).map_err(|_| {

"could not get text data from file shared with localvocal".into()

})

}).and_then(|new_context| {

context_sender.send(new_context).map_err(|send_err| {

format!("Send error {:?}", send_err)

})

}).unwrap_or_else(|err| {

eprintln!("{:?}", err)

});

But, and I can't quite put my finger on why, it just doesn't feel right alongside the rest of the code, even though unwrapping a result in a result normally suggests to me that I should tweak things if possible. But, eh. I think I can write it nicely with an early continue within the loop instead, and that will satisfy the part of my brain yelling at me, so:

for res in receiver {

if let Err(error) = res {

eprintln!("Recv error {error:?}");

continue;

}

match fs::read_to_string(path) {

Err(file_error) => {

eprintln!("could not get text data from file shared with localvocal: {file_error:?}");

continue;

},

Ok(new_context) => {

if let Err(send_error) = context_sender.send(new_context) {

eprintln!("{:?}", send_error);

}

}

}

}

That feels better. Anyway, code style aside, we now have a common type shared between the file watch's receiver block and the tick, so the polarity engine can live off in its own isolated thread and process the updates they send it. This is lovely. Some of you are probably screaming at me that since I can do for res in receiver, I could also just get an iterator and map over it to change the type coming out of the receiver. But that doesn't change our need for a layer of indirection to get a shared type on the channel: we need a shared input type, not an output one.
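Stripped of notify, OBS, and the sentiment analysis, the shape of this setup can be sketched with just the standard library. Everything here is a stand-in: `spawn_engine` plays the role of the polarity engine thread, and the "actions" are just strings I made up for the example:

```rust
use std::sync::mpsc::{self, Receiver, Sender};
use std::thread;

/// Stand-in for the polarity engine: it owns its state, lives on exactly one
/// thread, and only ever hears about the world through `context_receiver`.
fn spawn_engine(
    context_receiver: Receiver<String>,
    action_sender: Sender<String>,
) -> thread::JoinHandle<()> {
    thread::spawn(move || {
        for new_context in context_receiver {
            // An empty string is the tick; anything else is fresh text.
            let action = if new_context.is_empty() {
                "neutral.png".to_string()
            } else {
                format!("reacting-to:{new_context}")
            };
            if action_sender.send(action).is_err() {
                break;
            }
        }
    })
}

fn main() {
    let (context_sender, context_receiver) = mpsc::channel();
    let (action_sender, action_receiver) = mpsc::channel();
    let engine = spawn_engine(context_receiver, action_sender);

    // Producer one: the ticker, using a clone of the shared input sender.
    let tick_sender = context_sender.clone();
    let ticker = thread::spawn(move || {
        tick_sender.send(String::new()).unwrap();
    });
    ticker.join().unwrap();

    // Producer two: stands in for the file watch loop.
    context_sender.send("welcome to the stream".to_string()).unwrap();

    // Dropping the last sender ends the engine's loop.
    drop(context_sender);
    engine.join().unwrap();

    for action in action_receiver {
        println!("{action}");
    }
}
```

Both producers only need a Sender<String>, so neither of them ever has to touch the engine, and no mutex is needed.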

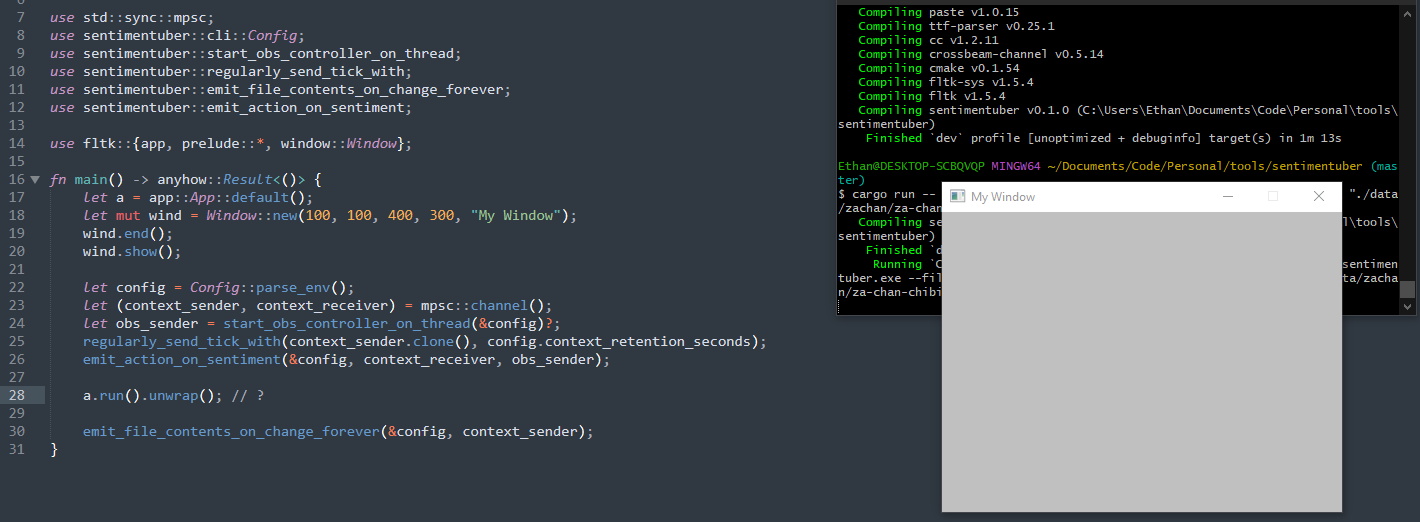

Anyway, besides some small refactorings to move the thread spawning code around and shorten up the main method from the mess we've made, I think we're done with the tweaking for the avatar creation and setup to ensure it's showing something relatively sensible at all times. Let's move on because after shuffling things into helpers, my main method now looks like this:

use std::sync::mpsc;

use sentimentuber::cli::Config;

use sentimentuber::start_obs_controller_on_thread;

use sentimentuber::regularly_send_tick_with;

use sentimentuber::emit_file_contents_on_change_forever;

use sentimentuber::emit_action_on_sentiment;

fn main() -> anyhow::Result<()> {

let config = Config::parse_env();

let (context_sender, context_receiver) = mpsc::channel();

let obs_sender = start_obs_controller_on_thread(&config)?;

regularly_send_tick_with(context_sender.clone(), config.context_retention_seconds);

emit_action_on_sentiment(&config, context_receiver, obs_sender);

emit_file_contents_on_change_forever(&config, context_sender);

}

The only thing I want to note is that the main method isn't returning Ok(()) anymore because I used the never type, aka !, as the return type of the last method:

pub fn emit_file_contents_on_change_forever(config: &Config, context_sender: Sender<String>) -> ! {

This is because that code never returns control to the main method, so if you were to put in something after that call, the rust compiler will happily tell you you're being silly like so:

warning: unreachable expression
  --> sentimentuber\src/main.rs:21:5
   |
20 |     emit_file_contents_on_change_forever(&config, context_sender);
   |     ------------------------------------------------------------- any code following this expression is unreachable
21 |     Ok((()))
   |     ^^^^^^^^ unreachable expression

Which is a pretty nice feature of the compiler to let a programmer know that there's, effectively, an infinite loop in the program. Which is how I want it: we just do a CTRL+C to quit the program when we're done with the app.

Swapping from OBS to a GUI

So, the last thing I want to dig into is probably going to take a lot more time than fiddling with rules did. In the last post I talked about how we could remove OBS from the picture here and try out using a GUI instead and then have OBS just capture that instead. One bonus about this is that I could probably also show the sentiment values on the screen if desired which might assist me to continue to tweak things based on those sentiment values. One (potential) downside is that depending on the library I choose, it might be harder to show an animated gif versus the static PNGs I made with the avatar maker site. But, until I stop procrastinating and actually try something out, I'll never know.

I was thinking about using egui or nannou in the last post, but I stumbled across this post and the user nahsheef recommended fltk which looked kind of nice. It's not an immediate mode GUI, but it seems nice to use, so I'm going to try to use it. First, updating the cargo file:

...
[dependencies]
...
fltk = "^1.5"

Then, after a quick cargo build to download and install everything, I tossed some of the sample code into my main method and

We have a window! The curious thing we need to figure out of course is how to deal with the two different loops we've got going on now, since I imagine we can't block the main thread with the context receiving and still expect the GUI to work properly. But... let's find out?

Showing an image in fltk framework seems straightforward:

let a = app::App::default();

app::background(0,255,0);

let mut wind = Window::new(100, 100, 500, 500, "PNGTuber");

let mut frame = Frame::default().with_size(500, 500).center_of(&wind);

let path = Path::new(&config.default_action);

let absolute = Path::canonicalize(path).expect("default file is not found");

let image = SharedImage::load(

absolute

).unwrap();

frame.set_image(Some(image));

wind.make_resizable(true);

wind.end();

wind.show();

a.run().unwrap();

Note that until I added a frame the image didn't actually appear. But, since my images are 1000x1000 and I'm not scaling them, we get a slightly zoomed in view of the 500 pixels in the center:

So this is a great start, and to get to the same level of operation as the OBS code, we need to make the image change. Remember that roughly the OBS code was doing this:

But now we have an event loop of the GUI window running. So, let's look into that a.run().unwrap() method real quick. That's just blocking forever and running the GUI's event loop under the hood. But, the docs note that we can implement the loop ourselves with wait:

use fltk::*;

fn main() {

let app = app::App::default();

while app.wait() {

// handle events

}

}

But, even better, if we look at the chapter on

state management, there's a pretty good example of creating our own App struct to define the type of the event

channel we care about. So, let's try to implement that really quick with a channel of type SentimentAction

so that we can hook the context messages up directly to the GUI event loop so it can set the image as desired.

First up, the struct:

pub struct MyApp {

app: app::App,

main_win: window::Window,

frame: frame::Frame,

receiver: Receiver<SentimentAction>,

pub sender: Sender<SentimentAction>

}

This is basically the exact same as the state management chapter but they've got a "count" variable for a number instead of a receiver and sender to expose to the rest of the program. Next up, the initialization of the app is all pretty much the same:

impl MyApp {

pub fn new(default_image_path: &str) -> Self {

let app = app::App::default();

app::background(0,255,0);

let mut main_win = window::Window::default().with_size(500, 500);

let col = group::Flex::default()

.with_size(500, 500)

.column()

.center_of_parent();

let mut frame = frame::Frame::default().with_label("PNGTuber");

// TODO move this to a helper method

let path = Path::new(&default_image_path);

let absolute = Path::canonicalize(path).expect("weird");

let image = SharedImage::load(

absolute

).unwrap(); // TODO

frame.set_image(Some(image));

col.end();

main_win.end();

main_win.show();

...

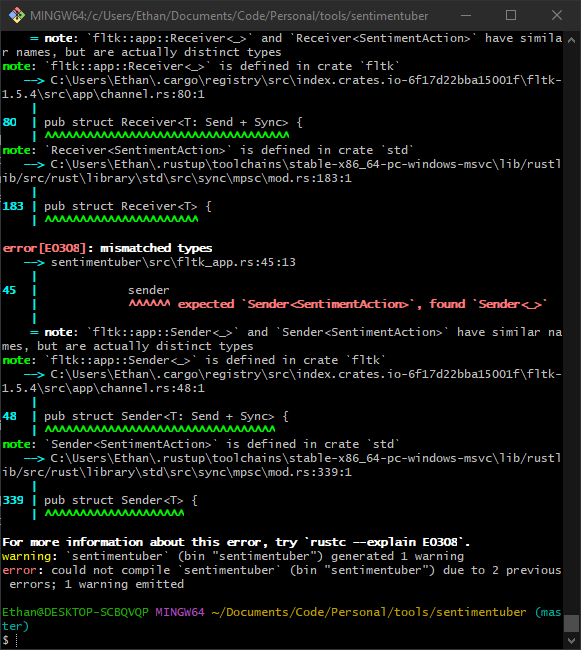

But then I ran into an interesting problem, illustrated for you by the comment here

...

// let (sender, receiver) = app::channel(); <-- this is the original line from the docs

let (sender, receiver) = std::sync::mpsc::channel();

Self {

app,

main_win,

frame,

receiver,

sender

}

}

And this error message:

So, the app channel is a different kind of sender than I need. Figuring this shouldn't really be too much of a problem because all I really need is to hook into that wait loop, I moved ahead using the standard library channel and setup the run method like the documentation showed:

pub fn run(mut self) {

while self.app.wait() {

if let Ok(sentiment_action) = self.receiver.try_recv() {

println!("{:?}", sentiment_action);

// TODO move to helper lib method or similar

let path = Path::new(&sentiment_action.show);

let absolute = Path::canonicalize(path).expect("weird");

let image = SharedImage::load(

absolute

).unwrap(); // TODO

self.frame.set_image(Some(image));

self.app.redraw();

}

}

}

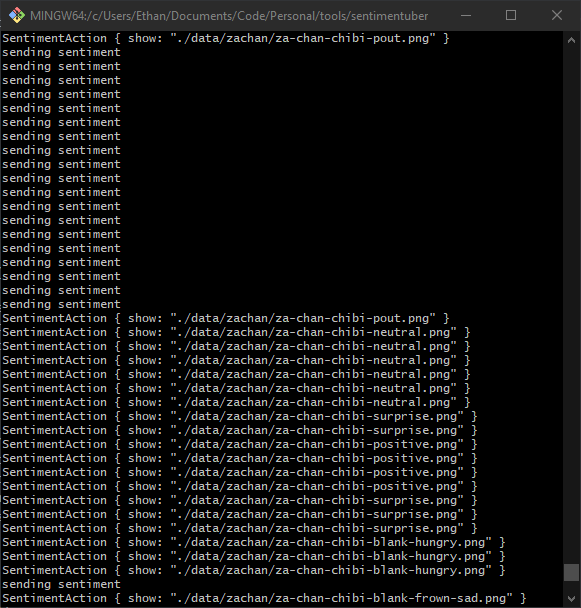

And then I ran my program and edited the text file a few times to generate events while debug logging:

See how there's a bunch of "sending sentiment" lines and then a whole bunch of SentimentAction lines? It seems like, by not being hooked into the channels the library sets up for the app, I'm not really getting any sort of responsiveness. And indeed, I did a bunch of different edits, sentences, and all sorts of things over the course of an entire minute before those actions started popping up in the loop all at once.

So it feels like the fact that this library is a retained GUI and I'm not hooked into it properly is biting me. What's a guy to do? Well, either I can spend a few more hours trying out different examples and trying to understand why one Sender isn't actually the same as the other and how to messily glue them all together, or I could pivot back to my original plan and use an immediate mode GUI, which should be simpler because there are no callbacks or other similar things. So... let's pivot, because while I liked how fast I got up and running, I don't really want to start experimenting with interior mutability and arc mutexes right now.

So, back to egui! Let's see how quickly we can get to the same place we were with fltk.

cargo add eframe
cargo add egui_extras

Then tweaking the cargo file so that we're able to load png images since that's what I'm using:

eframe = "0.31.1"

egui_extras = {version = "0.31.1", features = ["all_loaders"]}

image = { version = "*", features = ["png"] }

And if I just mash and crash together the image example, some stackoverflow, and some searching around, I arrive at this somewhat working code:

use eframe::egui;

use egui_extras::image::RetainedImage;

use std::path::Path;

fn load_image_from_path(path: &str) -> Option<RetainedImage> {

let img = RetainedImage::from_image_bytes(path, std::fs::read(path).ok()?.as_slice()).ok()?;

Some(img)

}

struct MyApp {

image: Option<RetainedImage>,

}

impl MyApp {

fn new(path: &str) -> Self {

let image = load_image_from_path(path);

Self { image }

}

}

impl eframe::App for MyApp {

fn update(&mut self, ctx: &egui::Context, _frame: &mut eframe::Frame) {

egui::CentralPanel::default().show(ctx, |ui| {

if let Some(image) = &self.image {

image.show(ui);

} else {

ui.label("Failed to load image.");

}

});

}

}

... in main ...

let my_image = config.default_action.clone();

let options = eframe::NativeOptions::default();

eframe::run_native(

"Image Viewer",

options,

Box::new(|_cc| Ok(Box::new(MyApp::new(&my_image)))),

);

And then, ignoring that RetainedImage is apparently deprecated, I can see Za-chan peeking at us from the corner.

So how do I get her to be centered like before? And while I'm at it, let's swap to using

a nondeprecated image struct. Well, removing the RetainedImage and moving to using file

URI and the ui.image method seems to do some scaling automatically:

fn get_full_path(relative_path: &str) -> Result<String, std::io::Error> {

let path = Path::new(relative_path);

let path_buf = Path::canonicalize(path)?;

Ok(path_buf.display().to_string())

}

struct MyApp {

current_file_path: String,

new_image_receiver: mpsc::Receiver<SentimentAction>,

new_image_sender: mpsc::Sender<SentimentAction>

}

impl MyApp {

fn new(path: String) -> Self {

let (new_image_sender, new_image_receiver) = mpsc::channel();

Self {

current_file_path: path,

new_image_sender,

new_image_receiver

}

}

}

impl eframe::App for MyApp {

fn update(&mut self, ctx: &egui::Context, _frame: &mut eframe::Frame) {

if let Ok(new_action) = self.new_image_receiver.try_recv() {

if let Ok(new_file) = get_full_path(&new_action.show) {

self.current_file_path = new_file;

}

}

egui_extras::install_image_loaders(&ctx);

egui::CentralPanel::default().show(ctx, |ui| {

ui.image(format!("file://{0}", self.current_file_path));

});

}

}

But... for some reason, the file watch has stopped working! I can see my tick firing at a regular interval as expected, but the file change events aren't coming through. After some head scratching, I took another look at what I had changed so that the eframe app became the thing run by the main method, rather than the infinite context receiving loop in emit_file_contents_on_change_forever:

pub fn emit_file_contents_on_change_forever(config: Config, context_sender: Sender<String>) {

let (sender, receiver) = mpsc::channel();

let mut debouncer = new_debouncer(Duration::from_millis(config.event_debouncing_duration_ms), sender).unwrap();

let path = config.input_text_file_path.as_path();

debouncer.watcher().watch(path, RecursiveMode::Recursive).unwrap();

eprintln!("I'll start watching the file now {0}", path.display());

thread::spawn(move || {

for res in receiver {

println!("????");

...

}

});

}

As you can see, I spawn a new thread and start up the infinite loop in the background. As a shot in the dark, I tried moving the entire setup of both the loop and the watcher itself into the thread:

pub fn emit_file_contents_on_change_forever(config: Config, context_sender: Sender<String>) {

thread::spawn(move || {

let (sender, receiver) = mpsc::channel();

let mut debouncer = new_debouncer(Duration::from_millis(config.event_debouncing_duration_ms), sender).unwrap();

let path = config.input_text_file_path.as_path();

debouncer.watcher().watch(path, RecursiveMode::Recursive).unwrap();

eprintln!("I'll start watching the file now {0}", path.display());

for res in receiver {

println!("????");

...

}

});

}

And it suddenly started working again! I'm not really sure why, since I was splitting the creation of the sender and receiving end of channels across threads in other places (like the OBS startup method!), but doing this made everything start behaving again. So if you know why this particular thing decided to stop behaving, I'd love to know.
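My best guess, and it is only a guess that I haven't confirmed against the notify source: the debouncer acts as a guard that stops watching when it gets dropped. In the first version it was a local variable in a function that now returns right after spawning the thread, so it would be dropped immediately. In the second version it lives inside the thread's closure for as long as the loop runs. Here's a standard library only sketch of that difference, where `WatcherGuard` is a made-up stand-in for the debouncer:

```rust
use std::sync::atomic::{AtomicBool, Ordering};
use std::sync::mpsc::{self, Receiver, Sender};
use std::sync::Arc;
use std::thread::{self, JoinHandle};

/// Stand-in for the debouncer: `running` is true only while the guard is alive.
struct WatcherGuard {
    running: Arc<AtomicBool>,
}

impl WatcherGuard {
    fn start(running: Arc<AtomicBool>) -> Self {
        running.store(true, Ordering::SeqCst);
        Self { running }
    }
}

impl Drop for WatcherGuard {
    fn drop(&mut self) {
        self.running.store(false, Ordering::SeqCst);
    }
}

/// First version: the guard is a local of this function, so it is dropped
/// as soon as the function returns, even though the thread lives on.
fn watch_outside_thread(running: Arc<AtomicBool>) -> JoinHandle<()> {
    let _guard = WatcherGuard::start(running);
    thread::spawn(|| {
        // The receive loop would run here, but nothing is watching anymore.
    })
}

/// Second version: the guard is created inside the thread and stays alive
/// until the thread's closure finishes.
fn watch_inside_thread(
    running: Arc<AtomicBool>,
    started: Sender<()>,
    stop: Receiver<()>,
) -> JoinHandle<()> {
    thread::spawn(move || {
        let _guard = WatcherGuard::start(running);
        started.send(()).unwrap();
        // Stand-in for `for res in receiver { ... }`: block until told to stop.
        let _ = stop.recv();
    })
}

fn main() {
    let outside = Arc::new(AtomicBool::new(false));
    watch_outside_thread(outside.clone()).join().unwrap();
    println!("outside: still watching? {}", outside.load(Ordering::SeqCst));

    let inside = Arc::new(AtomicBool::new(false));
    let (started_sender, started_receiver) = mpsc::channel();
    let (stop_sender, stop_receiver) = mpsc::channel();
    let handle = watch_inside_thread(inside.clone(), started_sender, stop_receiver);
    started_receiver.recv().unwrap();
    println!("inside: still watching? {}", inside.load(Ordering::SeqCst));

    stop_sender.send(()).unwrap();
    handle.join().unwrap();
}
```

That would also explain why the old version worked fine back when the function never returned: the guard simply never went out of scope.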

Anyway, after I got this working, I could see the file change events appearing in the console. And yet... the image that egui was showing me wasn't changing unless I moused over or brought focus back to the window. Looking around, I found that this github issue said that egui should be working fine on windows 10. But... no dice, unless I moused over it didn't update! I did try to be clever and request an update whenever an event happened so that we'd only request the update when we really needed to, like this:

    impl eframe::App for MyApp {
        fn update(&mut self, ctx: &egui::Context, _frame: &mut eframe::Frame) {
            if let Ok(new_action) = self.new_image_receiver.try_recv() {
                if let Ok(new_file) = get_full_path(&new_action.show) {
                    self.current_file_path = new_file;
                    // doesn't actually work!
                    ctx.request_repaint();
                }
            }

            egui_extras::install_image_loaders(&ctx);
            egui::CentralPanel::default().show(ctx, |ui| {
                ui.image(format!("file://{0}", self.current_file_path));
            });
        }
    }

But when I edited the file and saw the events come through, it wasn't until I hovered over or focused the window that I'd see any changes, and if I added a println next to the request_repaint call, I'd only see it show up when I did.

Some more reading later and I saw a recommendation to put the request_repaint call into the main loop. So I tried that:

    impl eframe::App for MyApp {
        fn update(&mut self, ctx: &egui::Context, _frame: &mut eframe::Frame) {
            // Note that if we DON'T request a repaint after some time,
            // then we'll never see any repaints unless we hover over the window
            // with our mouse or similar. Since we want this to change in the
            // background so that OBS can capture it, we need to do this.
            // Requesting 1s from now is better than calling request_repaint()
            // every frame and results in CPU% of like 0.00001 versus 2.7% or so
            ctx.request_repaint_after(std::time::Duration::from_secs(1));

            if let Ok(new_action) = self.new_image_receiver.try_recv() {
                if let Ok(new_file) = get_full_path(&new_action.show) {
                    self.current_file_path = new_file;
                    // This may not be necessary, but also know that it never
                    // worked to get around the issue that the request_repaint_after
                    // is solving above.
                    ctx.request_repaint();
                }
            }

            egui_extras::install_image_loaders(&ctx);
            egui::CentralPanel::default().show(ctx, |ui| {
                ui.image(format!("file://{0}", self.current_file_path));
            });
        }
    }

And as you can see from the comment I wrote in there, this worked! I then tweaked it to use the timed version of the method (request_repaint_after) so that I didn't eat too much CPU. I was watching the program in my task manager and it went from hovering at a bit over 2% to almost always 0%.
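A likely reason the first attempt couldn't work, stated as a sketch rather than a confirmed diagnosis: egui only calls update when something triggers a repaint, so a try_recv that lives inside update never gets a chance to run while the window sits idle in the background. The timed repaint acts as a heartbeat that keeps the polling alive. Here's a toy model of that using only the standard library (no egui involved). `ToyApp`, `run_with_heartbeat`, and the file names are made up for illustration.

```rust
use std::sync::mpsc::{self, Receiver};
use std::thread;
use std::time::Duration;

/// Toy stand-in for the egui app (hypothetical, no egui involved).
struct ToyApp {
    receiver: Receiver<String>,
    current: String,
}

impl ToyApp {
    /// Same shape as `update`: poll the channel without blocking.
    /// Returns true when a new image path arrived.
    fn update(&mut self) -> bool {
        match self.receiver.try_recv() {
            Ok(new_path) => {
                self.current = new_path;
                true
            }
            Err(_) => false,
        }
    }
}

/// Wakes the app on a fixed interval, playing the role of the timed repaint.
fn run_with_heartbeat() -> String {
    let (sender, receiver) = mpsc::channel();
    let mut app = ToyApp {
        receiver,
        current: String::from("neutral.png"),
    };

    // Background producer, standing in for the sentiment thread.
    thread::spawn(move || {
        thread::sleep(Duration::from_millis(20));
        let _ = sender.send(String::from("happy.png"));
    });

    // Nothing else ever calls `update`, so without this loop the message
    // would just sit in the channel.
    for _ in 0..200 {
        if app.update() {
            break;
        }
        thread::sleep(Duration::from_millis(10));
    }
    app.current
}
```

The trade-off is the same one as in the real app: a shorter interval picks up changes sooner but wakes up more often, and a longer one is cheaper but adds up to one interval of delay before the new image shows.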

So now, we just need to tweak some options and settings and we should have what we had with fltk, but with an actual updating UI! Let's make the image background green so that it's easy to filter out via greenscreen on OBS so I don't have to dig into the (I assume hellish) world of getting the window to actually be transparent:

    egui::CentralPanel::default().show(ctx, |ui| {
        let image = egui::Image::from_uri(format!("file://{0}", self.current_file_path)).bg_fill(egui::Color32::GREEN);
        ui.add(image);
    });

We had to swap from ui.image to using ui.add because the call to ui.image returns a Response, and not an Image that we can actually call methods on for stuff like the fill and sizing.

I'm not exactly sure how to get the image to not scale like it did in fltk, but I think I can figure that out another day, or honestly just ignore it considering I'll be resizing the avatar in OBS anyway.

So, I'll move this over to its own file, gui.rs and our final code is:

    use eframe::egui;
    use std::sync::mpsc;
    use crate::rules::SentimentAction;
    use crate::get_full_path;

    pub struct AvatarGreenScreen {
        current_file_path: String,
        new_image_receiver: mpsc::Receiver<SentimentAction>,
        pub new_image_sender: mpsc::Sender<SentimentAction>
    }

    impl AvatarGreenScreen {
        pub fn new(path: String) -> Self {
            let (new_image_sender, new_image_receiver) = mpsc::channel();
            Self {
                current_file_path: path,
                new_image_sender,
                new_image_receiver
            }
        }
    }

    impl eframe::App for AvatarGreenScreen {
        fn update(&mut self, ctx: &egui::Context, _frame: &mut eframe::Frame) {
            ctx.request_repaint_after(std::time::Duration::from_secs(1));

            if let Ok(new_action) = self.new_image_receiver.try_recv() {
                if let Ok(new_file) = get_full_path(&new_action.show) {
                    self.current_file_path = new_file;
                    // This may not be necessary, but also know that it never
                    // worked to get around the issue that the request_repaint_after
                    // is solving above.
                    ctx.request_repaint();
                }
            }

            egui_extras::install_image_loaders(&ctx);
            egui::CentralPanel::default().show(ctx, |ui| {
                let image = egui::Image::from_uri(format!("file://{0}", self.current_file_path)).bg_fill(egui::Color32::GREEN);
                ui.add(image);
            });
        }
    }

And in the main.rs file:

    use eframe::egui;
    use std::sync::mpsc;
    use sentimentuber::cli::Config;
    use sentimentuber::regularly_send_tick_with;
    use sentimentuber::emit_file_contents_on_change_forever;
    use sentimentuber::emit_action_on_sentiment;
    use sentimentuber::get_full_path;
    use sentimentuber::gui::AvatarGreenScreen;

    fn main() -> Result<(), Box<dyn std::error::Error>> {
        let config = Config::parse_env();
        let (context_sender, context_receiver) = mpsc::channel();

        let starting_image = get_full_path(&config.default_action)?;
        let app = AvatarGreenScreen::new(starting_image);
        let to_gui_sender = app.new_image_sender.clone();

        regularly_send_tick_with(context_sender.clone(), config.context_retention_seconds);
        emit_file_contents_on_change_forever(config.clone(), context_sender);
        emit_action_on_sentiment(&config, context_receiver, to_gui_sender);

        let options = eframe::NativeOptions {
            viewport: egui::ViewportBuilder::default().with_inner_size([1000.0, 1000.0]),
            ..Default::default()
        };

        let handle = eframe::run_native(
            "PNGTuber",
            options,
            Box::new(|_cc| Ok(Box::new(app))),
        );

        Ok(handle?)
    }

I thought about moving the eframe and run_native call over to a method inside of the gui file, but considering it has to be run by the main method and then loop forever, it feels better for it to sit inside of the main method. We're splitting hairs though, so let's move along.

Wrap up ↩

So, at the start of this adventure I was showing random screenshots by sending updates to an image source in OBS. At the end, we've got our chibi avatar, rules defined for when each image should be shown, a background tick to ensure that we go back to the neutral default image when we're not talking, and lastly, we've removed the need for OBS to do anything besides capture a window we set up that takes up very little CPU.

Not bad.

The only thing that's still waiting in the wings, in my mind, before I call this little project ready to be used and tuned live is the fact that there's no mouth flap. Which isn't really a code change. I'll have to update the image loader feature to be able to load gifs, and then tweak the chibi images to have moving mouth flaps. This would get us a basic sort of animation for showing that we're talking, and then have the neutral position not have any movement so that at worst, each 10s tick we return back to not moving.

I think there's some other stuff we could do that would require code changes, such as taking in the incoming context string, estimating how long an animation should be "played" for, and then doing that. At that point we're probably stepping towards creating a small state machine for the avatar to keep those types of things sane, and that's an idea to explore in another blog post in the future.
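To make the state machine idea a little more concrete, here's a rough sketch of what it might look like. Everything in it is hypothetical: the `AvatarState` enum, the two transition methods, and especially the 400ms-per-word guess in `estimate_talk_time` are placeholders to show the shape, not anything from the actual project.

```rust
use std::time::Duration;

/// Hypothetical avatar states: idle, or talking for a bit longer.
#[derive(Debug, Clone, PartialEq)]
enum AvatarState {
    Neutral,
    Talking { remaining: Duration },
}

/// Made-up estimate: assume roughly 400ms of mouth flap per word.
fn estimate_talk_time(context: &str) -> Duration {
    let words = context.split_whitespace().count() as u32;
    Duration::from_millis(400) * words
}

impl AvatarState {
    /// New context text arrived: start (or restart) the talking timer.
    /// Empty or whitespace-only text leaves the state unchanged.
    fn on_context(self, context: &str) -> AvatarState {
        let remaining = estimate_talk_time(context);
        if remaining.is_zero() {
            self
        } else {
            AvatarState::Talking { remaining }
        }
    }

    /// Some time passed: count down, and fall back to neutral when done.
    fn on_tick(self, elapsed: Duration) -> AvatarState {
        match self {
            AvatarState::Talking { remaining } if remaining > elapsed => {
                AvatarState::Talking { remaining: remaining - elapsed }
            }
            _ => AvatarState::Neutral,
        }
    }
}
```

With this shape, the existing background tick would call `on_tick` and the file watcher would call `on_context`, so the "go back to neutral" rule lives in one place instead of being spread across threads.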

Hope this was somewhat interesting for you all to read, and again, if you know what's up with that file watch + channel + thread::spawn thing not sending events out, swing by the stream and let me know!